Hi,

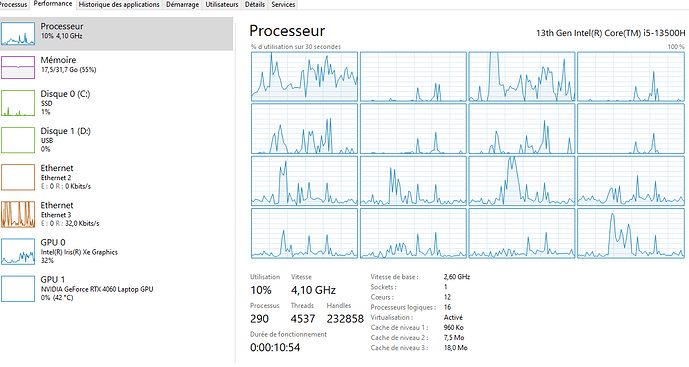

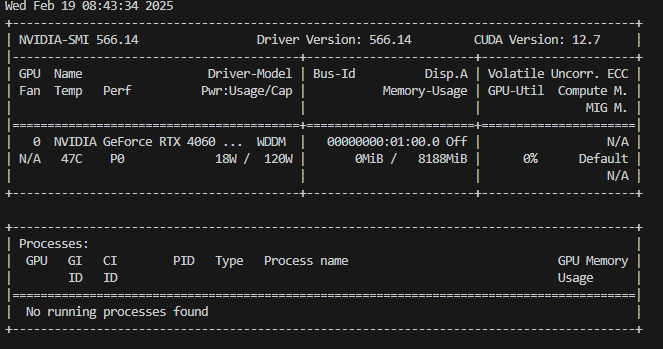

I have the setup shown in the images above.

On both Windows and WSL2, CUDA is reported as available, but I can't get LLM inference to run on the GPU.

The models appear to be loaded into GPU memory, but inference seems to run on the CPU.
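One way to narrow this down, assuming the model is a PyTorch `nn.Module`, is to check where its weights actually live. If part of the model stayed on the CPU (or was offloaded there), the CPU ends up doing part of the work even though GPU memory is in use. The helper below is a hypothetical sketch, not part of any library. It only relies on `model.parameters()`, `tensor.device.type` and `tensor.numel()`.

```python
from collections import Counter


def parameter_devices(model):
    """Hypothetical helper: count parameter elements per device type.

    Returns e.g. {'cuda': 7_000_000_000} for a fully GPU-resident model,
    or a mix such as {'cuda': ..., 'cpu': ...} if some layers stayed on the CPU.
    """
    counts = Counter()
    for p in model.parameters():
        # p.device.type is 'cuda' for GPU tensors and 'cpu' for host tensors
        counts[p.device.type] += p.numel()
    return dict(counts)
```

Usage, with `model` being whatever object your script already loaded: `print(parameter_devices(model))`. Any `'cpu'` entry means those weights are being computed on the CPU.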

Is there a way to monitor GPU activity?
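A common approach is NVIDIA's `nvidia-smi` tool, which ships with the driver and also works inside WSL2. Running `nvidia-smi -l 1` refreshes its table every second. To log just utilization and memory while a generation runs, the sketch below polls `nvidia-smi --query-gpu=... --format=csv,noheader,nounits`. The function names and the 1-second interval are illustrative choices, not an established tool. If memory is high but utilization stays near 0% during generation, the GPU is holding the weights without doing the compute.

```python
import shutil
import subprocess
import time

# Fields passed to nvidia-smi; with nounits, utilization is in % and memory in MiB
QUERY = "utilization.gpu,memory.used,memory.total"


def _to_int(value):
    """Convert one CSV field to int; fields like '[N/A]' become None."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_nvidia_smi_csv(text):
    """Parse `--format=csv,noheader,nounits` output: one line per GPU."""
    rows = []
    for line in text.strip().splitlines():
        util, used, total = (_to_int(v) for v in line.split(","))
        rows.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return rows


def sample_gpus():
    """Take one snapshot of every visible GPU via nvidia-smi."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_nvidia_smi_csv(out)


def watch(interval=1.0, iterations=None):
    """Print GPU stats every `interval` seconds (forever if iterations is None)."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found on PATH")
    n = 0
    while iterations is None or n < iterations:
        for i, gpu in enumerate(sample_gpus()):
            print(f"GPU{i}: {gpu['util_pct']}% util, "
                  f"{gpu['mem_used_mib']}/{gpu['mem_total_mib']} MiB")
        n += 1
        time.sleep(interval)
```

Run `watch()` in a second terminal while the model is generating text.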

I installed PyTorch with pip, using the extra index URL for the CUDA build of torch.
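To confirm that the Python environment actually running inference is the one with the CUDA wheel (and not, say, a different venv with a CPU-only build), a quick check like the following may help. It is a sketch that only uses documented `torch` attributes. `torch.version.cuda` is `None` on CPU-only builds, whose `torch.__version__` usually ends in `+cpu`.

```python
def torch_cuda_report():
    """Summarize which torch build is importable and whether it sees a GPU."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,     # e.g. ends in '+cpu' for CPU wheels
        "built_with_cuda": torch.version.cuda,  # None on CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


print(torch_cuda_report())
```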