Hello, I’m new to PyTorch and a bit confused about what exactly I’m building.

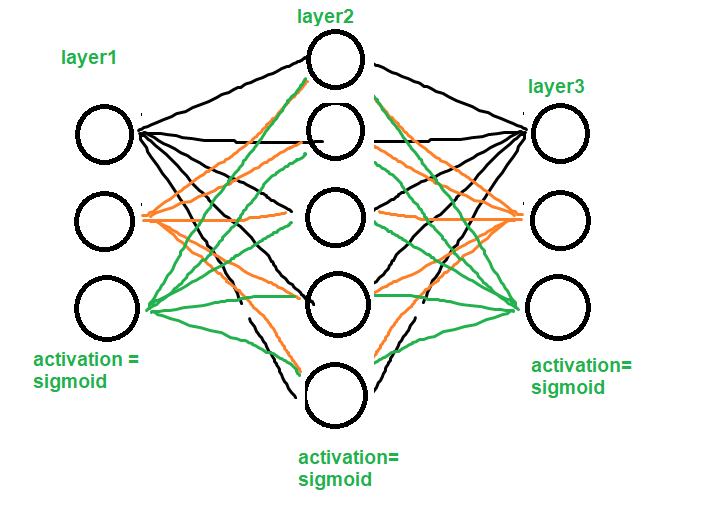

So far I’ve never used any ML framework, and my knowledge is based on theory only. I’m learning PyTorch and understand autograd and various other things, but now that it’s time to build something real, I’m facing a new issue: I’m not sure exactly how to build a neural network with a specific design. Look at the image above.

Okay, so suppose I want to build the neural network shown in the picture. When I look at the PyTorch documentation for the various layers I can use, I see the Linear layer, which I believe is what I need for my desired architecture. However, there is no way to specify a non-linear activation function with it.

Then I explored more and found “activation functions” (note: NOT layers), and now I’m confused. The Sigmoid function doesn’t seem to have the same kind of constructor as the Linear layer, where I can specify the input and output shapes.
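
To show what I mean, here is a minimal sketch of the difference between the two constructors. The sizes (4 inputs, 3 outputs, batch of 2) are arbitrary values I picked for illustration, not taken from the picture. As far as I can tell, `torch.nn.Sigmoid` is applied element-wise, so it accepts a tensor of any shape and returns one of the same shape, which would explain why it takes no shape arguments:

```python
import torch

# Arbitrary sizes, chosen only for illustration (not from the picture).
linear = torch.nn.Linear(in_features=4, out_features=3)
sigmoid = torch.nn.Sigmoid()  # constructor takes no shape arguments

x = torch.randn(2, 4)  # batch of 2 samples, 4 features each
h = linear(x)          # Linear changes the last dimension: (2, 4) -> (2, 3)
a = sigmoid(h)         # Sigmoid is element-wise, so the shape stays (2, 3)

print(h.shape, a.shape)
```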

So what do I do? Does PyTorch want me to use Linear layers and activation functions separately, like this?

    import torch

    class xyz(torch.nn.Module):
        def __init__(self, in_features, out_features):
            super().__init__()
            self.layer1 = torch.nn.Linear(in_features, out_features)
            self.layer1_1 = torch.nn.Sigmoid()
            # ...

        def forward(self, inp):
            output1 = self.layer1(inp)
            output1_after_activation = self.layer1_1(output1)
            # ...
            # ... above code repeated 2 more times for the remaining 2 layers
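
Spelled out in full, the pattern I have in mind would look roughly like the sketch below. The class name `ThreeLayerNet` and the layer sizes (4, 8, 8, 1) are placeholders I made up, since the real sizes depend on the picture. I am also assuming a single `Sigmoid` instance can be reused for all three layers, because it has no learnable parameters:

```python
import torch

class ThreeLayerNet(torch.nn.Module):  # hypothetical name
    def __init__(self, n_in=4, n_hidden=8, n_out=1):  # placeholder sizes
        super().__init__()
        self.layer1 = torch.nn.Linear(n_in, n_hidden)
        self.layer2 = torch.nn.Linear(n_hidden, n_hidden)
        self.layer3 = torch.nn.Linear(n_hidden, n_out)
        # Sigmoid holds no parameters, so one instance is shared here.
        self.act = torch.nn.Sigmoid()

    def forward(self, inp):
        # Each layer: linear transform first, then the non-linearity.
        out = self.act(self.layer1(inp))
        out = self.act(self.layer2(out))
        out = self.act(self.layer3(out))
        return out

model = ThreeLayerNet()
y = model(torch.randn(5, 4))  # batch of 5 samples, 4 features each
print(y.shape)
```

Calling `model(...)` rather than `model.forward(...)` is the usual way to run the forward pass of a `Module`.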

Am I correct, or did I get it all wrong?