Using a vgg16() pretrained model

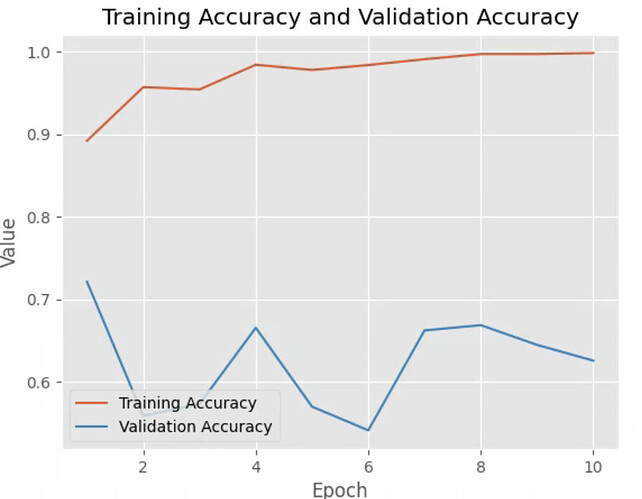

I am trying to solve an image classification problem with 3 classes. However, after fine-tuning and collecting more images for training and testing, my training/testing accuracy and training/testing loss do not seem to be improving, and the model appears to be overfitting. Can anyone advise?

Results

Data augmentation technique

    transform = transforms.Compose([
        transforms.Grayscale(num_output_channels=3),
        transforms.Resize(image_size),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        # transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.1, hue=0.1),
        # transforms.RandomAffine(degrees=40, translate=None, scale=(1, 2), shear=15, interpolation=False, fill=0),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
        # transforms.Lambda(lambda x: x[0]) # remove color channel
    ])

My model training function

    # Imports this function relies on; `device` is defined elsewhere in my script.
    import copy
    import pdb
    import time

    import numpy as np
    import torch
    import torch.nn.functional as F
    from sklearn import metrics  # assumed source of classification_report

    def train_model(model, dataloaders, criterion, optimizer, scheduler, epochs=3):
        since = time.time()
        best_model_wts = copy.deepcopy(model.state_dict())
        best_acc = 0.0

        # Initialize lists to store loss and accuracy values
        train_losses = []
        val_losses = []
        val_accuracies = []
        train_accuracies = []
        predicted_labels = []
        true_labels = []
        total = 0
        correct = 0
        softmax_probs = []

        for epoch in range(epochs):
            print(f'Epoch {epoch}/{epochs - 1}')
            print('-' * 10)

            for phase in ['train', 'val']:
                if phase == 'train':
                    model.train()  # set model to training mode
                else:
                    model.eval()  # set model to evaluation mode

                running_loss = 0.0
                running_corrects = 0

                # Iterate over data
                for inputs, labels in dataloaders[phase]:
                    inputs, labels = inputs.to(device), labels.to(device)

                    # zero the parameter gradients
                    optimizer.zero_grad()

                    # forward
                    # track history only in the train phase
                    with torch.set_grad_enabled(phase == 'train'):
                        outputs = model(inputs)
                        _, preds = torch.max(outputs, 1)
                        loss = criterion(outputs, labels)
                        softmax_output = F.softmax(outputs, dim=1)
                        softmax_probs.extend(softmax_output.detach().cpu().numpy())

                        # backward + optimize only if in training phase
                        if phase == 'train':
                            loss.backward()
                            optimizer.step()

                    # statistics
                    running_loss += loss.item() * inputs.size(0)
                    running_corrects += torch.sum(preds == labels.data).cpu()

                epoch_loss = running_loss / len(dataloaders[phase].dataset)
                epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset)

                print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

                if phase == 'train':
                    # Step the scheduler once per epoch, then record the training metrics
                    scheduler.step()
                    train_losses.append(epoch_loss)
                    train_accuracies.append(epoch_acc)
                else:
                    # Append the loss and accuracy values for validation set
                    val_losses.append(epoch_loss)
                    val_accuracies.append(epoch_acc)

                # deep copy the model
                if phase == 'val' and epoch_acc > best_acc:
                    best_acc = epoch_acc
                    best_model_wts = copy.deepcopy(model.state_dict())

            print()

        time_elapsed = time.time() - since
        print(f'Training complete in {time_elapsed // 60:.0f}m {time_elapsed % 60:.0f}s')
        print(f'Best val Acc: {best_acc:4f}')

        # Restore the weights with the best validation accuracy
        model.load_state_dict(best_model_wts)

        num_images = 5  # Number of images to visualize
        pdb.set_trace()

        # Get a random subset of images and corresponding softmax probabilities
        indices = np.random.choice(len(dataloaders['val'].dataset), num_images, replace=False)
        sample_images = [dataloaders['val'].dataset[i][0] for i in indices]
        sample_probs = [softmax_probs[i.item()] for i in indices if i.item() < len(softmax_probs)]

        # testing loop
        model.eval()
        with torch.no_grad():
            for inputs, labels in dataloaders['val']:
                images, labels = inputs.to(device), labels.to(device)
                outputs = model(images)
                # outputs = model.forward(images)
                # batch_loss = criterion(outputs, labels)
                # ps = torch.exp(outputs)
                _, predicted = torch.max(outputs.data, 1)
                total += labels.size(0)
                correct += torch.sum(predicted == labels.data)
                predicted_labels.extend(predicted.cpu().numpy())
                true_labels.extend(labels.cpu().numpy())

        print('Accuracy of the network on the 628 test images: %d %%' % (
            100 * correct / total))

        target_names = ['covid', 'pneumonia', 'regular']  # Add your own class names
        report = metrics.classification_report(true_labels, predicted_labels, target_names=target_names)
        print(report)

        return model
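For anyone reading the statistics part: `CrossEntropyLoss` returns the mean loss over a batch by default, so the loop multiplies it by the batch size before summing and then divides by the dataset size. A plain-Python sketch of that arithmetic follows; the batch losses and sizes are made-up numbers for illustration, not my results.

```python
def epoch_average(batch_means, batch_sizes):
    """Sample-weighted mean loss, mirroring running_loss / len(dataset)."""
    running_loss = 0.0
    for mean_loss, n in zip(batch_means, batch_sizes):
        # Equivalent to: running_loss += loss.item() * inputs.size(0)
        running_loss += mean_loss * n
    return running_loss / sum(batch_sizes)


# Hypothetical per-batch mean losses; the last batch is smaller than the rest.
batch_means = [0.5, 1.0, 2.0]
batch_sizes = [8, 8, 4]

weighted = epoch_average(batch_means, batch_sizes)
naive = sum(batch_means) / len(batch_means)  # ignores batch sizes

print(f"weighted={weighted:.4f} naive={naive:.4f}")
```

The two averages only differ when the last batch is smaller than the others, which is why the weighted form is used in the loop.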

Hyperparameters

- Epochs: 10 (previously tried higher epochs, but training stabilised after 10 epochs)

- Batch size = 8

- Learning rate = 0.01

- Optimizer = Adam

- Criterion = CrossEntropyLoss

- model = models.vgg16(weights='IMAGENET1K_V1')