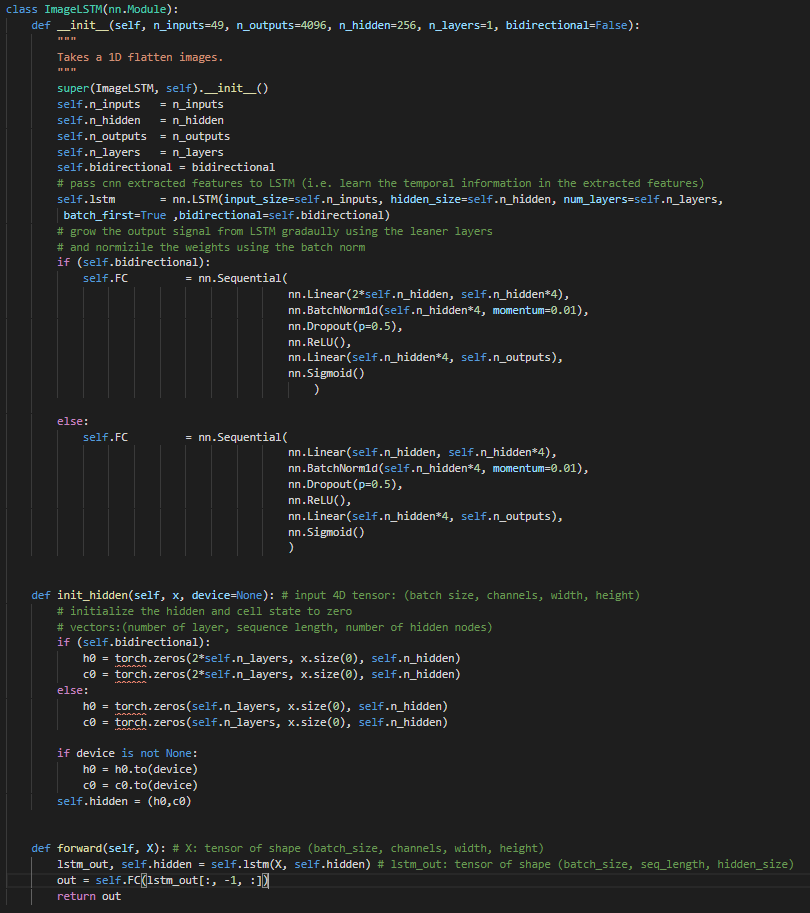

I want to use an LSTM to generate images. I have input images of shape (30,2,32,32) and target images of shape (30,1,32,32). How should I structure my data so that I can generate images using the following architecture? Or is it even possible to get reasonable results with this simple architecture?

To generate images, I would use a GAN rather than an LSTM.

Take a look at these GANs for image generation.

The images I’m generating form a sequence, which is why I’m using an LSTM, but I will have a look at the GAN repo, thanks.

The GAN framework can be used with almost any kind of neural network. Take a look at these papers, for example:

- Continuous recurrent neural networks with adversarial training https://arxiv.org/abs/1611.09904

- Real-valued (Medical) Time Series Generation with Recurrent Conditional GANs https://arxiv.org/abs/1706.02633

- Language Generation with Recurrent Generative Adversarial Networks without Pre-training https://arxiv.org/abs/1706.01399

- Contextual RNN-GANs for Abstract Reasoning Diagram Generation https://arxiv.org/abs/1609.09444

First, it works for sure.

Second, even though I’m unsure of this process, I think you can view the image-generating LSTM as the decoder LSTM of a Seq2Seq model from NLP: https://pytorch.org/tutorials/intermediate/seq2seq_translation_tutorial.html#sphx-glr-intermediate-seq2seq-translation-tutorial-py Your current approach might work as well; you can try the different approaches and compare the results.

Third, you don’t have to initialize the hidden states to zeros manually; PyTorch's LSTM does that automatically when no initial state is passed.
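A quick way to check the point about hidden-state initialization is to run the same `nn.LSTM` once without an initial state and once with explicit zero tensors. The layer sizes below are arbitrary values chosen only for illustration; they are not taken from the architecture in the question.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Arbitrary illustrative sizes (assumptions, not from the original post).
seq_len, batch, input_size, hidden_size = 5, 3, 8, 16
lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size)
x = torch.randn(seq_len, batch, input_size)  # (seq_len, batch, input_size)

# Case 1: no initial state passed, so h_0 and c_0 default to zeros.
out_default, (h_default, c_default) = lstm(x)

# Case 2: explicit zeros of shape (num_layers * num_directions, batch, hidden_size).
h0 = torch.zeros(1, batch, hidden_size)
c0 = torch.zeros(1, batch, hidden_size)
out_explicit, (h_explicit, c_explicit) = lstm(x, (h0, c0))

# Both runs should give the same outputs and final states.
same = torch.allclose(out_default, out_explicit) and torch.allclose(h_default, h_explicit)
print(same)
```

Passing an explicit state is still useful when you want to carry the state over from a previous chunk of the sequence or from an encoder.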

Thank you very much for your help.

What do you mean by "it works for sure"? Do you mean for my specific problem, or that it works in general?

Generating an image step by step using an LSTM can be generalized to a sequence-generation problem, and an LSTM is a common choice for generating sequences.
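To make the "image as a sequence" idea concrete, here is a rough, untrained sketch that emits a single-channel image one row at a time, feeding each generated row back in as the next input. The class name `RowGenerator`, the row-per-step decomposition, the sigmoid output, and all sizes are assumptions made for illustration; this is not the architecture from the question.

```python
import torch
import torch.nn as nn


class RowGenerator(nn.Module):
    """Hypothetical row-by-row generator: each time step produces one image row."""

    def __init__(self, width=32, hidden_size=64):
        super().__init__()
        self.cell = nn.LSTMCell(width, hidden_size)
        self.to_row = nn.Linear(hidden_size, width)

    @torch.no_grad()
    def generate(self, first_row, num_rows):
        batch = first_row.size(0)
        # Start from zero hidden and cell states.
        h = first_row.new_zeros(batch, self.cell.hidden_size)
        c = first_row.new_zeros(batch, self.cell.hidden_size)
        row, rows = first_row, [first_row]
        for _ in range(num_rows - 1):
            h, c = self.cell(row, (h, c))          # advance the LSTM by one step
            row = torch.sigmoid(self.to_row(h))    # next row, pixel values in [0, 1]
            rows.append(row)
        return torch.stack(rows, dim=1)            # (batch, num_rows, width)


gen = RowGenerator()
image = gen.generate(torch.rand(4, 32), num_rows=32)
print(image.shape)
```

The model here is untrained, so the output is noise; the sketch only shows the shape of the generation loop. Training would typically compare each predicted row with the corresponding row of the target image.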

How would you configure your data if you had an input data set with dimensions (batch_size=256, channels=2, height=64, width=64), to match the LSTM input format (sequence_length, batch_size, input_size)?

You would first have to convert the image into a sequence; for example, flatten the image in some way (or flatten it using Unfold). Then reshape the tensor into the shape that matches the input format.
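Two possible ways to do that reshaping are sketched below, under some assumptions: option A treats each image row as one time step, and option B uses `nn.Unfold` to cut the image into non-overlapping 8x8 patches, with one patch per time step. The patch size, the hidden size, and the small demo batch (4 instead of 256) are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

# Small batch for the demo; the thread uses batch_size=256.
batch_size, channels, height, width = 4, 2, 64, 64
images = torch.randn(batch_size, channels, height, width)

# Option A: one image row per time step.
# (B, C, H, W) -> (H, B, C, W) -> (H, B, C*W) = (64, 4, 128)
rows = images.permute(2, 0, 1, 3).reshape(height, batch_size, channels * width)

# Option B: one 8x8 patch per time step via nn.Unfold.
unfold = nn.Unfold(kernel_size=8, stride=8)
patches = unfold(images)                            # (B, C*8*8, L) = (4, 128, 64)
patch_seq = patches.permute(2, 0, 1).contiguous()   # (L, B, C*8*8) = (64, 4, 128)

# Both sequences match the default nn.LSTM layout (seq_len, batch, input_size).
lstm = nn.LSTM(input_size=128, hidden_size=32)
out_rows, _ = lstm(rows)
out_patches, _ = lstm(patch_seq)
print(rows.shape, patch_seq.shape, out_rows.shape)
```

Which decomposition works better depends on the data. Rows keep horizontal structure together, while patches keep local 2D neighbourhoods together.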

I am also trying to generate new images from a sequence of images, using an LSTM to learn and predict future images. Please let me know if the LSTM worked for you or if you used something else.