Hi

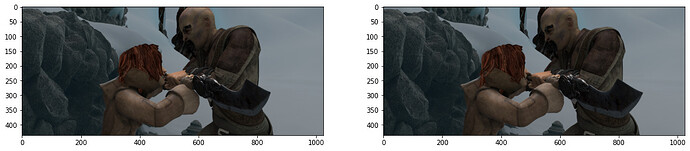

The code I’m using to warp the image is:

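```python
import torch

# image_B: (B, C, H, W) image tensor, flow_AB: (B, 2, H, W) flow in pixels
B, C, H, W = image_B.size()

# Pixel-coordinate grid: xx holds column indices, yy holds row indices
xx = torch.arange(0, W).view(1,-1).repeat(H,1)
yy = torch.arange(0, H).view(-1,1).repeat(1,W)
xx = xx.view(1,1,H,W).repeat(B,1,1,1)
yy = yy.view(1,1,H,W).repeat(B,1,1,1)
grid = torch.cat((xx,yy),1).float()

# Displace the grid by the flow, then scale both axes to [-1, 1]
vgrid = grid + flow_AB
vgrid[:,0,:,:] = 2.0*vgrid[:,0,:,:].clone() / max(W-1,1)-1.0
vgrid[:,1,:,:] = 2.0*vgrid[:,1,:,:].clone() / max(H-1,1)-1.0

# grid_sample expects the grid as (B, H, W, 2)
warped_image = torch.nn.functional.grid_sample(image_B, vgrid.permute(0,2,3,1), 'nearest')
```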
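Two things may be worth checking in the snippet above. Dividing by `W-1` and `H-1` corresponds to `align_corners=True`, whereas `grid_sample` has defaulted to `align_corners=False` since PyTorch 1.3. The grid is also built on the CPU regardless of where `flow_AB` lives. The following is only a minimal sketch of the same warp with both points made explicit. The function name `warp` and the bilinear default are assumptions for illustration, not part of the original code.

```python
import torch
import torch.nn.functional as F


def warp(image, flow, mode="bilinear"):
    """Backward-warp `image` (B, C, H, W) with `flow` (B, 2, H, W), in pixels.

    `warp` is a hypothetical helper name. flow[:, 0] is taken as the
    horizontal (x) displacement and flow[:, 1] as the vertical (y) one.
    """
    B, _, H, W = image.shape
    # Build the pixel grid on the same device and dtype as the flow.
    xx = torch.arange(W, device=flow.device, dtype=flow.dtype)
    yy = torch.arange(H, device=flow.device, dtype=flow.dtype)
    xx = xx.view(1, 1, 1, W).expand(B, 1, H, W)
    yy = yy.view(1, 1, H, 1).expand(B, 1, H, W)
    vgrid = torch.cat((xx, yy), dim=1) + flow

    # Normalise to [-1, 1]; dividing by (size - 1) matches align_corners=True.
    vx = 2.0 * vgrid[:, 0] / max(W - 1, 1) - 1.0
    vy = 2.0 * vgrid[:, 1] / max(H - 1, 1) - 1.0
    grid = torch.stack((vx, vy), dim=-1)  # shape (B, H, W, 2)

    return F.grid_sample(image, grid, mode=mode,
                         padding_mode="zeros", align_corners=True)
```

With a zero flow this should return the input unchanged, which is a quick sanity check before trying a real flow field. Note also that this is backward warping: each output pixel at `(x, y)` is read from `image` at `(x, y) + flow(x, y)`, so the flow has to be defined on the grid of the target frame.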

I’ve also tried computing the flow explicitly with OpenCV:

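```python
import cv2
import matplotlib.pyplot as plt

# Load both frames as grayscale for the flow estimation
img2 = cv2.imread('frame_0011.png',0)
img1 = cv2.imread('frame_0010.png',0)
# Dense Farneback flow with prev=img2 (frame 11) and next=img1 (frame 10)
flow = cv2.calcOpticalFlowFarneback(img2, img1, None, 0.5, 3, 15, 3, 5, 1.2, 0)
# Reload frame 11 in colour and remap it with the flow
image2 = cv2.imread('frame_0011.png',1)
plt.imshow(cv2.remap(image2, flow, None, cv2.INTER_LINEAR))
```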
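A likely culprit in the OpenCV attempt is that `cv2.remap` expects absolute source coordinates for every output pixel, while `calcOpticalFlowFarneback` returns relative displacements. Passing the flow directly makes every pixel sample from near the top-left corner. Below is a rough sketch of the conversion. The helper names `flow_to_maps` and `warp_with_flow` are made up for illustration.

```python
import numpy as np


def flow_to_maps(flow):
    """Turn a relative flow field (H, W, 2) into absolute maps for cv2.remap.

    Hypothetical helper: map_x[y, x] = x + flow[y, x, 0] and
    map_y[y, x] = y + flow[y, x, 1].
    """
    h, w = flow.shape[:2]
    grid_x, grid_y = np.meshgrid(np.arange(w, dtype=np.float32),
                                 np.arange(h, dtype=np.float32))
    map_x = grid_x + flow[..., 0].astype(np.float32)
    map_y = grid_y + flow[..., 1].astype(np.float32)
    return map_x, map_y


def warp_with_flow(image, flow):
    """Backward-warp `image` using a relative flow field (hypothetical helper)."""
    import cv2  # imported here so flow_to_maps stays NumPy-only

    map_x, map_y = flow_to_maps(flow)
    return cv2.remap(image, map_x, map_y, cv2.INTER_LINEAR)
```

Since `remap` computes `dst(x, y) = src(map_x(x, y), map_y(x, y))`, this is backward warping. Assuming the goal is to reconstruct frame 10 from frame 11, the flow would need to go from frame 10 to frame 11, i.e. `calcOpticalFlowFarneback(img1, img2, ...)`, before calling `warp_with_flow(image2, flow)`. The flow computed with `(img2, img1)` would instead suit warping frame 10 toward frame 11.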

But the result doesn’t hold up either:

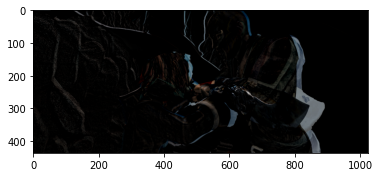

This is the difference between the warped result and the image I want to warp to.

I don’t get why it’s not working. Could anyone please help me?