Hi everyone, second post here!

I got this error

TypeError: linear(): argument 'input' (position 1) must be Tensor, not int

This is my code (it also runs pretty slowly):

import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
import numpy as np
from PIL import Image
import time

start = time.time()

# This gives us some transforms to apply later on
transform = transforms.Compose([transforms.Resize(255), transforms.CenterCrop(224), transforms.ToTensor(),])

training = torchvision.datasets.ImageFolder(root = "training_set", transform = transform)
print(training)

train_dataloader = DataLoader(dataset = training, shuffle = True, batch_size = 32)
print(train_dataloader)

print("Before NN creation",start-time.time())

# Creating the model
class NeuralNetwork (nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.Linear_Stack = nn.Sequential(
            nn.Linear(224*224, 40000),
            nn.ReLU(),
            nn.Linear(40000, 10000),
            nn.ReLU(),
            nn.Linear(10000, 75),
            nn.ReLU(),
            nn.Linear(75, 5),
            nn.ReLU(),
            nn.Linear(5, 1),
            nn.LogSoftmax(dim=1)
        )

    def forward (self, x):
        logits = self.Linear_Stack(x)
        return logits

model = NeuralNetwork()
print(model)

epochs = 2
lr = 0.001
loss = nn.CrossEntropyLoss()
optim = torch.optim.SGD(model.parameters(), lr = lr)
print(loss, optim)

#creating the main loop
def main(loader, model, loss, optim):
    size = len(loader.dataset)
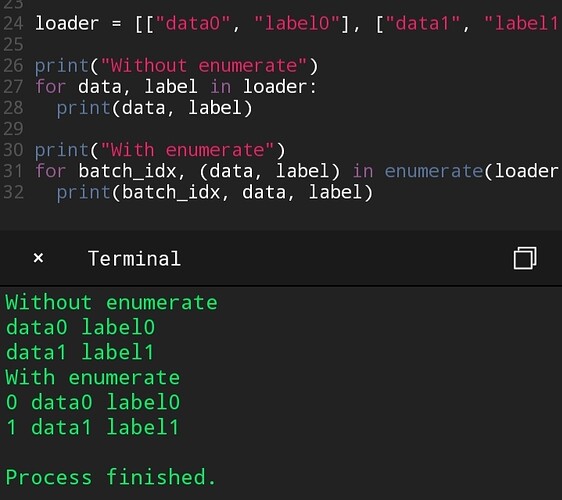

    for (images, labels) in enumerate(loader):
        # Prediction
        pred = model.forward(images)
        loss = loss(pred, target)

        #backprop
        optim.zero_grad()
        loss.backward()
        optim.step()
        print(loss)

for x in range(epochs):
    main(train_dataloader, model, loss, optim)

print(start-time.time())

I guess my main question is: am I using ImageFolder correctly? (I know it loads the data, because I get the correct number of datapoints when I print it.) Is my code even succinct? Also, I couldn't find anything on what the variable names for the outputs are (like X, y or Sample, Target). I think answering these questions will help me solve my problem. Please reply if you have anything else that you think will be helpful and I will take it on board.
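
On the variable names: ImageFolder returns each item as a `(sample, target)` tuple, and the names you unpack a batch into are up to you. The sketch below is a rough illustration of why the loop above likely hands an `int` to the model. It uses a plain Python list as a stand-in for the DataLoader (an assumption for illustration, so it runs without any image data), and the function names are hypothetical. `enumerate` yields `(index, batch)` pairs, so unpacking them as `(images, labels)` puts the batch index into `images`.

```python
# Stand-in for a DataLoader: a plain list of (images, labels) batches.
# Real batches would be tensors; strings are used here purely for illustration.
fake_loader = [
    ("images_batch_0", "labels_batch_0"),
    ("images_batch_1", "labels_batch_1"),
]


def unpack_like_the_post(loader):
    """Mirror the loop in the post: the pattern receives (index, batch)."""
    seen = []
    for (images, labels) in enumerate(loader):
        # `images` is the batch index (an int); `labels` is the whole batch tuple.
        seen.append(images)
    return seen


def unpack_directly(loader):
    """Iterate the loader itself so each item unpacks into (images, labels)."""
    seen = []
    for images, labels in loader:
        seen.append(images)
    return seen


def unpack_with_index(loader):
    """Keep the index too by nesting the tuple pattern."""
    seen = []
    for batch_idx, (images, labels) in enumerate(loader):
        seen.append((batch_idx, images))
    return seen


print(unpack_like_the_post(fake_loader))
print(unpack_directly(fake_loader))
print(unpack_with_index(fake_loader))
```

With a real DataLoader the same pattern would apply. The `target` name used in the loss call would then need to match whatever name the labels are unpacked into.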