I’m training a language model using the code from the pytorch/examples GitHub repository: `examples/word_language_model/main.py` (main branch).

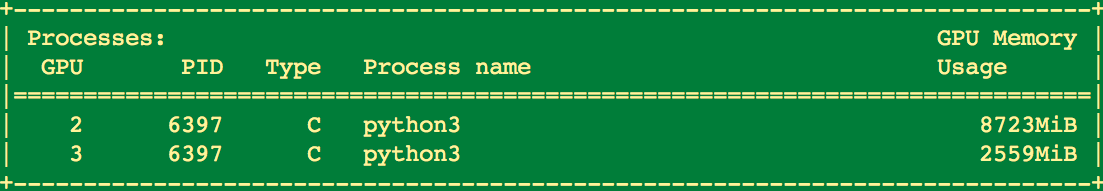

I made a few small changes so that the model can be trained across multiple GPUs. However, memory usage across the GPUs is extremely imbalanced.

I understand that one GPU gathers and stores all the outputs. Is there any way to balance the memory usage? Alternatively, can I dedicate one GPU to gathering the outputs and use the rest for training on batches?
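One commonly suggested way to shrink the imbalance with `nn.DataParallel` is to compute the loss inside the wrapped module. That way each replica returns a single scalar loss, and the gathering GPU no longer receives the full `(batch, seq_len, vocab)` logits from every replica. The sketch below is illustrative, not the example's actual code. `TinyLM`, `ModelWithLoss`, `NTOKEN` and the sizes are hypothetical. It also assumes batch-first inputs, whereas the original example feeds sequence-first tensors. With that layout you would pass `dim=1` to `DataParallel` or transpose the batches.

```python
import torch
import torch.nn as nn

NTOKEN = 100  # hypothetical vocabulary size


class TinyLM(nn.Module):
    """Hypothetical stand-in for the example's RNN model (batch-first, unlike the original)."""

    def __init__(self, ntoken=NTOKEN, ninp=32, nhid=32):
        super().__init__()
        self.encoder = nn.Embedding(ntoken, ninp)
        self.rnn = nn.LSTM(ninp, nhid, batch_first=True)
        self.decoder = nn.Linear(nhid, ntoken)

    def forward(self, tokens):
        # tokens: (batch, seq_len) -> logits: (batch, seq_len, ntoken)
        out, _ = self.rnn(self.encoder(tokens))
        return self.decoder(out)


class ModelWithLoss(nn.Module):
    """Hypothetical wrapper: runs the model AND the criterion inside each replica."""

    def __init__(self, model):
        super().__init__()
        self.model = model
        self.criterion = nn.CrossEntropyLoss()

    def forward(self, tokens, targets):
        logits = self.model(tokens)
        loss = self.criterion(logits.reshape(-1, logits.size(-1)), targets.reshape(-1))
        # Return shape (1,) so DataParallel can concatenate one loss per replica
        return loss.unsqueeze(0)


torch.manual_seed(0)
net = ModelWithLoss(TinyLM())
if torch.cuda.is_available():
    net = net.cuda()  # parameters must live on device_ids[0] (cuda:0 by default)
# Without a GPU, DataParallel simply calls the wrapped module
dp = nn.DataParallel(net)
optimizer = torch.optim.SGD(dp.parameters(), lr=0.1)

device = next(dp.parameters()).device
tokens = torch.randint(0, NTOKEN, (8, 12), device=device)   # (batch, seq_len)
targets = torch.randint(0, NTOKEN, (8, 12), device=device)

losses = dp(tokens, targets)  # only one scalar per replica is gathered, not the logits
loss = losses.mean()          # exact batch average only if replicas got equal-sized chunks
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(losses.shape, loss.item())
```

On the second question, `nn.DataParallel` also accepts an `output_device` argument (default `device_ids[0]`) that selects the GPU where outputs are gathered. However, the master copy of the parameters and the reduced gradients still live on `device_ids[0]`, so some imbalance is likely to remain. `DistributedDataParallel`, which runs one process per GPU and has no central gather step, avoids this by design.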

Thanks,

Yuzhou