C++ Optimizer header link


Hi PyTorch team,

I really want to train NFNet models (the Normalization-Free series, plus my own custom model) in C++, but the problem is that libtorch has no official AGC (Adaptive Gradient Clipping) optimizer.

Reference paper: [2102.06171] High-Performance Large-Scale Image Recognition Without Normalization

Reference PyTorch code: DeepLearningStudy/torch/util/nf_helper.py at main · gellston/DeepLearningStudy · GitHub (the target is the AGC Python class)

That is why I put together a list of questions about implementing AGC.

(FYI: I am a C++ developer, and I know the basic C++ containers such as std::vector and std::map.)

Is there a simple tutorial or example code for inheriting from an optimizer class in libtorch C++?

-

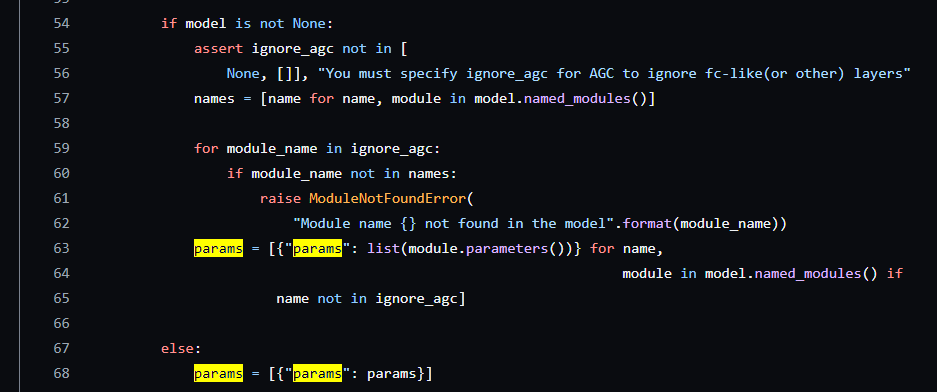

how to unpack params of optimizer argument and pack again in C++? (for skipping specific parameter)

(DeepLearningStudy/torch/util/nf_helper.py at e51b11565e924becc39355bf712c398902bc323a · gellston/DeepLearningStudy · GitHub)

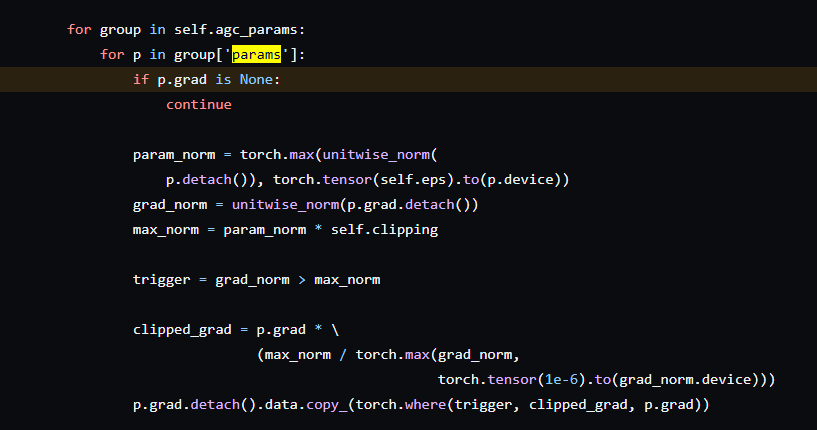

how can i get p, p.grad in libtorch

(DeepLearningStudy/torch/util/nf_helper.py at e51b11565e924becc39355bf712c398902bc323a · gellston/DeepLearningStudy · GitHub)

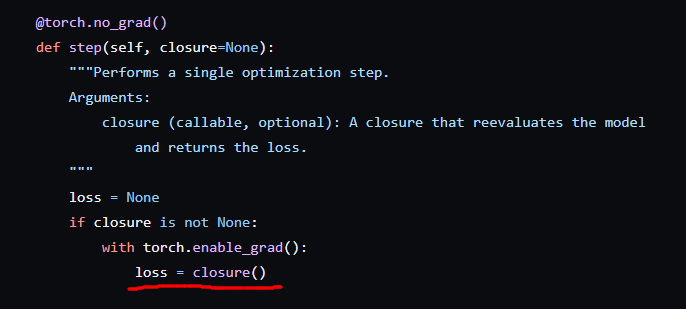

how to calculate total model loss in step function C++ (like closure)

(DeepLearningStudy/torch/util/nf_helper.py at e51b11565e924becc39355bf712c398902bc323a · gellston/DeepLearningStudy · GitHub)

If there is an easier way to implement AGC (without inheriting from an optimizer), please share it.