def encoder():

enc = keras.Sequential()

enc.add(Input(shape=(maxlen,), name='Encoder-Input'))

enc.add(Embedding(num_words, embed_dim,input_length = maxlen, name='Body-Word-Embedding', mask_zero=False))

enc.add(Bidirectional(LSTM(128, activation='relu', name='Encoder-Last-LSTM')))

return enc

Here I get output of shape Batch x 256

In pytorch:

class EncoderRNN(nn.Module):

def __init__(self):

super(EncoderRNN, self).__init__()

self.embedding = nn.Embedding(vocab_size, embed_dim)

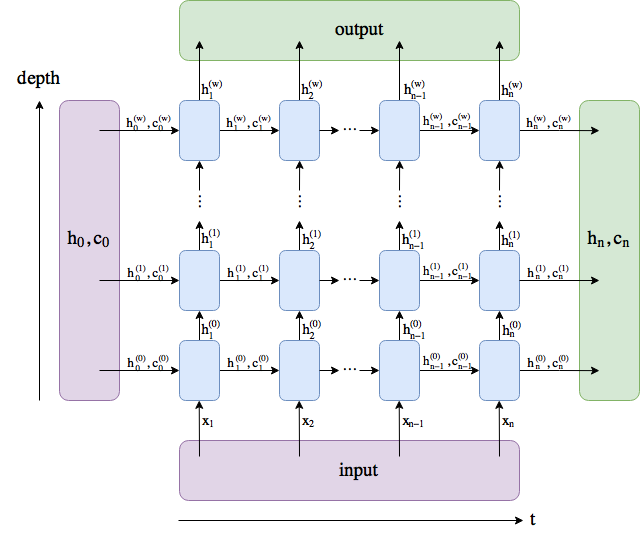

self.lstm = nn.LSTM(embed_dim, embed_dim, 2, bidirectional=True, batch_first=False)

self.relu = nn.ReLU()

def forward(self, input_, hidden, c):

embedded = self.embedding(input_).transpose(0, 1)

output, (hn, cn) = self.lstm(embedded, (hidden, c))

output = self.relu(output)

return out, hn, cn

def initHidden(self):

return torch.zeros(2*2, 16, 128, device=device), torch.zeros(2*2, 16, 128, device=device)

But here I get output of shape: sequence length x batch x 256 and I want to get batch x 256

How to implement the exact code of tensorflow to pytorch?