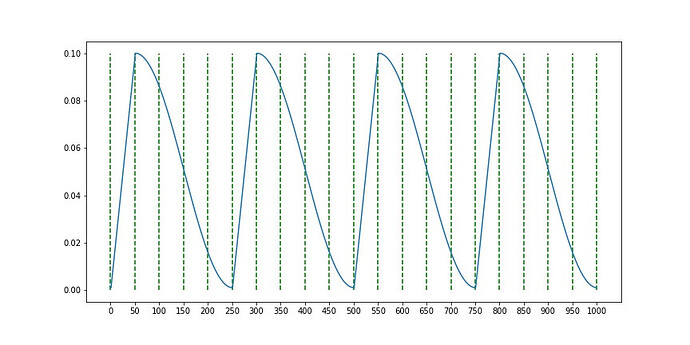

Hi there, I am wondering whether PyTorch supports cosine annealing LR with warmup, meaning that the learning rate increases over the first few epochs and then decays following a cosine annealing schedule. The attached image shows how the learning rate should change.

I only found Cosine Annealing and Cosine Annealing with Warm Restarts in PyTorch, but neither serves my purpose, since I want a relatively small lr at the start.

I would be grateful for any advice or instructions.

Ignite has these:

create_lr_scheduler_with_warmup

ConcatScheduler
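
If you would rather stay in plain PyTorch, one possible approach is to compute the warmup-plus-cosine multiplier yourself and hand it to `torch.optim.lr_scheduler.LambdaLR`. The sketch below is only an illustration under some assumptions: the helper names `warmup_cosine_factor` and `make_scheduler` are made up for this example, the warmup is linear, and `min_factor` (the floor of the decay, as a fraction of the base lr) is a parameter I introduced.

```python
import math


def warmup_cosine_factor(step, warmup_steps, total_steps, min_factor=0.0):
    """Multiplier for the base lr at `step` (hypothetical helper, not a PyTorch API)."""
    if step < warmup_steps:
        # Linear warmup: ramps from 1/warmup_steps up to 1.0 (assumed shape).
        return (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    # Cosine decay from 1.0 down to min_factor.
    return min_factor + 0.5 * (1.0 - min_factor) * (1.0 + math.cos(math.pi * progress))


def make_scheduler(optimizer, warmup_steps, total_steps, min_factor=0.0):
    """Wrap the multiplier in LambdaLR (hypothetical convenience function)."""
    # Imported here so the schedule function above has no torch dependency.
    from torch.optim.lr_scheduler import LambdaLR

    return LambdaLR(
        optimizer,
        lr_lambda=lambda step: warmup_cosine_factor(
            step, warmup_steps, total_steps, min_factor
        ),
    )
```

Here `step` counts calls to `scheduler.step()`, so `warmup_steps` and `total_steps` are in epochs if you step once per epoch, or in iterations if you step once per batch. The lr you give the optimizer is the peak value reached at the end of warmup.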