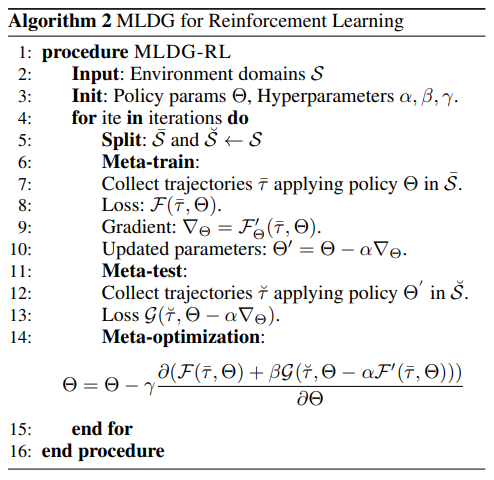

I’m trying to implement the following algorithm ([1710.03463] Learning to Generalize: Meta-Learning for Domain Generalization)

The best approach I was able to come up with was the following pseudocode:

STEP:

# 1. Copy the train model

model_meta_test.load_state_dict(model_train.state_dict())

optimizer_meta_test.load_state_dict(optimizer_train.state_dict())

# Caluclate loss on the meta test model (A)

loss_meta_test = self.get_loss(model_meta_test)

# Optimize the meta test model

self.optimize_network(loss_meta_test, model_meta_test, optimizer_meta_test)

# Now I'm at ϴ' which means I can calculate the loss of my META TEST

loss_meta_test = self.get_loss(model_meta_test)

# Now I need to recalculate the loss on the Train Model (B).

# Note that the loss at (A) and (B) should be the same.

loss_train = self.get_loss(model_train)

# Now I calculate the sum of both losses.

tot_loss_train = loss_train + self.BETA * loss_meta_test.detach()

# Finally Optimize the Train Model

self.optimize_network(tot_loss_train, model_train, optimizer_train)

I have a few doubts.

- Is the implementation is correct.

- What would be a more efficient way of doing it? This is an overkill of calculations as I have to backup models and calculate the same loss twice for different models. With my limited knowledge, this was the best I could come up with. If anyone can enlighten me, I would greatly appreciate it.