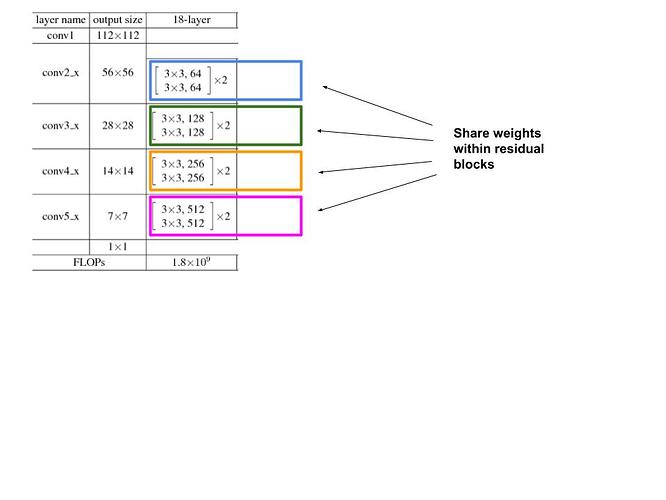

I’m trying to implement a ResNet with shared weights within each residual block. In the forward function of each block, I call the same conv2d layer twice. However, I run into a problem when the number of filters changes between blocks: the conv2d that takes 64 input channels outputs 128, so it cannot be applied a second time in the forward function. What’s another way to implement shared convolution weights within the residual blocks?

What do you mean?

If you have 64 filters, how do you plan to share 128? Could you provide more details?

So, the weights are shared only within conv2_x, conv3_x, or conv4_x: the two conv layers in the same residual block would share weights, similar to what’s done in this paper or [this paper](https://arxiv.org/pdf/1604.03640.pdf).

Basically, to share weights you have to call the same module instance as many times as you want.

I think you are aware of that.
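A minimal sketch of the "call the same instance twice" approach, assuming a basic block with two 3x3 convs. The class name `SharedBasicBlock` and the unshared 1x1 `transition` conv are hypothetical: because a 64→128 conv cannot be applied twice, this sketch changes the channel count and stride once with a separate projection, and then calls a single 128→128 conv twice. Keeping two separate BatchNorm layers is also an assumption.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SharedBasicBlock(nn.Module):
    """Basic residual block whose two 3x3 convs share one weight tensor.

    Sharing only works for a C->C conv, so a hypothetical unshared 1x1
    `transition` conv handles any change in channels or stride first.
    """

    def __init__(self, in_channels: int, out_channels: int, stride: int = 1):
        super().__init__()
        if in_channels != out_channels or stride != 1:
            # Unshared projection: changes channels/resolution once per stage.
            self.transition = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )
        else:
            self.transition = nn.Identity()
        # One conv instance, called twice in forward -> shared weights.
        self.conv = nn.Conv2d(out_channels, out_channels, 3, padding=1, bias=False)
        # Separate BatchNorm layers for the two calls (assumption).
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        x = self.transition(x)
        identity = x
        out = F.relu(self.bn1(self.conv(x)))
        out = self.bn2(self.conv(out))  # same self.conv -> same weights
        return F.relu(out + identity)


if __name__ == "__main__":
    block = SharedBasicBlock(64, 128, stride=2)
    y = block(torch.randn(2, 64, 32, 32))
    print(y.shape)  # torch.Size([2, 128, 16, 16])
```

Here the residual connection wraps only the shared pair (the identity is taken after the transition), which differs from the projection shortcut in the standard ResNet; it is one possible arrangement, not necessarily the layout used in the linked paper.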

If you want to share only part of the weights, you can define them as `nn.Parameter`s and call the functional convolution (`torch.nn.functional.conv2d`) directly; that way you can concatenate weights or be as flexible as you want.
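One way to read the functional suggestion, as a sketch: keep the filters in `nn.Parameter` tensors and build each convolution's weight with `torch.cat` before calling `F.conv2d`. The split used here (a 64→128 conv that reuses all 64 filters of a 64→64 conv and adds 64 of its own) and the class and method names are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PartiallySharedConvs(nn.Module):
    """A narrow (64->64) and a wide (64->128) 3x3 conv sharing some filters.

    Hypothetical layout: the wide conv = shared filters + its own extra filters.
    """

    def __init__(self, in_ch: int = 64, shared_out: int = 64,
                 extra_out: int = 64, k: int = 3):
        super().__init__()
        # Filters used by both convolutions, shape (out, in, kH, kW).
        self.shared_w = nn.Parameter(torch.empty(shared_out, in_ch, k, k))
        # Filters used only by the wide convolution.
        self.extra_w = nn.Parameter(torch.empty(extra_out, in_ch, k, k))
        nn.init.kaiming_normal_(self.shared_w, nonlinearity="relu")
        nn.init.kaiming_normal_(self.extra_w, nonlinearity="relu")
        self.padding = k // 2

    def narrow_conv(self, x):
        # in_ch -> shared_out, using only the shared filters.
        return F.conv2d(x, self.shared_w, padding=self.padding)

    def wide_conv(self, x):
        # in_ch -> shared_out + extra_out: concatenate along the output-channel dim.
        w = torch.cat([self.shared_w, self.extra_w], dim=0)
        return F.conv2d(x, w, padding=self.padding)
```

Because `torch.cat` is differentiable, gradients from both convolutions accumulate in `shared_w`.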

If you change the number of filters, you indeed have to decide manually which weights are shared.
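As an illustration of one such manual choice (an assumption, not the only option): a single 128→128 weight tensor whose first 64 input-channel slices are also used by the 64→128 conv at the start of a stage. `SlicedSharedConv` and the slicing rule are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SlicedSharedConv(nn.Module):
    """One 3x3 weight reused by a 64->128 conv and a 128->128 conv.

    Hypothetical rule: a 64-channel input uses only the first 64
    input-channel slices of the full (128, 128, k, k) weight.
    """

    def __init__(self, in_small: int = 64, in_large: int = 128,
                 out_ch: int = 128, k: int = 3):
        super().__init__()
        assert in_small <= in_large
        self.in_small = in_small
        self.weight = nn.Parameter(torch.empty(out_ch, in_large, k, k))
        nn.init.kaiming_normal_(self.weight, nonlinearity="relu")
        self.padding = k // 2

    def forward(self, x):
        c = x.shape[1]
        if c == self.weight.shape[1]:
            w = self.weight  # full weight: in_large -> out_ch
        elif c == self.in_small:
            # Slice of the same parameter: in_small -> out_ch.
            # .contiguous() makes a copy for the kernel; gradients still reach self.weight.
            w = self.weight[:, :c].contiguous()
        else:
            raise ValueError(f"unsupported number of input channels: {c}")
        return F.conv2d(x, w, padding=self.padding)
```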