Hello,

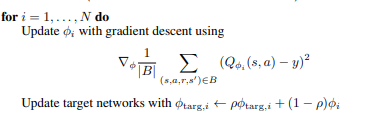

I'm training an ensemble of networks and wanted to know whether the code I wrote can be improved in terms of speed. It follows this scheme:

I have an ensemble of N (= 10) networks with N target networks.

Calculating the loss is quite easy, but then I have to do the backward pass. Currently my update loop looks like this:

    # Compute critic losses and update critics
    for critic, optim, target in zip(self.critics, self.optims, self.target_critics):
        Q = critic(states, actions).cpu()
        Q_loss = F.mse_loss(Q, Q_targets)

        # Update critic
        optim.zero_grad()
        Q_loss.backward()
        optim.step()

        # soft update of the targets
        self.soft_update(critic, target)

I'm especially concerned about needing 10 separate optimizers. Is there an easier way to do it?
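For reference, here is a minimal sketch of the single-optimizer variant I have in mind. It is not my actual code: the `Critic` class, the dimensions, and the random tensors are hypothetical stand-ins, and the soft update of the targets is left out. The idea is that the critics share no parameters, so summing their losses and calling `backward()` once should give each critic the same gradients it would get from its own loss, and one optimizer over all parameters can then apply the step.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Critic(nn.Module):
    """Hypothetical stand-in for one critic of the ensemble."""

    def __init__(self, state_dim, action_dim, hidden=32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(state_dim + action_dim, hidden),
            nn.ReLU(),
            nn.Linear(hidden, 1),
        )

    def forward(self, states, actions):
        return self.net(torch.cat([states, actions], dim=-1))


torch.manual_seed(0)
n_critics, state_dim, action_dim, batch = 10, 4, 2, 16  # assumed sizes

critics = nn.ModuleList([Critic(state_dim, action_dim) for _ in range(n_critics)])

# One optimizer holding the parameters of every ensemble member.
optim = torch.optim.Adam(critics.parameters(), lr=1e-3)

# Random placeholder data standing in for a sampled batch.
states = torch.randn(batch, state_dim)
actions = torch.randn(batch, action_dim)
Q_targets = torch.randn(batch, 1)

# Sum the per-critic losses; the critics are independent, so the gradient
# of the sum with respect to one critic is just that critic's own gradient.
loss = sum(F.mse_loss(c(states, actions), Q_targets) for c in critics)

optim.zero_grad()
loss.backward()  # a single backward pass for the whole ensemble
optim.step()     # a single optimizer step for the whole ensemble
```

This still runs the 10 forward passes one after another, so I am not sure how much speed it buys; it mainly replaces the 10 optimizers and 10 backward calls with one of each.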

Thanks a lot!