How do I improve validation accuracy? I am using a Conv3d network, and each input has shape (1, 1, 20, 256, 256) with batch_size = 1.

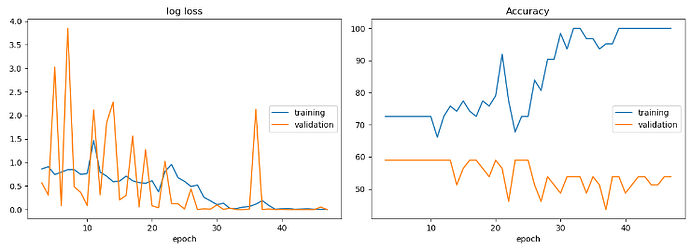

I am getting the following result:

I tried adding dropout layers, but they don't help much in this case. Could this be caused by having less data in the validation set? The dataset is also unbalanced.
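Both concerns can be put into numbers with a few lines of plain Python. This is a minimal sketch, not a fix: `accuracy_std_error` gives the binomial standard error of an accuracy measured on `n` validation samples (a rough gauge of how much the validation curve can move from sampling noise alone), and `inverse_frequency_weights` computes per-class weights of the kind that can be passed to `nn.CrossEntropyLoss(weight=...)` to counter class imbalance. The function names and all example numbers are hypothetical.

```python
import math


def accuracy_std_error(accuracy, n):
    """Binomial standard error of an accuracy (0..1) measured on n samples.

    Assumes each validation sample is an independent Bernoulli trial.
    """
    return math.sqrt(accuracy * (1 - accuracy) / n)


def inverse_frequency_weights(class_counts):
    """Weight class i by total / (num_classes * count_i).

    Rare classes get weights above 1, common classes below 1, and the
    weighted counts still sum to the total number of samples.
    """
    total = sum(class_counts)
    num_classes = len(class_counts)
    return [total / (num_classes * count) for count in class_counts]
```

For instance, an accuracy of 0.7 on 50 validation samples has a standard error of about 0.065, so swings of several percentage points between epochs are expected even without overfitting. With hypothetical class counts `[100, 50, 10]`, the weights come out at roughly `[0.53, 1.07, 5.33]`; they could be used as `nn.CrossEntropyLoss(weight=torch.tensor(weights, device='cuda:0'))`.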

Code:

```python
import torch.nn as nn


class CNNModel(nn.Module):
    def __init__(self):
        super(CNNModel, self).__init__()

        # Convolution 1
        self.cnn1 = nn.Conv3d(in_channels=1, out_channels=20, kernel_size=5, stride=1, padding=2)
        self.relu1 = nn.ReLU()
        self.dropout1 = nn.Dropout(p=0.1)
        # Max pool 1
        self.maxpool1 = nn.MaxPool3d(kernel_size=2)

        # Convolution 2
        self.cnn2 = nn.Conv3d(in_channels=20, out_channels=40, kernel_size=5, stride=1, padding=2)
        self.relu2 = nn.ReLU()
        self.dropout2 = nn.Dropout(p=0.1)
        # Max pool 2
        self.maxpool2 = nn.MaxPool3d(kernel_size=2)

        # Convolution 3
        self.cnn3 = nn.Conv3d(in_channels=40, out_channels=60, kernel_size=5, stride=1, padding=2)
        self.relu3 = nn.ReLU()
        self.dropout3 = nn.Dropout(p=0.2)
        # Max pool 3
        self.maxpool3 = nn.MaxPool3d(kernel_size=2)

        # Dropout for regularization before the classifier
        self.dropout5 = nn.Dropout(p=0.5)

        # Fully connected 1: 60 * 2 * 32 * 32 = 122880 features for a
        # (1, 1, 20, 256, 256) input, mapped to 3 classes
        self.fc1 = nn.Linear(122880, 3)

    def forward(self, x):
        # Convolution 1 + max pool 1
        out = self.dropout1(self.relu1(self.cnn1(x)))
        out = self.maxpool1(out)

        # Convolution 2 + max pool 2
        out = self.dropout2(self.relu2(self.cnn2(out)))
        out = self.maxpool2(out)

        # Convolution 3 + max pool 3
        out = self.dropout3(self.relu3(self.cnn3(out)))
        out = self.maxpool3(out)

        # Flatten to (batch_size, features)
        out = out.view(out.size(0), -1)

        # Dropout, then fully connected 1 (raw logits, no softmax)
        out = self.dropout5(out)
        out = self.fc1(out)
        return out
```
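The input size of `fc1` can be checked by hand. Each convolution (kernel 5, stride 1, padding 2) keeps depth, height and width unchanged, and each `MaxPool3d(kernel_size=2)` halves them, rounding down. The sketch below (hypothetical helper names `conv_out` and `flattened_size`) applies the standard output-size formula with `dilation=1`: a 20 × 256 × 256 volume ends up as 60 × 2 × 32 × 32 = 122880 features, whereas 256 × 265 slices would give 126720, so `nn.Linear(122880, 3)` corresponds to 256 × 256 slices.

```python
def conv_out(n, kernel, stride=1, padding=0):
    # Output-size formula shared by nn.Conv3d and nn.MaxPool3d (dilation=1, floor mode)
    return (n + 2 * padding - kernel) // stride + 1


def flattened_size(depth, height, width, channels=60, stages=3):
    dims = [depth, height, width]
    for _ in range(stages):
        # Conv3d(kernel_size=5, stride=1, padding=2) keeps each dimension unchanged
        dims = [conv_out(d, 5, stride=1, padding=2) for d in dims]
        # MaxPool3d(kernel_size=2): stride defaults to kernel_size, odd sizes round down
        dims = [conv_out(d, 2, stride=2) for d in dims]
    d, h, w = dims
    return channels * d * h * w
```

Running the model once on a dummy tensor and printing `out.size()` before `fc1` gives the same check empirically; the arithmetic version just makes the halving explicit.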

```python
import torch
import torch.nn as nn
from livelossplot import PlotLosses

# learning_rate, num_epochs, train_loader and test_loader are defined elsewhere in the script
device = torch.device('cuda:0')

model = CNNModel()
liveloss = PlotLosses()
model.to(device)

# CrossEntropyLoss applies log-softmax internally, so the model outputs raw logits
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

for epoch in range(num_epochs):
    logs = {}
    total_correct = 0
    total_loss = 0.0
    total_images = 0
    total_val_loss = 0.0

    model.train()  # enables dropout
    for i, (data, target) in enumerate(train_loader):
        # torch.autograd.Variable is deprecated; tensors are used directly
        images = data.to(device)
        labels = target.to(device)

        optimizer.zero_grad()
        # Forward propagation
        outputs = model(images)
        # Cross-entropy loss (log-softmax + negative log-likelihood)
        loss = criterion(outputs, labels)
        # Backward propagation
        loss.backward()
        # Update the weights
        optimizer.step()

        # Total number of labels seen so far
        total_images += labels.size(0)
        # Predicted class = index of the largest logit
        predicted = outputs.argmax(dim=1)
        # Number of correct predictions in this batch
        total_correct += (predicted == labels).sum().item()
        # loss is the batch mean, so weight it by the batch size
        total_loss += loss.item() * labels.size(0)

        print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}, Accuracy: {:.2f}%'
              .format(epoch + 1, num_epochs, i + 1, len(train_loader),
                      total_loss / total_images,
                      total_correct / total_images * 100))

    logs['log loss'] = total_loss / total_images
    logs['Accuracy'] = total_correct / total_images * 100
```
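The loop above only covers training; `total_val_loss` is never filled in. A validation pass should run with `model.eval()`, which switches dropout off, and inside `torch.no_grad()`. Otherwise the dropout layers keep dropping activations during evaluation and the reported validation accuracy is pessimistic and noisy. Below is a minimal sketch of such a pass; the function name `evaluate` is hypothetical, and `loader` can be any iterable of `(data, target)` batches, such as a `DataLoader`.

```python
import torch
import torch.nn as nn


def evaluate(model, loader, criterion, device="cpu"):
    """Return (mean loss per sample, accuracy in %) over all batches in loader."""
    model.eval()  # disables dropout for the whole pass
    total_loss, total_correct, total_images = 0.0, 0, 0
    with torch.no_grad():  # no autograd graph is built, saving memory
        for data, target in loader:
            data, target = data.to(device), target.to(device)
            outputs = model(data)
            # criterion returns the batch mean, so scale it back to a per-batch sum
            total_loss += criterion(outputs, target).item() * target.size(0)
            total_correct += (outputs.argmax(dim=1) == target).sum().item()
            total_images += target.size(0)
    return total_loss / total_images, 100.0 * total_correct / total_images
```

It could be called once per epoch after the training loop, e.g. `val_loss, val_acc = evaluate(model, test_loader, criterion, device)` if `test_loader` holds the held-out data, and the results stored in `logs` next to the training metrics. Since the original loop calls `model.train()` at the start of every epoch, dropout is switched back on automatically for the next epoch.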