With torch.compile, in both the default and max-autotune modes, our results differ across machines that have the same GPU and the same environment. With torch.compile disabled, the results match exactly. How can I troubleshoot this? Any help would be greatly appreciated.

How large are these differences and is one of the compiled outputs matching PyTorch eager mode?

Also, do you see these issues in the latest release?

> How large are these differences and is one of the compiled outputs matching PyTorch eager mode?

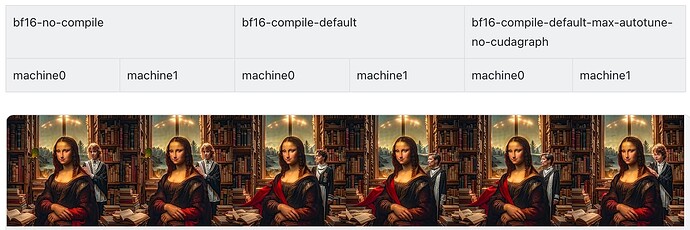

We are using a DiT model. With BF16 and compile disabled, different machines produce identical images. With compile enabled, the results are no longer reproducible: even with the same random seed and an identical environment, we cannot obtain exactly the same images across machines.
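To put a number on "how large are these differences", a small helper along these lines could be run on the saved outputs from each machine. This is only a sketch: `diff_report` is a hypothetical name, and it assumes both outputs are single tensors of the same shape (for example the final latents or decoded image).

```python
import torch


def diff_report(reference: torch.Tensor, candidate: torch.Tensor) -> dict:
    """Summarize how far `candidate` is from `reference`."""
    ref_raw = reference.detach().cpu()
    cand_raw = candidate.detach().cpu()
    # Upcast to float32 so the subtraction itself does not add BF16 rounding.
    ref = ref_raw.float()
    cand = cand_raw.float()
    abs_diff = (ref - cand).abs()
    # Clamp the denominator so near-zero reference values do not blow up.
    rel_diff = abs_diff / ref.abs().clamp_min(1e-6)
    return {
        "bitwise_equal": torch.equal(ref_raw, cand_raw),
        "max_abs_diff": abs_diff.max().item(),
        "mean_abs_diff": abs_diff.mean().item(),
        "max_rel_diff": rel_diff.max().item(),
    }
```

Comparing eager against compiled on one machine, and compiled against compiled across machines, would show whether either compiled output matches eager mode and whether the gap is at the level of BF16 rounding or larger.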

> Also, do you see these issues in the latest release?

Yes. We followed the related issues and applied the setting they recommend, shown below, but we still cannot get reproducible results with compile enabled.

torch._inductor.config.fallback_random = True
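For context, here is a rough sketch of how that flag is usually combined with seeding. As far as I understand it, `fallback_random` only makes compiled random ops fall back to the eager RNG, so it aligns random numbers but does not by itself make the generated kernels numerically identical. The function names `noisy` and `eager_vs_compiled_rng` are hypothetical.

```python
import torch
import torch._inductor.config as inductor_config

# Route random ops in compiled code through the eager RNG implementation.
inductor_config.fallback_random = True


def noisy(x: torch.Tensor) -> torch.Tensor:
    # Stand-in for a model step that draws random noise.
    return x + torch.randn_like(x)


def eager_vs_compiled_rng(x: torch.Tensor, seed: int = 0) -> bool:
    """Return True if eager and compiled runs agree for the same seed."""
    torch.manual_seed(seed)
    eager_out = noisy(x)
    compiled_noisy = torch.compile(noisy)
    torch.manual_seed(seed)  # reseed right before the compiled call
    compiled_out = compiled_noisy(x)
    return torch.equal(eager_out, compiled_out)
```

If a check like this passes but the full model still differs across machines, the remaining difference is probably not coming from the RNG.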

Here is our software and hardware information:

torch version: 2.6.0.dev20241104+cu124

torch cuda version: 12.4

torch git version: 76c297ad6b180015c5955e61357f43ab92d110ea

CUDA Info:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Thu_Jun__6_02:18:23_PDT_2024

Cuda compilation tools, release 12.5, V12.5.82

Build cuda_12.5.r12.5/compiler.34385749_0

GPU Hardware Info:

NVIDIA L20 : 2

> How can I troubleshoot this issue? Any help would be greatly appreciated.

The Troubleshooting Guide might be a good start. It should help you find the smallest subgraph that reproduces the accuracy difference between eager mode and the compiled model.
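Before minimizing the graph, one coarse first step is to compile the same model with different backends and see at which stage the output starts to deviate from eager. The sketch below assumes the model returns a single tensor; `compare_backends` is a hypothetical helper, while `"eager"`, `"aot_eager"`, and `"inductor"` are existing `torch.compile` backend names.

```python
import torch
import torch._dynamo


def compare_backends(model, example_input,
                     backends=("eager", "aot_eager", "inductor")):
    """Max absolute difference versus plain eager mode, per backend."""
    with torch.no_grad():
        reference = model(example_input).float()
    results = {}
    for backend in backends:
        # Drop cached compiled graphs so each backend starts fresh.
        torch._dynamo.reset()
        compiled = torch.compile(model, backend=backend)
        with torch.no_grad():
            output = compiled(example_input).float()
        results[backend] = (output - reference).abs().max().item()
    return results
```

If `"eager"` and `"aot_eager"` match but `"inductor"` does not, the difference most likely comes from the generated kernels, which is the part that max-autotune may select differently on each machine.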