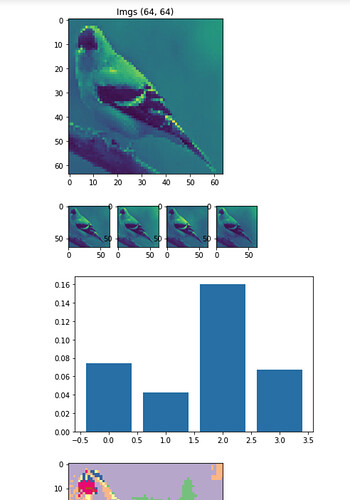

I relaunched my project after a year and got the error shown below. I used images with IMG_SIZE = [64, 64].

What I use:

- Python 3.8.2
- Torch==1.7.1
- TorchVision==0.8.2

OS:

- macOS 11.2.3

I load the images for classification like this:

    import os

    import cv2
    import skimage.color

    imgs = []
    directory = 'photo'
    files = os.listdir(directory)

    # Each subdirectory of `photo` holds the images of one class
    for x in files:
        files_dir = directory + '/' + x
        imgs_dir = os.listdir(files_dir)
        for imgsX in imgs_dir:
            img_dir = files_dir + '/' + imgsX
            img = cv2.imread(img_dir)
            img = cv2.resize(img, (64, 64))
            img = skimage.color.rgb2gray(img)
            imgs.append(img)

Image size: (64, 64)
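
The training loop further down iterates over `dataset`, which is not defined in the snippets shown. Here is a minimal sketch of one way it could be built, assuming `imgs` is a list of 64x64 grayscale arrays and `dataset` is a `DataLoader` over a `TensorDataset`. Both are assumptions; random arrays stand in for the real photos, and the batch size of 4 is arbitrary.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in for the `imgs` list: ten random 64x64 grayscale images (assumption).
rng = np.random.default_rng(0)
imgs = [rng.random((64, 64)) for _ in range(10)]

# Stack into one (N, 64, 64) tensor and cast to float32,
# the dtype of the SOM weights created with torch.randn.
data = torch.from_numpy(np.stack(imgs)).float()

# A TensorDataset holding a single tensor yields one-element batches,
# which matches the `for (X, ) in dataset:` unpacking in the training loop.
dataset = DataLoader(TensorDataset(data), batch_size=4, shuffle=False)

for (X, ) in dataset:
    flat = X.view(X.size()[0], -1)  # (batch, 64 * 64)
    print(X.shape, flat.shape)
    break
```

With this layout, the first dimension of `X` is the batch dimension, so `X.view(X.size()[0], -1)` flattens each image to 4096 features.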

My SOM class:

    import torch
    import torch.nn as nn
    from torchvision.utils import save_image


    class SOM(nn.Module):
        def __init__(self, input_size, out_size, lr=0.3, sigma=None):
            '''
            :param input_size: number of input features (flattened image size)
            :param out_size: shape of the output map; the first two entries are used
            :param lr: initial learning rate
            :param sigma: initial neighborhood radius; defaults to max(out_size) / 2
            '''
            super(SOM, self).__init__()
            self.input_size = input_size
            self.out_size = out_size
            self.lr = lr
            if sigma is None:
                self.sigma = max(out_size) / 2
            else:
                self.sigma = float(sigma)

            self.weight = nn.Parameter(torch.randn(input_size, out_size[0] * out_size[1]), requires_grad=False)
            self.locations = nn.Parameter(torch.Tensor(list(self.get_map_index())), requires_grad=False)
            self.pdist_fn = nn.PairwiseDistance(p=2)

        def get_map_index(self):
            # Two-dimensional mapping function
            for x in range(self.out_size[0]):
                for y in range(self.out_size[1]):
                    yield (x, y)

        def _neighborhood_fn(self, input, current_sigma):
            '''e^(-(input / sigma^2))'''
            input.div_(current_sigma ** 2)
            input.neg_()
            input.exp_()
            return input

        def forward(self, input):
            '''
            Find the location of the best matching unit.
            :param input: data
            :return: location of best matching unit, loss
            '''
            batch_size = input.size()[0]
            input = input.view(batch_size, -1, 1)
            batch_weight = self.weight.expand(batch_size, -1, -1)

            dists = self.pdist_fn(input, batch_weight)

            # Find best matching unit
            losses, bmu_indexes = dists.min(dim=1, keepdim=True)
            bmu_locations = self.locations[bmu_indexes]

            return bmu_locations, losses.sum().div_(batch_size).item()

        def self_organizing(self, input, current_iter, max_iter):
            '''
            Train the Self-Organizing Map (SOM)
            :param input: training data
            :param current_iter: current epoch
            :param max_iter: total number of epochs
            :return: loss (minimum distance)
            '''
            batch_size = input.size()[0]

            # Set learning rate and neighborhood radius (linear decay)
            iter_correction = 1.0 - current_iter / max_iter
            lr = self.lr * iter_correction
            sigma = self.sigma * iter_correction

            # Find best matching unit
            bmu_locations, loss = self.forward(input)

            distance_squares = self.locations.float() - bmu_locations.float()
            distance_squares.pow_(2)
            distance_squares = torch.sum(distance_squares, dim=2)

            lr_locations = self._neighborhood_fn(distance_squares, sigma)
            lr_locations.mul_(lr).unsqueeze_(1)

            delta = lr_locations * (input.unsqueeze(2) - self.weight)
            delta = delta.sum(dim=0)
            delta.div_(batch_size)
            self.weight.data.add_(delta)

            return loss

        def save_result(self, dir, im_size=(0, 0, 0)):
            '''
            Visualizes the weights of the Self-Organizing Map (SOM)
            :param dir: directory to save
            :param im_size: (channels, size x, size y)
            :return:
            '''
            images = self.weight.view(im_size[0], im_size[1], im_size[2], self.out_size[0] * self.out_size[1])
            images = images.permute(3, 0, 1, 2)
            save_image(images, dir, normalize=True, padding=1, nrow=self.out_size[0])
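
To make the decay in `self_organizing` and the kernel in `_neighborhood_fn` concrete, here is a small worked sketch in plain Python. It uses `lr=0.3` (the class default) and `sigma=1` with 20 iterations, the values from the training code further down. The helper names `schedule` and `neighborhood` are hypothetical and not part of the class.

```python
import math


def schedule(lr0, sigma0, current_iter, max_iter):
    """Linear decay as in self_organizing: both values shrink towards 0."""
    correction = 1.0 - current_iter / max_iter
    return lr0 * correction, sigma0 * correction


def neighborhood(dist_sq, sigma):
    """Kernel as in _neighborhood_fn: exp(-(d^2 / sigma^2))."""
    return math.exp(-dist_sq / sigma ** 2)


for it in (0, 10, 19):
    lr, sigma = schedule(0.3, 1.0, it, 20)
    # Influence on a map unit one grid step away from the best matching unit.
    print(it, round(lr, 3), round(sigma, 3), neighborhood(1.0, sigma))
```

Because the loop index stops at `max_iter - 1`, `sigma` stays positive and the division inside the kernel is always defined. Late in training the kernel is close to zero for every unit except the best matching one.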

Training:

    import matplotlib.pyplot as plt
    from tqdm import tqdm
    from ipywidgets import widgets
    from IPython.display import display

    niter = 20
    m = 2  # output fields
    n = 2

    model = SOM(
        input_size=img.shape[0] * img.shape[1] * 1,
        out_size=(m, n, 1), sigma=1)

    out = widgets.Output()
    out_clusters = widgets.Output()
    out_history = widgets.Output()
    display(widgets.VBox([out, out_clusters, out_history]))

    print("Start train")
    X = torch.from_numpy(img)
    model.train()
    losses = []

    for it in tqdm(range(niter), total=niter):
        loss = 0
        n_ = 0
        for (X, ) in dataset:
            loss += model.self_organizing(X.view(X.size()[0], -1), it, niter)
            n_ += 1

        # Refresh the plots on the first iteration and then every 5 iterations
        if (it+1) % 5 == 0 or it == 0:
            with out:
                plt.figure()
                buf = model.weight.view(64, 64, -1).argmax(2).float() / 10
                out.clear_output(True)
                plt.imshow(buf, cmap='Accent')
                plt.title(f'{it+1}/{niter} iters (Error: {loss/n_})')
                plt.show()

            with out_clusters:
                out_clusters.clear_output(True)
                plt.figure()
                for i in range(m*n):
                    plt.subplot(2, 5, i+1)
                    plt.imshow(model.weight.view(64, 64, -1)[..., i])
                plt.show()

            with out_history:
                out_history.clear_output(True)
                plt.figure(figsize=(10, 3))
                plt.plot(list(range(len(losses))), (losses), 'b-')
                plt.scatter(list(range(len(losses))), (losses))
                plt.show()

        losses.append(loss / n_)

Error:

    ---------------------------------------------------------------------------
    IndexError                                Traceback (most recent call last)
    /var/folders/y5/pchlt5l12gx5_65v0_bn_fcm0000gn/T/ipykernel_60901/2590886534.py in <module>
         22     n_ = 0
         23     for (X, ) in dataset:
    ---> 24         loss += model.self_organizing(X.view(X.size()[0], -1), it, niter)
         25         n_ += 1
         26     if (it+1) % 5 == 0 or it == 0:

    /var/folders/y5/pchlt5l12gx5_65v0_bn_fcm0000gn/T/ipykernel_60901/671413781.py in self_organizing(self, input, current_iter, max_iter)
         74
         75         #Find best matching unit
    ---> 76         bmu_locations, loss = self.forward(input)
         77
         78         distance_squares = self.locations.float() - bmu_locations.float()

    /var/folders/y5/pchlt5l12gx5_65v0_bn_fcm0000gn/T/ipykernel_60901/671413781.py in forward(self, input)
         55         print(f"losses: {losses}")
         56         print(f"bmu_indexes: {bmu_indexes}")
    ---> 57         bmu_locations = self.locations[bmu_indexes]
         58
         59         return bmu_locations, losses.sum().div_(batch_size).item()

    IndexError: index 2169 is out of bounds for dimension 0 with size 4
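
One way to narrow this down is to look at the shapes. The reported index 2169 is smaller than 64 * 64 = 4096, while `self.locations` has only 2 * 2 = 4 rows, so it looks as if the minimum is taken over the 4096 pixel features rather than over the 4 map units. The sketch below is not the original `forward`. It assumes a batch of flattened images of shape `(batch, 4096)` and computes the distances with an explicit `torch.norm(..., dim=1)`, so the reduced axis is unambiguous. Whether `nn.PairwiseDistance` reduces over a different axis in the installed PyTorch version is something to verify; this sketch does not establish it.

```python
import torch

torch.manual_seed(0)
batch_size, input_size, n_units = 3, 64 * 64, 4

x = torch.randn(batch_size, input_size)      # flattened images (random stand-ins)
weight = torch.randn(input_size, n_units)    # same layout as SOM.weight
locations = torch.tensor([[0., 0.], [0., 1.], [1., 0.], [1., 1.]])  # 2x2 grid

# (batch, 4096, 1) - (4096, 4) broadcasts to (batch, 4096, 4).
diff = x.unsqueeze(2) - weight

# Reduce over the feature axis (dim=1): one distance per map unit.
dists = torch.norm(diff, p=2, dim=1)         # (batch, 4)
losses, bmu_indexes = dists.min(dim=1, keepdim=True)

# Reducing over the last axis instead leaves one value per pixel feature,
# so an argmin over dim=1 could be as large as 4095 and could not index `locations`.
wrong = torch.norm(diff, p=2, dim=2)         # (batch, 4096)

print(dists.shape, wrong.shape, bmu_indexes.max().item())
bmu_locations = locations[bmu_indexes]       # (batch, 1, 2), all indices < 4
```

Printing `dists.shape` inside `forward`, next to the existing debug prints, would show which of the two cases applies.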