sigma_x

February 13, 2020, 6:55pm


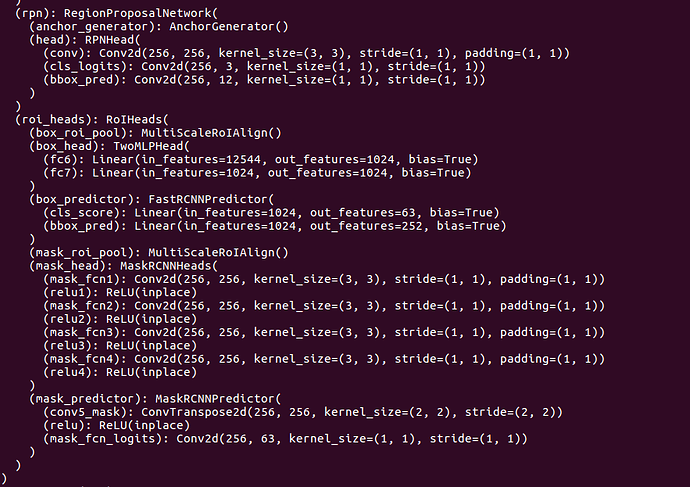

The image has size [3, H, W], and there are 63 classes in total.

If there are 5 objects in the image, the labels have size [5], the bounding boxes [5, 4], and the masks [5, H, W]. Why do I get this error message:

IndexError: The shape of the mask [14] at index 0 does not match the shape of the indexed tensor [14, 63, 28, 28] at index 1

Where does 14 come from? Here's the architecture of the model; it seems correct, and it worked on another problem. I don't understand this error at all.

albanD

February 13, 2020, 7:08pm


Isn’t 14 your batch size?

sigma_x

February 13, 2020, 7:49pm


What do you mean? There's one image with 5 objects/labels/bboxes/masks. I have no idea where 14 comes from. 63 is the number of classes.

albanD

February 13, 2020, 7:51pm


It seems like both the mask and the indexed tensor have size 14 in the 0th dimension. In neural networks, that usually corresponds to the batch size.

If it does not, could you make a small code sample that we can run that reproduces the issue?

sigma_x

February 13, 2020, 8:13pm


It can't be small; it's pretty much the whole dataset plus the training script. The sizes of the labels are specified above. My other Mask R-CNN code doesn't report an error like this. I found a couple of masks that are all zeros, but that's not what's causing it.

To begin with, I don't understand where 14 comes from; it's not a number that appears anywhere in my code. It's something Mask R-CNN does internally, maybe the FPN output? The intended output size of the mask module during training is num_classes × 28 × 28.
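If the model is torchvision's Mask R-CNN (an assumption; the thread doesn't say), the failing line is likely the per-class mask selection in its mask loss, which indexes the [num_pos, num_classes, 28, 28] mask logits with the labels of the matched ground-truth objects. In that reading, 14 would be the number of positive proposals sampled across the batch rather than the image batch size. The sketch below reproduces that indexing in isolation with made-up sizes and a hypothetical helper `select_class_masks`: int64 labels pick one class mask per RoI, while a bool label tensor (uint8 index tensors are treated the same way, with a deprecation warning) is interpreted as a mask over dimension 1 and fails with the same kind of error.

```python
import torch

# Hypothetical sizes matching the error message: 14 positive RoIs,
# 63 classes, 28x28 mask-head output per class.
num_pos, num_classes, m = 14, 63, 28
mask_logits = torch.randn(num_pos, num_classes, m, m)

# Hypothetical class ids assigned to the 14 positive RoIs (int64, as required).
labels = torch.randint(1, num_classes, (num_pos,), dtype=torch.int64)


def select_class_masks(mask_logits: torch.Tensor, labels: torch.Tensor) -> torch.Tensor:
    """For each RoI i, pick the mask predicted for class labels[i]."""
    rows = torch.arange(labels.shape[0], device=labels.device)
    return mask_logits[rows, labels]  # advanced indexing -> [num_pos, m, m]


selected = select_class_masks(mask_logits, labels)
print(selected.shape)  # torch.Size([14, 28, 28])

# A bool index tensor is treated as a mask, not as class indices:
# its shape [14] is checked against dim 1 (size 63), so indexing fails.
error = None
try:
    select_class_masks(mask_logits, labels.to(torch.bool))
except IndexError as exc:
    error = exc
    print("IndexError:", exc)
```

Casting the labels to int64 before they reach the loss is what makes the second index behave as "one class per row" instead of as a boolean mask.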

sigma_x

February 13, 2020, 9:18pm


Alright, I found it! It had nothing to do with sizes at all. It turns out the tensor dtypes were wrong. I changed the labels to torch.int64, the bounding boxes to torch.float and the masks to torch.uint8, e.g.:

self.lab['labels'] = torch.tensor(labels, dtype=torch.int64)

Before that, they were all uint8. Damn it!
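For reference, torchvision's detection models document the training target as a dict with "boxes" as a float tensor of shape [N, 4], "labels" as an int64 tensor of shape [N], and "masks" as a uint8 tensor of shape [N, H, W], which is consistent with the fix above. Here is a minimal sketch of a dataset-side helper that enforces those dtypes, assuming the raw annotations arrive as uint8 tensors; the name `make_target` and all example values are hypothetical.

```python
import torch


def make_target(boxes, labels, masks) -> dict:
    """Hypothetical helper: build a Mask R-CNN target dict with explicit dtypes."""
    return {
        "boxes": torch.as_tensor(boxes, dtype=torch.float32),  # [N, 4] as (x1, y1, x2, y2)
        "labels": torch.as_tensor(labels, dtype=torch.int64),  # [N] class ids; must be int64
        "masks": torch.as_tensor(masks, dtype=torch.uint8),    # [N, H, W] binary masks
    }


# Five objects in a hypothetical 64x64 image, with everything stored as uint8,
# which is the situation that triggered the error in this thread.
raw_boxes = torch.tensor([[2, 2, 20, 20], [10, 5, 30, 40], [0, 0, 8, 8],
                          [40, 40, 60, 63], [25, 30, 50, 50]], dtype=torch.uint8)
raw_labels = torch.tensor([3, 7, 7, 12, 62], dtype=torch.uint8)
raw_masks = torch.zeros(5, 64, 64, dtype=torch.uint8)
for i, (x1, y1, x2, y2) in enumerate(raw_boxes.tolist()):
    raw_masks[i, y1:y2, x1:x2] = 1  # fill each box as a crude mask

target = make_target(raw_boxes, raw_labels, raw_masks)
for key, value in target.items():
    print(key, value.dtype, tuple(value.shape))
```

Doing the casts in one place in the dataset's __getitem__ keeps the dtypes consistent no matter how the annotations were loaded.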


I am having the same issue when applying the loss function from this GitHub repository:

import torch
import torch.nn.functional as F
from collections import defaultdict
from torch import nn
from torch.nn import CrossEntropyLoss, NLLLoss
from torch.nn import Dropout
from transformers import BertConfig, BertModel, BertForMaskedLM
from typing import Any


class BertPretrain(torch.nn.Module):
    def __init__(self,
                 model_name_or_path: str):
        super(BertPretrain, self).__init__()
        self.bert_model = BertForMaskedLM.from_pretrained(model_name_or_path)

    def forward(self,
                input_ids: torch.Tensor,
                mlm_labels: torch.Tensor):
        outputs = self.bert_model(input_ids, masked_lm_labels=mlm_labels)
        # ... (truncated)

I have tried changing the types, but with no luck. What do you think the issue is?

[Screenshot: Screen Shot 2021-04-20 at 10.14.36 PM]
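By analogy with the fix above, one thing worth checking is the dtype and content of mlm_labels. The transformers documentation for BertForMaskedLM describes the labels as a LongTensor of shape (batch_size, sequence_length) in which positions set to -100 are ignored by the loss. Below is a minimal sketch of building such labels, using plain PyTorch instead of transformers and a hypothetical helper `make_mlm_labels`. It is a sketch under those assumptions, not a confirmed diagnosis of the error in the screenshot.

```python
import torch
import torch.nn.functional as F


def make_mlm_labels(input_ids: torch.Tensor, masked: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: original token id at masked positions, -100 elsewhere."""
    labels = input_ids.clone().to(torch.int64)  # class-index targets must be int64
    labels[~masked.bool()] = -100  # -100 is cross_entropy's default ignore_index
    return labels


vocab_size = 30
input_ids = torch.randint(0, vocab_size, (2, 6))
masked = torch.zeros(2, 6, dtype=torch.bool)
masked[:, 1] = True  # pretend token 1 of each sequence was masked out
masked[0, 4] = True

labels = make_mlm_labels(input_ids, masked)
logits = torch.randn(2, 6, vocab_size)  # stand-in for the MLM head's prediction scores
loss = F.cross_entropy(logits.view(-1, vocab_size), labels.view(-1))
print(labels)
print(loss)
```

Only the three masked positions contribute to the loss; every other position carries -100 and is skipped.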