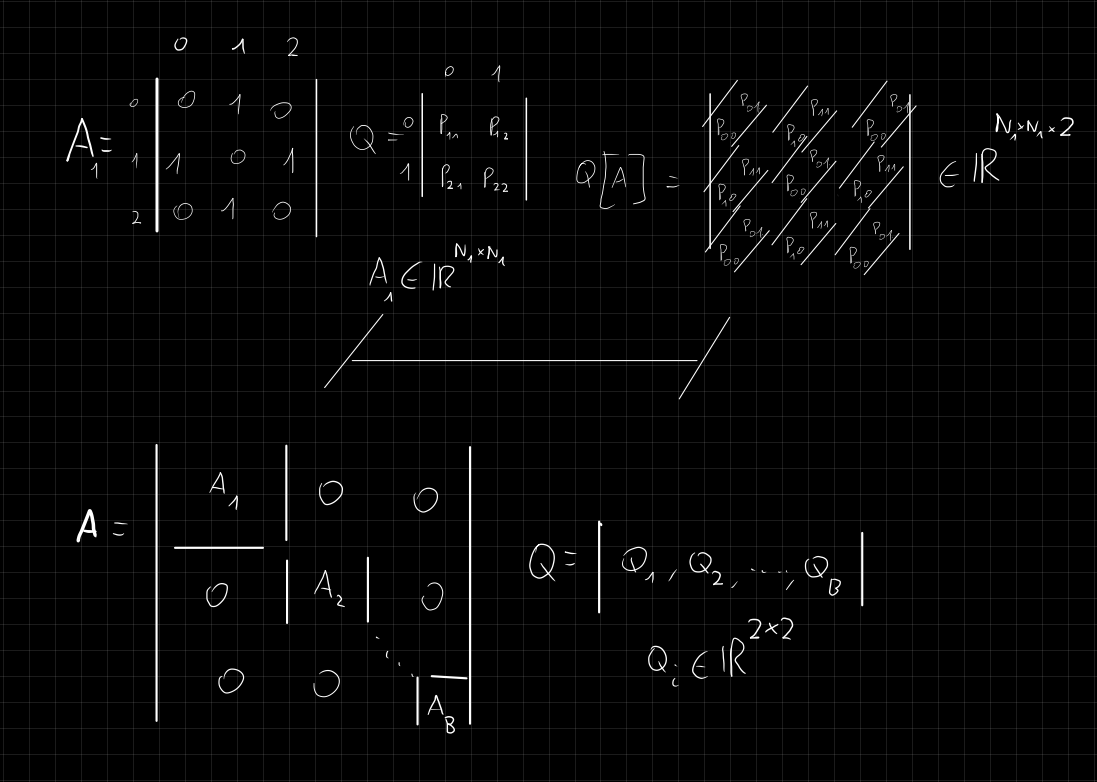

This answer is assuming that your matrix is square and the size of the patches are also square.

But I think the best option is to do it with a for loop (maybe there is a better option but idk)

A_n = torch.randint(0, 2, (4, 3, 3))

A = torch.block_diag(A_n[0], A_n[1], A_n[2], A_n[3])

print(A)

Q = torch.rand(4, 2, 2)

size_patch = 3

QA = torch.zeros(*A.shape, 2)

for i, idx in enumerate(range(0, A.shape[0], size_patch)):

QA[idx:idx+size_patch, idx:idx+size_patch] = Q[i, A[idx:idx+size_patch, idx:idx+size_patch], :]

torch.set_printoptions(precision=2)

print(f"Probability that the values are 0:\n\n{QA[:,:,0]}\n\n")

print(f"Probability that the values are 1:\n\n{QA[:,:,1]}")

# Output:

tensor([[0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0]])

Probability that the values are 0:

tensor([[0.99, 0.61, 0.99, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.61, 0.61, 0.99, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.99, 0.99, 0.99, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.96, 0.25, 0.96, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.96, 0.25, 0.96, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.96, 0.25, 0.25, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.42, 0.42, 0.56, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.56, 0.42, 0.56, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.56, 0.42, 0.56, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.64, 0.64, 0.64],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.64, 0.64, 0.64],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.64, 0.96, 0.64]])

Probability that the values are 1:

tensor([[0.19, 0.47, 0.19, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.47, 0.47, 0.19, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.19, 0.19, 0.19, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.29, 0.39, 0.29, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.29, 0.39, 0.29, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.29, 0.39, 0.39, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.98, 0.98, 0.29, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.29, 0.98, 0.29, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.29, 0.98, 0.29, 0.00, 0.00, 0.00],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.25, 0.25, 0.25],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.25, 0.25, 0.25],

[0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.25, 0.74, 0.25]])