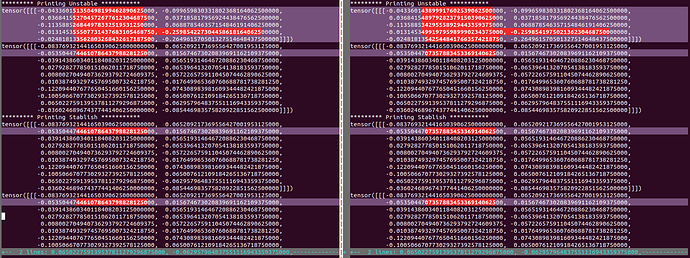

Has anyone observed differences in CPU calculations across different processors with Torch 1.4.0? Is there a way to fix this? I ran the same code in the same environment (a Docker container) on two different machines and got slightly different results on each. The image above shows the differences.
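
To put a number on "slightly different", one option is to measure the gap between the two machines' outputs in float32 units in the last place (ULPs). The sketch below assumes the values are float32 (PyTorch's default dtype) and uses helper names made up for this post. Replace the example inputs with the same element as printed on each machine. A gap of only one or two ULPs usually points to rounding differences rather than a logic bug.

```python
import struct

def float32_ordered(x: float) -> int:
    # Round x to float32, reinterpret its IEEE-754 bit pattern as an unsigned
    # int, and map it onto a monotonic integer line so that adjacent float32
    # values differ by exactly 1. NaN is not handled.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    if bits & 0x80000000:  # sign bit set: negative number
        return -(bits & 0x7FFFFFFF)
    return bits

def float32_ulp_distance(a: float, b: float) -> int:
    # Number of float32 steps (units in the last place) separating a and b.
    return abs(float32_ordered(a) - float32_ordered(b))

# 1.0 and the next representable float32 above it are one ULP apart.
print(float32_ulp_distance(1.0, 1.0 + 2**-23))  # 1
```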

Machine one has Intel(R) Core™ i7-7800X CPU @ 3.50GHz

Machine two has Intel(R) Xeon(R) CPU E5-2670 0 @ 2.60GHz
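
One plausible explanation, not confirmed here: the i7-7800X supports AVX-512 while the Xeon E5-2670 (Sandy Bridge) only supports AVX, so the math library behind PyTorch's CPU kernels (for example MKL) may choose a different code path on each machine. Different kernels can add the same numbers in a different order, and floating-point addition is not associative. This pure-Python toy, with made-up function names, contrasts a scalar loop with a kernel that splits a sum across two lanes:

```python
def sequential_sum(values):
    # Accumulate left to right, one element at a time (a scalar code path).
    total = 0.0
    for v in values:
        total += v
    return total

def lane_sum(values, lanes):
    # Accumulate into `lanes` partial sums and combine them at the end,
    # roughly the way a vectorized (SIMD) kernel splits a reduction.
    partial = [0.0] * lanes
    for i, v in enumerate(values):
        partial[i % lanes] += v
    total = 0.0
    for p in partial:
        total += p
    return total

values = [1e16, 1.0, -1e16, 1.0]
print(sequential_sum(values))     # 1.0
print(lane_sum(values, lanes=2))  # 2.0
```

Every individual addition is correctly rounded in both versions; the results differ only because the grouping changed. (The built-in `sum` is avoided on purpose, since recent Python versions use compensated summation for floats.)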

When these calculations are applied recursively, the differences grow large enough to change the predictions from my model.
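
To illustrate why a recursive loop magnifies such tiny gaps, here is a toy recurrence, not the model from this post: the logistic map x_{n+1} = r * x_n * (1 - x_n) with r = 3.9, which is chaotic. Two runs whose starting points differ by 1e-7 (roughly the size of a float32 rounding step for values near 1) stay close for a while and then separate completely. Whether a particular GRU feedback loop amplifies errors this strongly depends on the model; the function names here are hypothetical.

```python
def logistic_step(x, r=3.9):
    # One step of the logistic map; r = 3.9 lies in its chaotic regime.
    return r * x * (1.0 - x)

def steps_until_divergence(x0, perturbation, threshold=0.01, max_steps=200):
    # Run two copies of the recurrence whose starting points differ by
    # `perturbation`; return the first step at which the outputs differ by
    # more than `threshold`, or None if that never happens within max_steps.
    a, b = x0, x0 + perturbation
    for step in range(1, max_steps + 1):
        a, b = logistic_step(a), logistic_step(b)
        if abs(a - b) > threshold:
            return step
    return None

print(steps_until_divergence(0.2, 1e-7))
```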

The code below produces the results shown.

```python
#!/usr/bin/env python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(1)

# No effect: these flags only configure cuDNN, which is not used on the CPU
torch.backends.cudnn.deterministic = True
torch.backends.cudnn.benchmark = False
torch.backends.cudnn.enabled = False

torch.set_printoptions(precision=30)


class TestNet(nn.Module):
    def __init__(self, input_dim, hidden_size, depth, observation_dim, dropout=0):
        super(TestNet, self).__init__()
        self.hidden_size = hidden_size
        if depth >= 2:
            self.gru = nn.GRU(input_dim, hidden_size, depth, dropout=dropout)
        else:
            self.gru = nn.GRU(input_dim, hidden_size, depth)
        self.linear_mean1 = nn.Linear(hidden_size, hidden_size)
        self.linear_mean2 = nn.Linear(hidden_size, observation_dim)

    def forward(self, input_seq, hidden=None, good=False):
        output_seq, hidden = self.gru(input_seq, hidden)
        if good:
            # Return the GRU output directly
            mean = output_seq
        else:
            # Pass the GRU output through the two linear layers
            mean = self.linear_mean2(F.relu(self.linear_mean1(output_seq)))  # BAD
        return mean, hidden


input_dim = 20
hidden_dim = 20
proj_dim = 10

hidden = torch.zeros(1, 1, hidden_dim)
hidden_in = torch.zeros(1, 1, hidden_dim)  # (num_layers, batch, hidden_size)
in_tensor = torch.zeros(input_dim)

rnn_thing = TestNet(input_dim, hidden_dim, 1, proj_dim, 0)
rnn_thing.eval()

# Input shape is (seq_len, batch, input_dim) = (1, 1, 20)
out, hidden = rnn_thing(in_tensor.view(1, 1, -1), hidden=hidden_in, good=False)
print('********* Printing Unstable ***********')
print(out.data)
print(hidden.data)

print('********* Printing Stablish ***********')
out, hidden = rnn_thing(in_tensor.view(1, 1, -1), hidden=hidden_in, good=True)
print(out.data)
print(hidden.data)
```