Problem

Hi,

I converted a PyTorch model to an ONNX model.

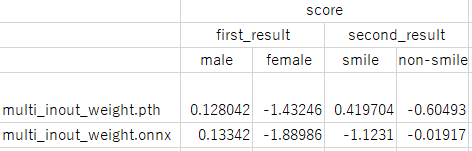

However, the outputs of the two models differ, as shown below.

Inference environment

PyTorch

・python 3.7.11

・pytorch 1.6.0

・torchvision 0.7.0

・CUDA Toolkit 10.1

・numpy 1.21.5

・pillow 8.4.0

ONNX

・onnxruntime-win-x64-gpu-1.4.0

・Visual Studio 2017

・Cuda compilation tools, release 10.1, V10.1.243

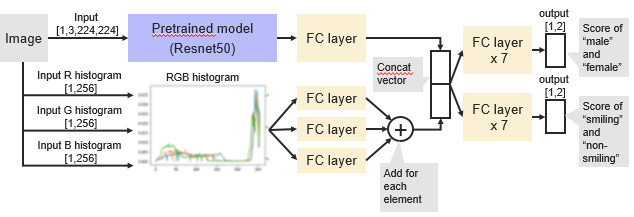
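Because the ONNX model is run from C++ (onnxruntime 1.4.0, Visual Studio 2017) while the PyTorch model is run from Python, the input tensors must be prepared identically on both sides before the outputs can be compared. The post does not show the preprocessing, so the following is only a minimal NumPy sketch, assuming the image branch expects RGB input normalized with the standard ImageNet mean/std used by torchvision's pretrained ResNet-50. It shows the 1x3x224x224 (NCHW) float32 layout that the dummy `input_image` in the conversion script implies. The function name `to_nchw_tensor` and the constants are illustrative assumptions.

```python
import numpy as np

# ASSUMPTION: ImageNet normalization constants used by torchvision pretrained models.
# Replace them with whatever transform the PyTorch inference script actually applies.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def to_nchw_tensor(image_hwc_rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 RGB image into a 1x3xHxW float32 tensor (hypothetical helper)."""
    if image_hwc_rgb.dtype != np.uint8 or image_hwc_rgb.ndim != 3 or image_hwc_rgb.shape[2] != 3:
        raise ValueError("expected an HxWx3 uint8 RGB image")
    x = image_hwc_rgb.astype(np.float32) / 255.0  # scale to [0, 1], like transforms.ToTensor()
    x = (x - IMAGENET_MEAN) / IMAGENET_STD        # per-channel normalization, broadcast over H and W
    x = np.transpose(x, (2, 0, 1))                # HWC -> CHW (channel-first, as PyTorch expects)
    return np.ascontiguousarray(x[np.newaxis])    # add the batch dimension -> NCHW
```

The C++ code has to apply the same steps in the same order and write the values in the same channel-first memory layout. For example, if the images are loaded with OpenCV, `cv::imread` returns BGR data in HWC order, which must be converted before it is copied into the input tensor.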

Network of the model

Detailed information about our model
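Every hidden layer in the two heads follows the pattern `F.relu(bn(fc(x)))`. After `model.eval()`, `nn.BatchNorm1d` normalizes with its stored `running_mean` and `running_var` instead of batch statistics. Each block is therefore a fixed affine map followed by ReLU, and the exported graph should reproduce that. This also means that reusing one BatchNorm module (e.g. `bn_128` for `fc1`/`fc2`/`fc3`) is deterministic at inference time; the sharing only matters during training. The NumPy sketch below spells out the eval-mode computation so that a single layer can be checked by hand. The function name is hypothetical, and `gamma`/`beta` correspond to PyTorch's `bn.weight`/`bn.bias`.

```python
import numpy as np


def linear_bn_relu_eval(x, weight, bias, running_mean, running_var, gamma, beta, eps=1e-5):
    """Eval-mode F.relu(bn(fc(x))) for x of shape (N, in_features) (hypothetical helper).

    weight: (out_features, in_features) and bias: (out_features,), as stored by nn.Linear
    running_mean, running_var, gamma (bn.weight), beta (bn.bias): (out_features,)
    eps: nn.BatchNorm1d's default epsilon is 1e-5
    """
    z = x @ weight.T + bias                              # nn.Linear
    z = (z - running_mean) / np.sqrt(running_var + eps)  # normalize with the stored statistics
    z = z * gamma + beta                                 # learned affine parameters of the BN layer
    return np.maximum(z, 0.0)                            # ReLU
```

Feeding the parameters of, say, `model.fc_y1` and `model.bn_64` (converted with `.detach().numpy()`) into this function should match `F.relu(model.bn_64(model.fc_y1(x)))` on the same input while the model is in eval mode.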

Script for converting the model from PyTorch to ONNX

```python
import torch
import torch.onnx as onnx
import torchvision.models as models
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self, pretrained):
        # Run the initializer of the superclass (the Module class)
        super().__init__()
        self.pretrained = pretrained
        # Replace the final layer of the backbone: 2048 features -> 128
        self.pretrained.fc = nn.Linear(2048, 128)
        # One projection per 256-dimensional histogram input
        self.fc1 = nn.Linear(256, 128)
        self.fc2 = nn.Linear(256, 128)
        self.fc3 = nn.Linear(256, 128)
        # Head 1
        self.fc_y1 = nn.Linear(128*2, 64)
        self.fc_y1_1 = nn.Linear(64, 32)
        self.fc_y1_2 = nn.Linear(32, 16)
        self.fc_y1_3 = nn.Linear(16, 32)
        self.fc_y1_4 = nn.Linear(32, 16)
        self.fc_y1_5 = nn.Linear(16, 8)
        self.fc_y1_6 = nn.Linear(8, 2)
        # Head 2
        self.fc_y2 = nn.Linear(128*2, 64)
        self.fc_y2_1 = nn.Linear(64, 32)
        self.fc_y2_2 = nn.Linear(32, 16)
        self.fc_y2_3 = nn.Linear(16, 32)
        self.fc_y2_4 = nn.Linear(32, 16)
        self.fc_y2_5 = nn.Linear(16, 8)
        self.fc_y2_6 = nn.Linear(8, 2)
        # BatchNorm layers, reused wherever the feature width matches
        # (bn_256 is defined but not used in forward)
        self.bn_256 = nn.BatchNorm1d(num_features=256)
        self.bn_128 = nn.BatchNorm1d(num_features=128)
        self.bn_64 = nn.BatchNorm1d(num_features=64)
        self.bn_32 = nn.BatchNorm1d(num_features=32)
        self.bn_16 = nn.BatchNorm1d(num_features=16)
        self.bn_8 = nn.BatchNorm1d(num_features=8)

    def forward(self, x0, x1, x2, x3):  # Define the method that computes the outputs from the inputs
        x0 = self.pretrained(x0)  # image branch -> (N, 128)
        x1 = F.relu(self.bn_128(self.fc1(x1)))
        x2 = F.relu(self.bn_128(self.fc2(x2)))
        x3 = F.relu(self.bn_128(self.fc3(x3)))
        x123 = x1 + x2 + x3
        x = torch.cat((x0, x123), 1)  # (N, 256)

        y0 = F.relu(self.bn_64(self.fc_y1(x)))
        y0 = F.relu(self.bn_32(self.fc_y1_1(y0)))
        y0 = F.relu(self.bn_16(self.fc_y1_2(y0)))
        y0 = F.relu(self.bn_32(self.fc_y1_3(y0)))
        y0 = F.relu(self.bn_16(self.fc_y1_4(y0)))
        y0 = F.relu(self.bn_8(self.fc_y1_5(y0)))
        y0 = self.fc_y1_6(y0)

        y1 = F.relu(self.bn_64(self.fc_y2(x)))
        y1 = F.relu(self.bn_32(self.fc_y2_1(y1)))
        y1 = F.relu(self.bn_16(self.fc_y2_2(y1)))
        y1 = F.relu(self.bn_32(self.fc_y2_3(y1)))
        y1 = F.relu(self.bn_16(self.fc_y2_4(y1)))
        y1 = F.relu(self.bn_8(self.fc_y2_5(y1)))
        y1 = self.fc_y2_6(y1)

        return y0, y1


resnet = models.resnet50(pretrained=True)
model = Net(resnet)
model.load_state_dict(torch.load('path to Pytorch model'))
model.eval()  # use the BatchNorm running statistics for export

# Dummy inputs; they fix the input shapes of the exported graph
input_image = torch.randn(1, 3, 224, 224, requires_grad=True)
input_hist_R = torch.randn(1, 256, requires_grad=True)
input_hist_G = torch.randn(1, 256, requires_grad=True)
input_hist_B = torch.randn(1, 256, requires_grad=True)
input_tuple = (input_image, input_hist_R, input_hist_G, input_hist_B)

input_names = ["input.1", "input.154", "input.156", "input.158"]
output_names = ["593", "612"]

onnx.export(model, input_tuple, 'multi_inout.onnx',
            export_params=True,
            opset_version=12,
            do_constant_folding=True,
            input_names=input_names,
            output_names=output_names)
```
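To tell whether the difference comes from the export itself or from the C++ inference code, one option is to run the exported file with the Python `onnxruntime` package on exactly the same tensors that are fed to PyTorch, then compare the results numerically. Below is a minimal NumPy helper for the comparison step. The function name and the default tolerances are assumptions, not values from the post; small float32 discrepancies between frameworks are expected.

```python
import numpy as np


def compare_outputs(names, torch_outputs, ort_outputs, rtol=1e-3, atol=1e-5):
    """Print the max absolute difference per output; return True if all outputs match."""
    if not (len(names) == len(torch_outputs) == len(ort_outputs)):
        raise ValueError("names and both output lists must have the same length")
    all_close = True
    for name, ref, test in zip(names, torch_outputs, ort_outputs):
        ref = np.asarray(ref, dtype=np.float32)
        test = np.asarray(test, dtype=np.float32)
        if ref.shape != test.shape:
            print(f"{name}: shape mismatch {ref.shape} vs {test.shape}")
            all_close = False
            continue
        max_diff = float(np.max(np.abs(ref - test)))
        ok = bool(np.allclose(ref, test, rtol=rtol, atol=atol))
        print(f"{name}: max |diff| = {max_diff:.3e} -> {'OK' if ok else 'MISMATCH'}")
        all_close = all_close and ok
    return all_close
```

With the objects from the conversion script, the two sides could be obtained as `torch_outputs = [t.detach().numpy() for t in model(*input_tuple)]` and `ort_outputs = onnxruntime.InferenceSession('multi_inout.onnx').run(None, {n: t.detach().numpy() for n, t in zip(input_names, input_tuple)})`. Newer GPU builds of onnxruntime may additionally require an explicit `providers=[...]` argument. If `compare_outputs(output_names, torch_outputs, ort_outputs)` reports a match, the conversion is probably fine, and the difference is more likely in how the C++ program prepares or feeds its inputs.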

Environment for converting the model

・python 3.7.11

・pytorch 1.6.0

・torchvision 0.7.0

・CUDA Toolkit 10.1

・numpy 1.21.5

・pillow 8.4.0

Is there something wrong with the way I converted the model?

If necessary, I can share the inference scripts and models.

Please help…