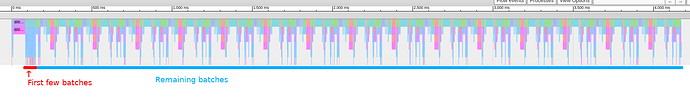

Hey y’all, I’m using PVCNN with custom weights to do semantic segmentation on point clouds. For the first 6 batches or so, inference runs quickly, but after that it slows down considerably. I did not trust measurements via `time()` from Python, so I checked the profiler, and sure enough the result is consistent with what I saw:

Frustratingly, enabling `use_cuda=True` in the profiler makes it such that all batches infer at the same, slow pace; total inference time increases by seconds when CUDA profiling is enabled. My inference loop looks like so:

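```python
import torch
from torch.autograd import profiler  # assumed import; matches the use_cuda-style API
from torch.utils.data import DataLoader

points = torch.from_numpy(my_pointclouds)
loader = DataLoader(points, batch_size=40, num_workers=0, pin_memory=True)

# Comma, not `and`: `a and b` would enter only the profiler context, not no_grad
with torch.no_grad(), profiler.profile(record_shapes=True) as prof:
    for batch in loader:
        outputs = model(batch.to(configs.device, non_blocking=True))
        del batch, outputs

prof.export_chrome_trace("trace.json")
```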
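
For comparison, this is roughly how I would time each batch by hand. CUDA kernel launches are asynchronous, so a plain clock read can return before the GPU has finished. The sketch below calls a synchronization hook before every timestamp. `time_batches`, `run_batch`, and `sync` are names I made up for illustration, and I have not validated this on PVCNN itself.

```python
import time

def time_batches(run_batch, batches, sync=lambda: None):
    """Return per-batch wall-clock durations in seconds.

    `sync` is called before each clock read so that queued asynchronous
    work (e.g. CUDA kernels) has finished before the timestamp is taken.
    """
    durations = []
    for batch in batches:
        sync()                        # drain work left over from earlier batches
        start = time.perf_counter()
        run_batch(batch)
        sync()                        # wait for this batch's own work to finish
        durations.append(time.perf_counter() - start)
    return durations
```

With my loop this would be called inside `torch.no_grad()` as `time_batches(lambda b: model(b.to(configs.device, non_blocking=True)), loader, sync=torch.cuda.synchronize)`. If the per-batch times come out uniform once synchronization is added, the fast first batches were probably just launches being queued rather than work being completed.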

The dataset should not be too large for my 8 GB GTX 1080, as it only takes up a few MB.

What could possibly be negatively affecting the inference speed of PVCNN? Why does profiling CUDA have such an impact? Thanks