I have noticed that, at inference time with the deeplabv3 model for image segmentation, doubling the batch size doubles the inference time (and vice versa).

I expected instead that, until the GPU gets close to saturation, the time per batch would stay constant regardless of the batch size.

Here is the reproducible code snippet, which I am running on an EC2 instance (g4d.xlarge).

PyTorch version: 1.13.1

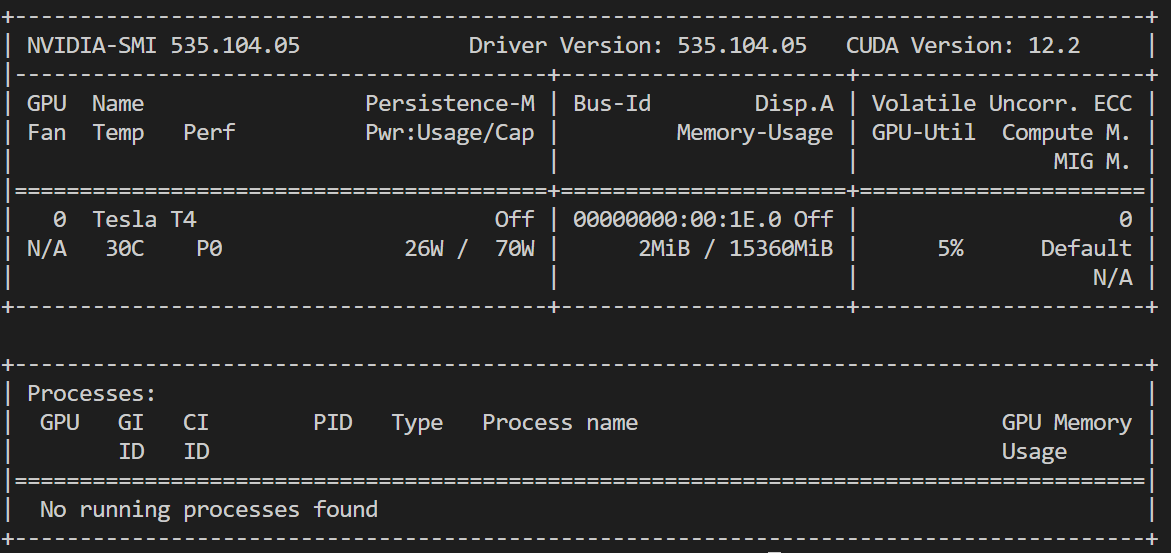

The CUDA/GPU version and GPU memory are shown in the nvidia-smi picture.

    import torch, time

    model = torch.hub.load('pytorch/vision:v0.10.0', 'deeplabv3_resnet50', pretrained=True)
    batch_size = 2

    with torch.no_grad():
        device = 'cuda'
        dummy_input = torch.ones((batch_size, 3, 512, 512), dtype=torch.float32, device=device)
        print(f'batch_size = {batch_size}')
        print(f'dummy_input bytes: {dummy_input.storage().nbytes()}')
        model.to(device)
        model.eval()
        for i in range(5):
            # print(torch.cuda.memory_summary(abbreviated=True))
            torch.cuda.synchronize()
            start = time.time()
            _ = model(dummy_input)
            torch.cuda.synchronize()
            end = time.time()
            print(f'pass {i}: {end - start}')

I am aware of the GPU warm-up, so I am not considering the first iteration of the loop.
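
For reference, the warm-up-then-time pattern used above can be written as a small reusable helper. This is only a minimal sketch: `time_calls` and its parameters are hypothetical names that are not part of the original snippet, and the `sync` argument defaults to a no-op so the example runs without a GPU.

```python
import statistics
import time


def time_calls(fn, sync=lambda: None, warmup=1, iters=5):
    """Return per-call wall-clock times in seconds, excluding warm-up calls.

    `sync` is called before and after each timed call; on a GPU it should
    block until all queued work has finished (assumption: the caller passes
    a suitable function, the default does nothing).
    """
    for _ in range(warmup):
        fn()  # warm-up calls are executed but not timed
    timings = []
    for _ in range(iters):
        sync()  # make sure previously queued work has finished
        start = time.perf_counter()
        fn()
        sync()  # wait for the work launched by fn() to finish
        timings.append(time.perf_counter() - start)
    return timings


if __name__ == "__main__":
    # CPU-only stand-in workload so the sketch runs anywhere.
    results = time_calls(lambda: sum(range(100_000)))
    print(f"median: {statistics.median(results):.6f} s")
```

With the model from the snippet, the call would presumably look like `time_calls(lambda: model(dummy_input), sync=torch.cuda.synchronize)` inside the `torch.no_grad()` block.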

These are the timings for the various batch sizes:

    batch_size = 1
    dummy_input bytes: 3145728
    pass 0: 1.7911558151245117
    pass 1: 0.09116387367248535
    pass 2: 0.09141707420349121
    pass 3: 0.09008550643920898
    pass 4: 0.08789610862731934
    -----------------------------------------
    batch_size = 2
    dummy_input bytes: 6291456
    pass 0: 1.850174903869629
    pass 1: 0.1694350242614746
    pass 2: 0.16865038871765137
    pass 3: 0.16701459884643555
    pass 4: 0.1702117919921875
    -----------------------------------------
    batch_size = 4
    dummy_input bytes: 12582912
    pass 0: 2.0092501640319824
    pass 1: 0.33000612258911133
    pass 2: 0.3304569721221924
    pass 3: 0.33622169494628906
    pass 4: 0.3294081687927246
    -----------------------------------------
    batch_size = 8
    dummy_input bytes: 25165824
    pass 0: 2.2980120182037354
    pass 1: 0.6141374111175537
    pass 2: 0.6228690147399902
    pass 3: 0.6222057342529297
    pass 4: 0.6159627437591553
    -----------------------------------------
    batch_size = 16
    dummy_input bytes: 50331648
    pass 0: 2.885660409927368
    pass 1: 1.2400434017181396
    pass 2: 1.2422657012939453
    pass 3: 1.2391083240509033
    pass 4: 1.2441127300262451
    -----------------------------------------
    batch_size = 32
    dummy_input bytes: 100663296
    pass 0: 4.189502000808716
    pass 1: 2.544891357421875
    pass 2: 2.5518083572387695
    pass 3: 2.5657997131347656
    pass 4: 2.57613468170166
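
As a sanity check, the `dummy_input bytes` values follow directly from the tensor shape, since `float32` uses 4 bytes per element. A small sketch of that arithmetic, where `input_nbytes` is a hypothetical helper introduced only for illustration:

```python
def input_nbytes(batch_size, channels=3, height=512, width=512, bytes_per_element=4):
    """Size in bytes of a dense (batch, channels, height, width) float32 tensor."""
    return batch_size * channels * height * width * bytes_per_element


if __name__ == "__main__":
    for batch_size in (1, 2, 4, 8, 16, 32):
        print(f"batch_size = {batch_size}: {input_nbytes(batch_size)} bytes")
```

Even at batch size 32 the input itself is only about 96 MiB, so the input tensor alone is small compared with the GPU memory figures reported further down.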

As we can see, doubling the batch size roughly doubles the inference time, which is not what I expected.
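
To make the scaling easier to see, the timings can be converted into time per image and images per second. The sketch below uses passes 1 to 4 from the output above, rounded to four decimals; `per_image_stats` is a hypothetical helper name. A roughly constant per-image time would be consistent with the GPU already being close to fully used at batch size 1 for 512x512 inputs, but that is an interpretation, not something these measurements prove.

```python
from statistics import mean

# Passes 1-4 from the post, rounded to 4 decimals (pass 0 is warm-up and is skipped).
timings = {
    1: [0.0912, 0.0914, 0.0901, 0.0879],
    2: [0.1694, 0.1687, 0.1670, 0.1702],
    4: [0.3300, 0.3305, 0.3362, 0.3294],
    8: [0.6141, 0.6229, 0.6222, 0.6160],
    16: [1.2400, 1.2423, 1.2391, 1.2441],
    32: [2.5449, 2.5518, 2.5658, 2.5761],
}


def per_image_stats(timings):
    """Map batch size -> (seconds per image, images per second)."""
    stats = {}
    for batch_size, passes in timings.items():
        batch_time = mean(passes)  # average time for one full batch
        stats[batch_size] = (batch_time / batch_size, batch_size / batch_time)
    return stats


stats = per_image_stats(timings)

if __name__ == "__main__":
    for batch_size, (sec_per_image, images_per_sec) in stats.items():
        print(f"batch_size = {batch_size:2d}: "
              f"{sec_per_image * 1000:.1f} ms/image, {images_per_sec:.1f} images/s")
```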

Am I missing something? Is my way of timing incorrect?

I am also adding here the output of nvidia-smi before the execution.

I also ran the CUDA memory summary for batch_size=32; nothing seems strange to me on the memory side.

Here is the summary after the last pass:

    |===========================================================================|
    |                  PyTorch CUDA memory summary, device ID 0                 |
    |---------------------------------------------------------------------------|
    |            CUDA OOMs: 0            |        cudaMalloc retries: 0         |
    |===========================================================================|
    |        Metric         | Cur Usage  | Peak Usage | Tot Alloc  | Tot Freed  |
    |---------------------------------------------------------------------------|
    | Allocated memory      |    1602 MB |    5414 MB |  171062 MB |  169459 MB |
    |---------------------------------------------------------------------------|
    | Active memory         |    1602 MB |    5414 MB |  171062 MB |  169459 MB |
    |---------------------------------------------------------------------------|
    | GPU reserved memory   |   10596 MB |   10596 MB |   10596 MB |        0 B |
    |---------------------------------------------------------------------------|
    | Non-releasable memory |    1165 MB |    1935 MB |   76126 MB |   74961 MB |
    |---------------------------------------------------------------------------|
    | Allocations           |        373 |        382 |       1171 |        798 |
    |---------------------------------------------------------------------------|
    | Active allocs         |        373 |        382 |       1171 |        798 |
    |---------------------------------------------------------------------------|
    | GPU reserved segments |         31 |         31 |         31 |          0 |
    |---------------------------------------------------------------------------|
    | Non-releasable allocs |          7 |         10 |        317 |        310 |
    |---------------------------------------------------------------------------|
    | Oversize allocations  |          0 |          0 |          0 |          0 |
    |---------------------------------------------------------------------------|
    | Oversize GPU segments |          0 |          0 |          0 |          0 |
    |===========================================================================|

Thanks for any help!