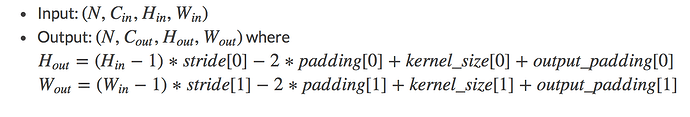

Is it possible to automatically infer the padding size required for nn.ConvTranspose2d, so that we can specify a particular output shape that the layer will try to match at run time? For example, TensorFlow's tf.nn.conv2d_transpose function allows the output_shape to be specified at run time.
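For reference, the PyTorch docs give the transposed-convolution output size per spatial dimension as `out = (in - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1`. Since `padding` is fixed when the layer is built, the quantity that can absorb a size mismatch at run time is `output_padding`. The helper below is a hypothetical sketch (the name `infer_output_padding` and its scalar `kernel_size`/`stride` arguments are assumptions, not a PyTorch API). It solves that formula for `output_padding` and rejects targets that PyTorch would not allow, since `output_padding` must be smaller than either the stride or the dilation.

```python
def infer_output_padding(in_hw, target_hw, kernel_size, stride, padding=0, dilation=1):
    """Hypothetical helper: solve the ConvTranspose2d size formula for output_padding.

    Assumes square kernels and equal stride/padding/dilation on both axes.
    Returns an (H, W) tuple of output_padding values.
    """
    pads = []
    for n_in, n_out in zip(in_hw, target_hw):
        # Size produced when output_padding == 0 (formula from the PyTorch docs)
        base = (n_in - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + 1
        extra = n_out - base
        # PyTorch requires 0 <= output_padding < max(stride, dilation)
        if not 0 <= extra < max(stride, dilation):
            raise ValueError(f"cannot reach size {n_out} from {n_in} with these settings")
        pads.append(extra)
    return tuple(pads)


# A 4x4 map upsampled with kernel_size=3, stride=2, padding=1 yields 7x7 by default;
# reaching 8x7 needs one extra row of output padding.
print(infer_output_padding((4, 4), (8, 7), kernel_size=3, stride=2, padding=1))  # (1, 0)
```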

Basically, I'd like to upsample a 2D feature volume to match the size of an earlier feature volume in order to implement skip-layer connections. However, the spatial dimensions of the initial input may not be divisible by the overall downsampling factor (a power of 2), so extra padding is required when upsampling the downsampled feature volume. Since the initial input's dimensions can vary, this padding needs to be inferred dynamically.
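One way to do this in eager PyTorch is the optional `output_size` argument of `nn.ConvTranspose2d.forward`, which computes `output_padding` from the requested size on each call. The sketch below is illustrative: the channel counts, kernel settings and the 37x50 input are assumptions chosen so that one dimension is odd. It only works when the target is reachable, i.e. the implied `output_padding` is smaller than the stride (or dilation).

```python
import torch
import torch.nn as nn

# Hypothetical encoder/decoder pair: one stride-2 downsampling step and its inverse
down = nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1)
up = nn.ConvTranspose2d(32, 16, kernel_size=3, stride=2, padding=1)

x = torch.randn(1, 16, 37, 50)        # input whose sides are not multiples of 2
y = down(x)                           # -> (1, 32, 19, 25)

# Without output_size this would give (1, 16, 37, 49); passing the skip tensor's
# spatial size lets the layer pick output_padding at run time.
z = up(y, output_size=list(x.shape[-2:]))

skip = torch.cat([x, z], dim=1)       # skip connection along the channel axis
print(z.shape, skip.shape)
```

If the target cannot be reached this way (e.g. after several downsampling steps with mismatched rounding), cropping or `F.pad` on the upsampled tensor is a common alternative.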