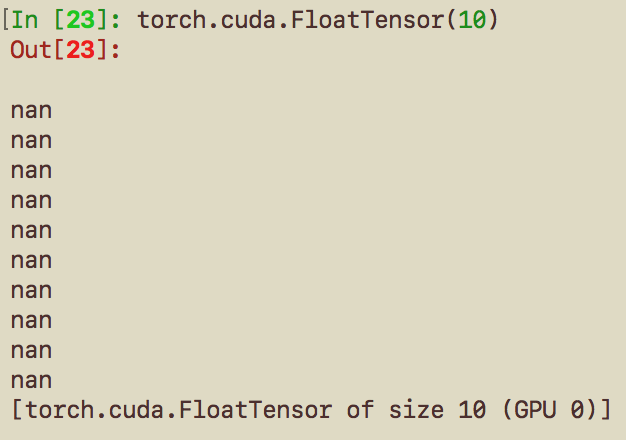

Hi there,

I just initialized a new FloatTensor like this:

`torch.cuda.FloatTensor(1)`

but the value I get back is NaN.

This doesn't always happen; it looks like a random event.

Has anyone run into the same thing? It has confused me for a long time.

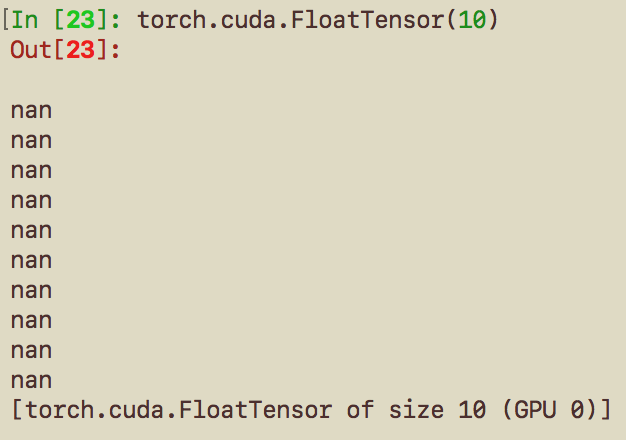

hi there,

I just init a new FloatTensor like

torch.cuda.FloatTensor(1),

but I receive nan.

This didn’t always happen, looks like a random event.

Did anyone meet same thing like this, it confused me for a long time.

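You didn't tell PyTorch what to put in the tensor, so it only allocated the memory without initializing its contents. Whatever bytes were already sitting in that memory (for example, left over from earlier allocations) are interpreted as floats, and some bit patterns decode as NaN. That is why it only happens some of the time.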
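To see why leftover bytes can show up as NaN, here is a small standard-library sketch that reinterprets 32-bit patterns as IEEE 754 single-precision floats. The helper names `float32_from_bits` and `is_nan_pattern` are hypothetical, chosen for illustration. A float32 is NaN whenever its 8 exponent bits are all ones and its 23 mantissa bits are not all zero, so 2 × (2²³ − 1) of the 2³² possible patterns are NaN. Real leftover memory is not uniformly random, so this only shows that NaN is an ordinary result of reading uninitialized floats, not how often it will occur.

```python
import struct


def float32_from_bits(bits: int) -> float:
    """Reinterpret a 32-bit unsigned integer pattern as an IEEE 754 float32."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def is_nan_pattern(bits: int) -> bool:
    """NaN: exponent bits all ones and mantissa bits not all zero."""
    exponent = (bits >> 23) & 0xFF
    mantissa = bits & 0x7FFFFF
    return exponent == 0xFF and mantissa != 0


# A few arbitrary bit patterns that could be sitting in reused memory.
for bits in (0x00000000, 0x3F800000, 0x7F800000, 0x7FC00000, 0xFFFFFFFF):
    value = float32_from_bits(bits)
    print(f"0x{bits:08X} -> {value} (NaN pattern: {is_nan_pattern(bits)})")
```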

To initialise a new FloatTensor full of zeros, do this:

`torch.cuda.FloatTensor(10).fill_(0)`
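In more recent PyTorch releases the same thing is usually written with the factory functions `torch.empty` and `torch.zeros`, which take explicit `dtype` and `device` arguments instead of going through the legacy `torch.cuda.FloatTensor` constructor. This is a minimal sketch assuming a PyTorch version that supports the `device` keyword; the CPU fallback is an addition so it also runs on machines without a GPU.

```python
import torch

# Assumption: fall back to the CPU so the sketch runs without a GPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

# Like torch.cuda.FloatTensor(10): memory is allocated but NOT initialized.
uninitialized = torch.empty(10, dtype=torch.float32, device=device)

# Allocates and fills with zeros in one call.
zeros = torch.zeros(10, dtype=torch.float32, device=device)

# An uninitialized tensor can also be zeroed in place afterwards.
uninitialized.zero_()
```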

To initialise a new FloatTensor full of random values drawn uniformly between 1.0 and 2.5, do this:

`torch.cuda.FloatTensor(10).uniform_(1.0, 2.5)`
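The same idea works with the factory functions. This sketch again assumes a recent PyTorch release and falls back to the CPU when no GPU is present. It shows two ways to get values drawn uniformly from [1.0, 2.5): filling an uninitialized tensor in place with `uniform_`, or rescaling the [0, 1) samples produced by `torch.rand`.

```python
import torch

# Assumption: fall back to the CPU so the sketch runs without a GPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
low, high = 1.0, 2.5

# Option 1: allocate uninitialized memory, then overwrite it in place
# with samples from U(low, high).
a = torch.empty(10, device=device).uniform_(low, high)

# Option 2: scale torch.rand's U[0, 1) samples into [low, high).
b = torch.rand(10, device=device) * (high - low) + low
```

Either way, every element is written before it is read, so no stale memory can leak through as NaN.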

Other options here