Hello!

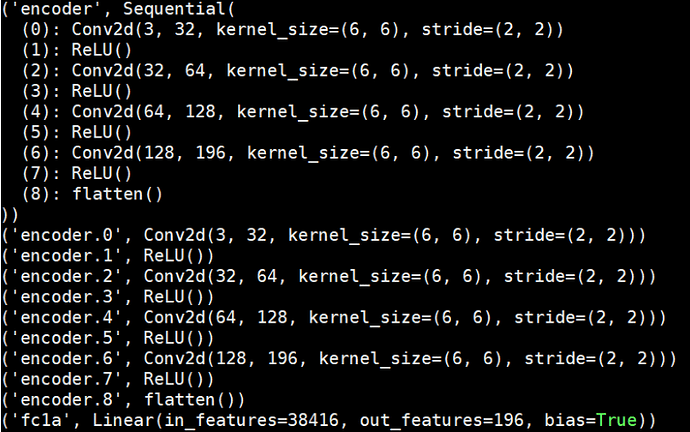

I’m working on an architecture where I need to initialise the weights of most of the `nn.Linear` and `nn.Conv2d` layers (and there are so many of them!), but not all of them.

I know I can do something like this to initialise the weights of all layers of a given type:

```python
import torch
import torch.nn as nn

for m in self.modules():
    if isinstance(m, (nn.Linear, nn.Conv2d)):
        torch.nn.init.xavier_uniform_(m.weight)  # the attribute is `weight`, not `weights`
    elif isinstance(m, nn.BatchNorm2d):
        pass  # (do something else)
```
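To check which layers a loop like this actually visits, it can help to list what the model yields. A minimal sketch, assuming a hypothetical `Net` with its conv layers inside an `nn.Sequential` (attribute name `features` is made up) and standalone `fc1`/`fc2`: `named_modules()` yields the same modules as `modules()` plus their qualified names, recursing into the `nn.Sequential` in registration order.

```python
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical stand-in for the real architecture."""

    def __init__(self):
        super().__init__()
        # Conv layers live inside an nn.Sequential, as in the question.
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, 3), nn.BatchNorm2d(8), nn.ReLU(),
            nn.Conv2d(8, 8, 3), nn.BatchNorm2d(8), nn.ReLU(),
            nn.Conv2d(8, 16, 3), nn.ReLU(),
            nn.Conv2d(16, 16, 3), nn.ReLU(),
        )
        # Linear layers are registered directly on the model.
        self.fc1 = nn.Linear(16, 32)
        self.fc2 = nn.Linear(32, 10)


net = Net()
# Keep only the layer types the init loop would touch, in visiting order.
visit_order = [name for name, m in net.named_modules()
               if isinstance(m, (nn.Linear, nn.Conv2d))]
print(visit_order)
# → ['features.0', 'features.3', 'features.6', 'features.8', 'fc1', 'fc2']
```

Because the order follows registration, "the first 3 conv layers" is well defined: here they would be `features.0`, `features.3` and `features.6`.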

How do I add exceptions, e.g. “initialise all conv layers with Xavier except for the first 3”? Is it possible to write something like the following?

```python
if isinstance(m, nn.Linear) and m != self.fc1:
    torch.nn.init ....
```

The linear layers are defined on their own (e.g. `self.fc1 = nn.Linear(...)`), while the conv layers are part of an `nn.Sequential`.
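One possible approach, sketched under assumptions: `nn.Module` does not override `__eq__` or `__hash__`, so modules compare by identity. A check like `m is not self.fc1` therefore works, and so does collecting the layers to skip in a set. The class `Net`, the attribute `features` and the method `init_weights` below are hypothetical names for illustration only.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical architecture: convs in an nn.Sequential, standalone linears."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, 3), nn.ReLU(),
            nn.Conv2d(8, 8, 3), nn.ReLU(),
            nn.Conv2d(8, 16, 3), nn.ReLU(),
            nn.Conv2d(16, 16, 3), nn.ReLU(),
        )
        self.fc1 = nn.Linear(16, 32)
        self.fc2 = nn.Linear(32, 10)
        self.init_weights()

    def init_weights(self):
        # Conv layers in registration order; the first 3 are the exceptions.
        convs = [m for m in self.features if isinstance(m, nn.Conv2d)]
        # Modules hash by identity, so a plain set of modules works here.
        skip = set(convs[:3]) | {self.fc1}
        for m in self.modules():
            if m in skip:
                continue  # keep PyTorch's default initialisation for these
            if isinstance(m, (nn.Linear, nn.Conv2d)):
                torch.nn.init.xavier_uniform_(m.weight)
```

A name-based variant would loop over `self.named_modules()` and skip names such as `"fc1"` or `"features.0"`; the identity-based set avoids hard-coding indices that change whenever the `nn.Sequential` is edited.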

Thanks!

NK