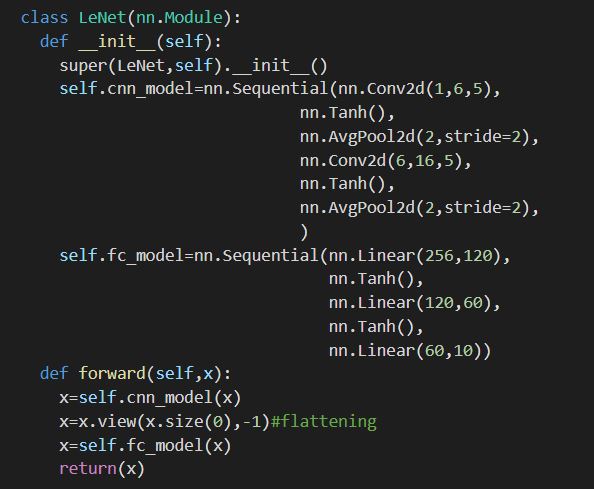

How can I implement weight initialization techniques like Xavier or He when using nn.Sequential? For example, for the model in the attached image.

You could define a method to initialize the parameters for each layer via e.g.:

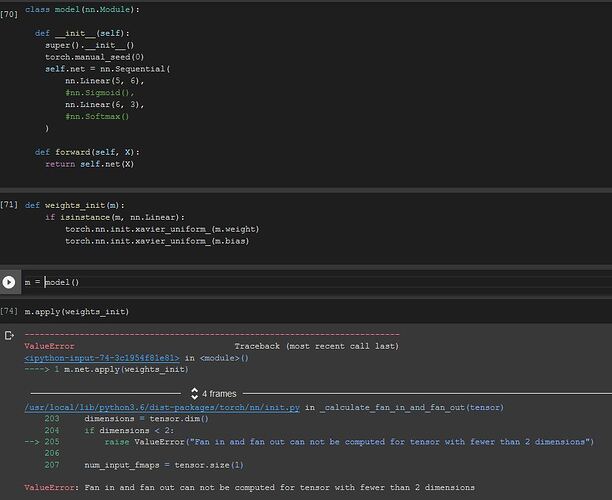

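    import torch
    import torch.nn as nn

    def weights_init(m):
        # Only touch conv layers; all other modules keep their default init
        if isinstance(m, nn.Conv2d):
            torch.nn.init.xavier_uniform_(m.weight)
            if m.bias is not None:
                torch.nn.init.zeros_(m.bias)

    # net is your nn.Sequential model; apply() calls weights_init on every submodule
    net.apply(weights_init)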

Inside this method, you could add conditions for each layer and use the appropriate weight init method.
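As a rough sketch of what "conditions for each layer" could look like, the snippet below uses He (Kaiming) init for conv layers and Xavier init for linear layers. The small `net` here is a made-up stand-in for your model, and the choice of which init goes with which layer type is only an assumption for illustration:

```python
import math

import torch
import torch.nn as nn

def weights_init(m):
    if isinstance(m, nn.Conv2d):
        # He (Kaiming) init, commonly paired with ReLU activations
        nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
        if m.bias is not None:
            nn.init.zeros_(m.bias)
    elif isinstance(m, nn.Linear):
        # Xavier (Glorot) init for the fully connected layer
        nn.init.xavier_uniform_(m.weight)
        if m.bias is not None:
            nn.init.zeros_(m.bias)

# Hypothetical model, only for illustration (expects 3x4x4 inputs)
net = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(8 * 4 * 4, 10),
)

# apply() visits every submodule, so each layer hits the matching branch
net.apply(weights_init)
```

Modules that match neither condition (here `nn.ReLU` and `nn.Flatten`) are simply skipped.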

Sorry for the misleading code, but you cannot use xavier_uniform_ on 1-dimensional tensors, which would be the case for the bias parameter in the linear layers.

I’ve edited the previous example.
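To illustrate the restriction, here is a small sketch using a throwaway `nn.Linear` layer (the layer sizes are arbitrary). As far as I know, recent PyTorch versions raise a `ValueError` because fan-in and fan-out cannot be computed for a tensor with fewer than 2 dimensions:

```python
import torch
import torch.nn as nn

layer = nn.Linear(4, 2)  # weight has shape (2, 4), bias has shape (2,)

try:
    # The bias is 1-dimensional, so fan_in/fan_out are undefined
    nn.init.xavier_uniform_(layer.bias)
    failed = False
except ValueError:
    failed = True

# Xavier for the 2-D weight, a constant init for the 1-D bias
nn.init.xavier_uniform_(layer.weight)
nn.init.zeros_(layer.bias)
```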

How can this be done for a single layer? For example, in my nn.Sequential I want to initialise the weights of the first Conv2d with Xavier uniform and leave the other Conv2d layers at their default values.

You could call the .apply method on this layer only. Here is a simple example:

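    import torch
    import torch.nn as nn
    from torchvision import models

    def weights_init(m):
        if isinstance(m, nn.Conv2d):
            torch.nn.init.xavier_uniform_(m.weight)
            # `if m.bias:` would fail on a multi-element tensor; check for None instead
            if m.bias is not None:
                torch.nn.init.zeros_(m.bias)

    model = models.resnet50()
    # Only this one conv layer is re-initialized; it has no bias in resnet50
    model.layer4[1].conv2.apply(weights_init)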
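For an nn.Sequential specifically, the same idea might look like the sketch below, since layers can be indexed by position. The three-layer `model` is hypothetical; the clone of the second conv's weight is only there to show that it stays untouched:

```python
import torch
import torch.nn as nn

# Hypothetical model, only for illustration
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3),
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3),
)

# Keep a copy of the second conv's weight to compare afterwards
second_before = model[2].weight.detach().clone()

# Index into the container and initialize only the first Conv2d
first = model[0]
nn.init.xavier_uniform_(first.weight)
if first.bias is not None:
    nn.init.zeros_(first.bias)

# The second conv still has its default initialization
unchanged = torch.equal(model[2].weight, second_before)
```

Calling `model[0].apply(weights_init)` with an init function like the one above should have the same effect for a single layer.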