I am trying to prune a custom-built LeNet model. I first train the model for 15 epochs on MNIST. Then I try to remove the filters with the smallest L1 norm. To do this, I copy the weights of the filters I want to retain into a list, create a new LeNet model with fewer filters, and then try to copy the trained weights into this reduced model.
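
To make the selection step concrete, here is a minimal sketch of how the filters with the largest L1 norm could be picked. The helper `filters_to_keep` and the variables `keep_idx`, `kept_weights`, and `kept_bias` are hypothetical names used only for illustration, and a freshly initialized layer stands in for the trained `conv1`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


def filters_to_keep(conv: nn.Conv2d, n_keep: int) -> torch.Tensor:
    """Return the sorted indices of the n_keep filters with the largest L1 norm."""
    # Weight shape is (out_channels, in_channels, kH, kW);
    # summing |w| over every dimension except 0 gives one L1 norm per filter.
    l1 = conv.weight.detach().abs().sum(dim=(1, 2, 3))
    keep = torch.topk(l1, n_keep).indices
    return torch.sort(keep).values


# Stand-in for the trained first layer (assumption: 6 filters, 5x5 kernels).
conv = nn.Conv2d(1, 6, 5)

keep_idx = filters_to_keep(conv, 3)
# Detached copies of the retained filters and their biases.
kept_weights = conv.weight.detach()[keep_idx].clone()
kept_bias = conv.bias.detach()[keep_idx].clone()
```

Keeping the indices (not only the weight values) is useful because the next layer needs the same indices to drop its matching input channels.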

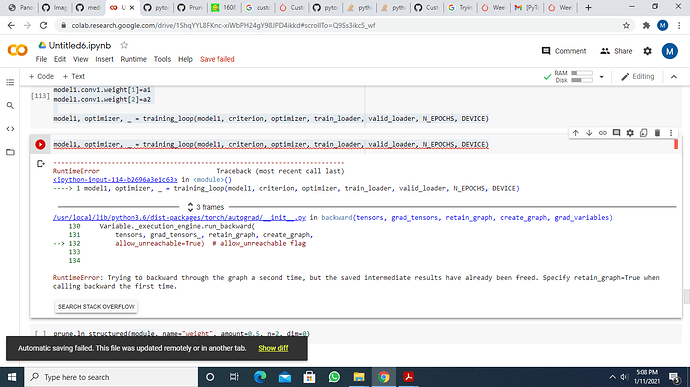

But I am running into multiple errors, such as “Trying to backward through the graph a second time, but the saved intermediate results have already been freed. Specify retain_graph=True when calling backward the first time.”

The code I am trying is given below, and I have also pasted a screenshot of the error. `weights_idx` is a list that contains the weight matrices of the filters that I copied from the trained model.

Could someone please help? Thanks in advance.

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    # DEVICE, weights_idx, criterion, optimizer, train_loader, valid_loader,
    # N_EPOCHS and training_loop are defined elsewhere in my script.

    class LeNet(nn.Module):
        def __init__(self, layer1_outchannels=6, layer2_outchannels=16):
            super(LeNet, self).__init__()
            # 1 input image channel, 6 output channels by default, 5x5 square conv kernel
            self.conv1 = nn.Conv2d(1, layer1_outchannels, 5)
            self.conv2 = nn.Conv2d(layer1_outchannels, layer2_outchannels, 5)
            self.fc1 = nn.Linear(layer2_outchannels * 5 * 5, 120)  # 5x5 image dimension
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, 10)

        def forward(self, x):
            x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
            x = F.max_pool2d(F.relu(self.conv2(x)), 2)
            # Flatten everything except the batch dimension
            x = x.view(-1, int(x.nelement() / x.shape[0]))
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            logits = self.fc3(x)
            probs = F.softmax(logits, dim=1)
            return logits, probs

    # New, smaller model: 3 filters in conv1 instead of 6
    model1 = LeNet(3, 16).to(DEVICE)

    # Retained filter weights copied from the trained model
    a = torch.tensor(weights_idx[0]).requires_grad_(True)
    a1 = torch.tensor(weights_idx[1]).requires_grad_(True)
    a2 = torch.tensor(weights_idx[2]).requires_grad_(True)

    # Assign the retained filters to the new model's first layer
    model1.conv1.weight[0] = a
    model1.conv1.weight[1] = a1
    model1.conv1.weight[2] = a2

    model1, optimizer, _ = training_loop(model1, criterion, optimizer, train_loader, valid_loader, N_EPOCHS, DEVICE)
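
For comparison, one common way to copy retained weights into a smaller layer is an in-place `copy_` under `torch.no_grad()`, so that the copy itself is not tracked by autograd and the parameters stay ordinary leaf tensors. The sketch below is only an illustration under assumptions: the layers are freshly initialized stand-ins rather than my trained model, `keep_idx` is a hypothetical set of retained filter indices, and SGD is a placeholder optimizer. I cannot say for certain that this is what resolves the error above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Stand-ins for the trained layers (6 conv1 filters) and the pruned layers (3 filters).
old_conv1, old_conv2 = nn.Conv2d(1, 6, 5), nn.Conv2d(6, 16, 5)
new_conv1, new_conv2 = nn.Conv2d(1, 3, 5), nn.Conv2d(3, 16, 5)

# Hypothetical indices of the conv1 filters that are retained.
keep_idx = torch.tensor([0, 2, 5])

with torch.no_grad():  # the copies below are not recorded by autograd
    new_conv1.weight.copy_(old_conv1.weight[keep_idx])
    new_conv1.bias.copy_(old_conv1.bias[keep_idx])
    # conv2 has to drop the input channels that were fed by the removed conv1 filters.
    new_conv2.weight.copy_(old_conv2.weight[:, keep_idx])
    new_conv2.bias.copy_(old_conv2.bias)

# An optimizer holds references to specific parameter tensors,
# so a new one is created for the new layers (placeholder: SGD, lr=0.01).
params = list(new_conv1.parameters()) + list(new_conv2.parameters())
optimizer = torch.optim.SGD(params, lr=0.01)
```

In this sketch the new parameters are never replaced or indexed into outside `no_grad()`, and the optimizer is built from the new parameters rather than reused from the original model.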