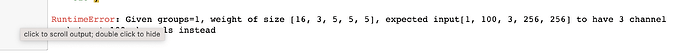

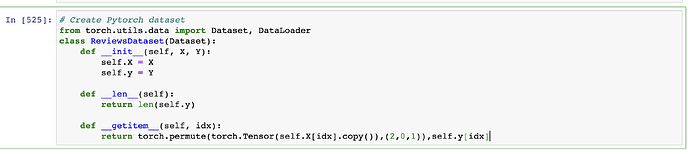

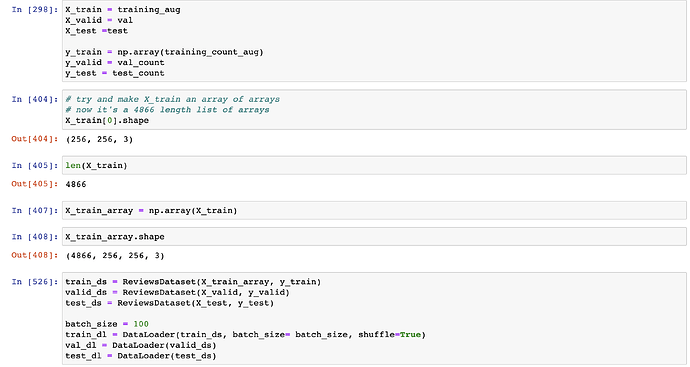

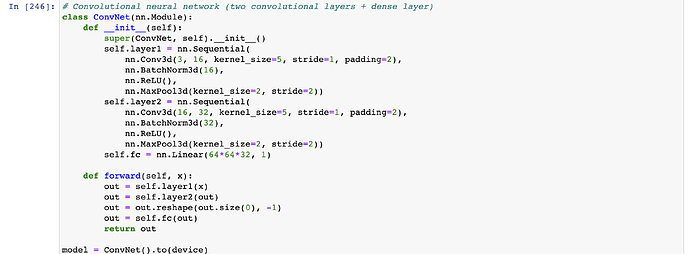

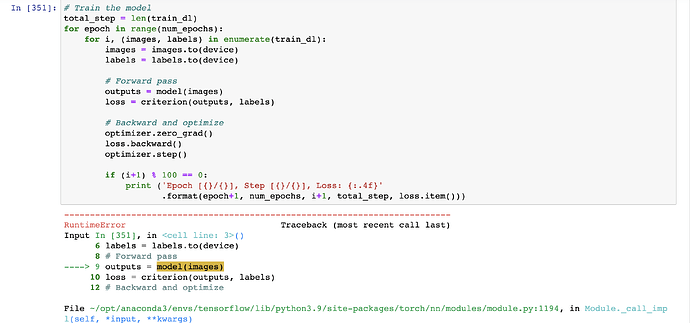

The aim is to create a CNN in PyTorch for RGB (256x256) images. I am performing a regression task. The images are stored as NumPy arrays. I have written a custom PyTorch dataset by subclassing torch.utils.data.Dataset that takes in the images and the target values. The dataset is loaded in batches of 100 using DataLoader, so the inputs should be of the form [100,3,256,256]. But the error says the input is of the form [1,100,3,256,256]. I don’t know where I have gone wrong.
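For reference, here is a minimal sketch of the kind of setup described above, not the actual code from the screenshots. The class name `ImageRegressionDataset`, the random data, and the reduced image size are assumptions made for illustration, and the arrays are assumed to be stored channel-first as (N, 3, H, W). With a dataset that returns one sample per index, a DataLoader with `batch_size=100` yields the expected 4D batch.

```python
import numpy as np
import torch
from torch.utils.data import Dataset, DataLoader

SIZE = 32  # assumption: reduced for a quick run; the post uses 256


class ImageRegressionDataset(Dataset):
    """Hypothetical dataset wrapping channel-first numpy images and scalar targets."""

    def __init__(self, images, targets):
        # images: (N, 3, H, W), targets: (N,)
        self.images = torch.from_numpy(images).float()
        self.targets = torch.from_numpy(targets).float()

    def __len__(self):
        return self.images.shape[0]

    def __getitem__(self, idx):
        # Return ONE sample: image of shape (3, H, W) and a scalar target.
        return self.images[idx], self.targets[idx]


# Random stand-in data (assumption, not the poster's data).
images = np.random.rand(200, 3, SIZE, SIZE).astype(np.float32)
targets = np.random.rand(200).astype(np.float32)

loader = DataLoader(ImageRegressionDataset(images, targets), batch_size=100)
batch_images, batch_targets = next(iter(loader))
print(batch_images.shape)   # batch dimension first, then channels, height, width
print(batch_targets.shape)
```

If `__getitem__` returned an already batched array instead of a single sample, or if the batch were wrapped in an extra list or dimension before reaching the model, the DataLoader output would gain an additional axis.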

Calling torch.squeeze on the images should work around the issue, but I recommend printing the shape at each step of the data loading to see where the extra dimension is added.
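A rough sketch of that suggestion, assuming a batch that arrives with a stray leading dimension. The helper `log_shape` and the zero-filled stand-in tensor are hypothetical, and the image size is reduced from 256 to keep the example light.

```python
import torch


def log_shape(step, tensor):
    """Print the shape at a named step and return the tensor unchanged."""
    print(f"{step}: {tuple(tensor.shape)}")
    return tensor


# Stand-in for a batch with an unwanted leading dimension (assumption).
batch = torch.zeros(1, 100, 3, 32, 32)
log_shape("from loader", batch)

# Remove only the leading dimension, and only if it really has size 1.
if batch.dim() == 5 and batch.size(0) == 1:
    batch = batch.squeeze(0)
log_shape("after squeeze", batch)
```

Passing the dimension explicitly (`squeeze(0)`) is the safer choice here: `squeeze()` with no argument removes every size-1 dimension, so it would also drop the batch dimension whenever a batch happens to contain a single sample. Calling the same helper on a single sample from the dataset and on the tensor just before the model's forward pass should show which step introduces the extra axis.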