Hi @ptrblck. Could you help me with these issue?

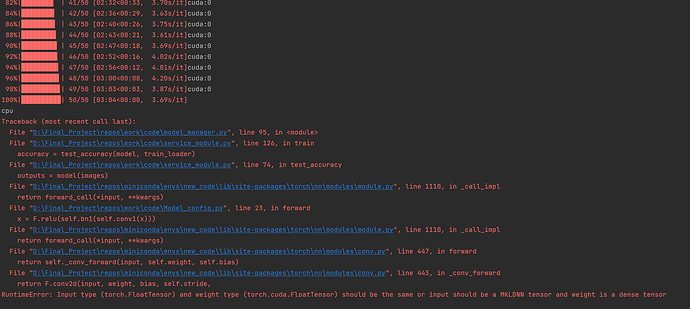

I’ve moved data, model and loss func to cuda, but it seems the input tensor is not switching to cuda

The model is a CNN with a embedded quantum circuit.

RuntimeError : Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same or input should be a MKLDNN tensor and weight is a dense tensor

train_data = torchvision.datasets.ImageFolder(path_to_train', transform=transforms.Compose([transforms.ToTensor()]))

test_data = torchvision.datasets.ImageFolder('path_to_test', transform=transforms.Compose([transforms.ToTensor()]))

train_loader = DataLoader(train_data, shuffle=True, batch_size=1)

test_loader = DataLoader(test_data, shuffle=True, batch_size=1)

for data, target in train_loader:

data = data.to(device)

target = target.to(device)

for data, target in test_loader:

data = data.to(device)

target = target.to(device)

class Net(Module):

def __init__(self, qnn):

super().__init__()

self.conv1 = Conv2d(3, 1, kernel_size=5)

self.conv2 = Conv2d(1, 1, kernel_size=5)

self.dropout = Dropout2d()

self.fc1 = Linear(3844, 64)

self.fc2 = Linear(64, 2) # 2-dimensional input to QNN

self.qnn = TorchConnector(qnn) # Apply torch connector, weights chosen

# uniformly at random from interval [-1,1].

self.fc3 = Linear(1, 1) # 1-dimensional output from QNN

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.max_pool2d(x, 2)

x = F.relu(self.conv2(x))

x = F.max_pool2d(x, 2)

x = self.dropout(x)

x = x.view(x.shape[0], -1))

x = F.relu(self.fc1(x))

x = self.fc2(x)

x = self.qnn(x) # apply QNN

x = self.fc3(x)

return cat((x, 1 - x), -1)

model = Net(qnn)

model = model.to('cuda')

# Define model, optimizer, and loss function

optimizer = optim.Adam(model.parameters(), lr=0.001)

loss_func = NLLLoss().to('cuda')

# Start training

epochs = 10 # Set number of epochs

loss_list = [] # Store loss history

model.train() # Set model to training mode

for epoch in range(epochs):

total_loss = []

for batch_idx, (data, target) in enumerate(train_loader):

optimizer.zero_grad(set_to_none=True) # Initialize gradient

print(data.type, target.type)

output = model(data) # Forward pass

loss = loss_func(output, target) # Calculate loss

loss.backward() # Backward pass

optimizer.step() # Optimize weights

total_loss.append(loss.item()) # Store loss

loss_list.append(sum(total_loss) / len(total_loss))

print("Training [{:.0f}%]\tLoss: {:.4f}".format(100.0 * (epoch + 1) / epochs, loss_list[-1]))