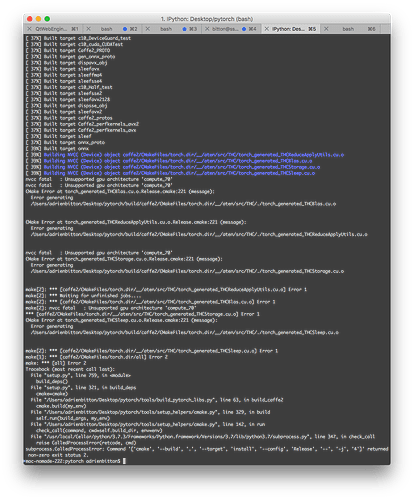

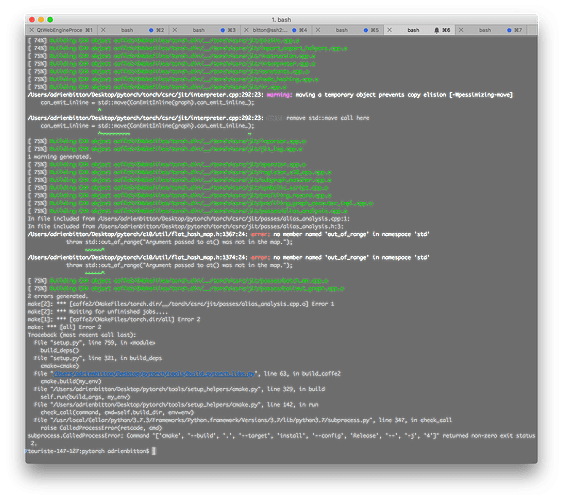

I have now re-installed the dependencies and re-cloned the PyTorch source, but compiling from scratch leads to different errors…

The build doesn’t show the usual percentage progress. Instead it goes straight to `Building CXX object caffe2/CMakeFiles` steps, and it stops at [1970/3136].

I don’t know why this new step is happening or why it fails. Any hints or known workarounds? The only suggestions I found are setting `BUILD_SHARED_LIBS=OFF` or `USE_NINJA=OFF`; I am trying that now…

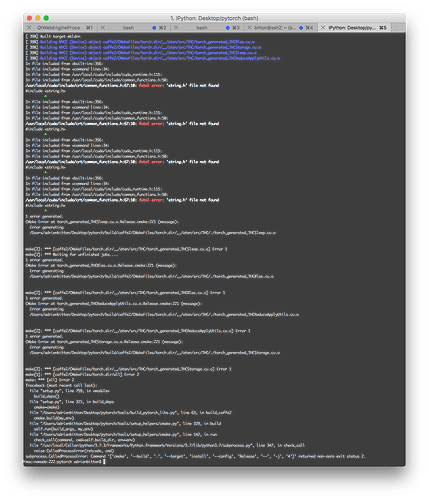

```
[1909/3136] Building CXX object caffe2/CMakeFiles/torch.dir/sgd/learning_rate_op.cc.o
In file included from ../caffe2/sgd/learning_rate_op.cc:1:
In file included from ../caffe2/sgd/learning_rate_op.h:6:
In file included from ../caffe2/core/context.h:9:
In file included from ../caffe2/core/allocator.h:3:
In file included from ../c10/core/CPUAllocator.h:7:
../c10/util/Logging.h:191:29: warning: comparison of integers of different signs: 'const unsigned long' and 'const int' [-Wsign-compare]
BINARY_COMP_HELPER(Greater, >)
~~~~~~~~~~~~~~~~~~~~~~~~~~~~^~
../c10/util/Logging.h:184:11: note: expanded from macro 'BINARY_COMP_HELPER'
if (x op y) { \
~ ^ ~
../caffe2/sgd/learning_rate_op.h:143:7: note: in instantiation of function template specialization 'c10::enforce_detail::Greater<unsigned long, int>' requested here
CAFFE_ENFORCE_GT(
^
../c10/util/Logging.h:242:27: note: expanded from macro 'CAFFE_ENFORCE_GT'
CAFFE_ENFORCE_THAT_IMPL(Greater((x), (y)), #x " > " #y, __VA_ARGS__)
^
../caffe2/sgd/learning_rate_op.h:23:20: note: in instantiation of member function 'caffe2::LearningRateOp<float, caffe2::CPUContext>::createLearningRateFunctor' requested here
functor_.reset(createLearningRateFunctor(policy));
^
../c10/util/Registry.h:184:30: note: in instantiation of member function 'caffe2::LearningRateOp<float, caffe2::CPUContext>::LearningRateOp' requested here
return ObjectPtrType(new DerivedType(args...));
^
../caffe2/sgd/learning_rate_op.cc:4:1: note: in instantiation of function template specialization 'c10::Registerer<std::__1::basic_string<char>, std::__1::unique_ptr<caffe2::OperatorBase, std::__1::default_delete<caffe2::OperatorBase> >, const caffe2::OperatorDef &, caffe2::Workspace *>::DefaultCreator<caffe2::LearningRateOp<float, caffe2::CPUContext> >' requested here
REGISTER_CPU_OPERATOR(LearningRate, LearningRateOp<float, CPUContext>);
^
../caffe2/core/operator.h:1396:3: note: expanded from macro 'REGISTER_CPU_OPERATOR'
C10_REGISTER_CLASS(CPUOperatorRegistry, name, __VA_ARGS__)
^
../c10/util/Registry.h:279:3: note: expanded from macro 'C10_REGISTER_CLASS'
C10_REGISTER_TYPED_CLASS(RegistryName, #key, __VA_ARGS__)
^
../c10/util/Registry.h:238:33: note: expanded from macro 'C10_REGISTER_TYPED_CLASS'
Registerer##RegistryName::DefaultCreator<__VA_ARGS__>, \
^
In file included from ../caffe2/sgd/learning_rate_op.cc:1:
In file included from ../caffe2/sgd/learning_rate_op.h:8:
../caffe2/sgd/learning_rate_functors.h:279:11: warning: using integer absolute value function 'abs' when argument is of floating point type [-Wabsolute-value]
T x = abs(static_cast<T>(iter) / stepsize_ - 2 * cycle + 1);
^
../caffe2/sgd/learning_rate_functors.h:268:3: note: in instantiation of member function 'caffe2::CyclicalLearningRate<float>::operator()' requested here
CyclicalLearningRate(
^
../caffe2/sgd/learning_rate_op.h:179:18: note: in instantiation of member function 'caffe2::CyclicalLearningRate<float>::CyclicalLearningRate' requested here
return new CyclicalLearningRate<T>(base_lr_, max_lr, stepsize, decay);
^
../caffe2/sgd/learning_rate_op.h:23:20: note: in instantiation of member function 'caffe2::LearningRateOp<float, caffe2::CPUContext>::createLearningRateFunctor' requested here
functor_.reset(createLearningRateFunctor(policy));
^
../c10/util/Registry.h:184:30: note: in instantiation of member function 'caffe2::LearningRateOp<float, caffe2::CPUContext>::LearningRateOp' requested here
return ObjectPtrType(new DerivedType(args...));
^
../caffe2/sgd/learning_rate_op.cc:4:1: note: in instantiation of function template specialization 'c10::Registerer<std::__1::basic_string<char>, std::__1::unique_ptr<caffe2::OperatorBase, std::__1::default_delete<caffe2::OperatorBase> >, const caffe2::OperatorDef &, caffe2::Workspace *>::DefaultCreator<caffe2::LearningRateOp<float, caffe2::CPUContext> >' requested here
REGISTER_CPU_OPERATOR(LearningRate, LearningRateOp<float, CPUContext>);
^
../caffe2/core/operator.h:1396:3: note: expanded from macro 'REGISTER_CPU_OPERATOR'
C10_REGISTER_CLASS(CPUOperatorRegistry, name, __VA_ARGS__)
^
../c10/util/Registry.h:279:3: note: expanded from macro 'C10_REGISTER_CLASS'
C10_REGISTER_TYPED_CLASS(RegistryName, #key, __VA_ARGS__)
^
../c10/util/Registry.h:238:33: note: expanded from macro 'C10_REGISTER_TYPED_CLASS'
Registerer##RegistryName::DefaultCreator<__VA_ARGS__>, \
^
../caffe2/sgd/learning_rate_functors.h:279:11: note: use function 'std::abs' instead
T x = abs(static_cast<T>(iter) / stepsize_ - 2 * cycle + 1);
^~~
std::abs
../caffe2/sgd/learning_rate_functors.h:281:12: warning: using integer absolute value function 'abs' when argument is of floating point type [-Wabsolute-value]
(T(abs(max_lr_)) / T(abs(base_lr_)) - 1) * std::max(T(0.0), (1 - x)) *
^
../caffe2/sgd/learning_rate_functors.h:281:12: note: use function 'std::abs' instead
(T(abs(max_lr_)) / T(abs(base_lr_)) - 1) * std::max(T(0.0), (1 - x)) *
^~~
std::abs
../caffe2/sgd/learning_rate_functors.h:281:30: warning: using integer absolute value function 'abs' when argument is of floating point type [-Wabsolute-value]
(T(abs(max_lr_)) / T(abs(base_lr_)) - 1) * std::max(T(0.0), (1 - x)) *
^
../caffe2/sgd/learning_rate_functors.h:281:30: note: use function 'std::abs' instead
(T(abs(max_lr_)) / T(abs(base_lr_)) - 1) * std::max(T(0.0), (1 - x)) *
^~~
std::abs
4 warnings generated.
```
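The `-Wabsolute-value` warnings above are about overload resolution: in that translation unit the unqualified `abs` resolved to the C function `int abs(int)`, which converts a `float` argument to `int` and drops the fractional part, whereas `std::abs` from `<cmath>` has floating-point overloads. The standalone sketch below is not PyTorch code; `integer_abs` and `float_abs` are hypothetical names, and the integer path is simulated with an explicit cast so the result does not depend on which `abs` overloads a particular standard library exposes in the global namespace.

```cpp
#include <cmath>    // std::abs(float), std::abs(double)
#include <cstdlib>  // std::abs(int)

// Simulates what clang warns about: when `abs` resolves to the C function
// int abs(int), the float argument is first converted to int (truncated toward zero).
float integer_abs(float x) {
  return static_cast<float>(std::abs(static_cast<int>(x)));
}

// The fix clang suggests: std::abs selects the floating-point overload.
float float_abs(float x) {
  return std::abs(x);
}
```

For example, `integer_abs(-0.75f)` yields `0` while `float_abs(-0.75f)` yields `0.75`. Like the other diagnostics in this log, these are warnings only; the build has no `-Werror`, so they do not stop it.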

```
[1914/3136] Building CXX object caffe2/CMakeFiles/torch.dir/share/contrib/nnpack/conv_op.cc.o
../caffe2/share/contrib/nnpack/conv_op.cc:183:16: warning: unused variable 'output_channels' [-Wunused-variable]
const size_t output_channels = Y->dim32(1);
^
../caffe2/share/contrib/nnpack/conv_op.cc:181:16: warning: unused variable 'batch_size' [-Wunused-variable]
const size_t batch_size = X.dim32(0);
^
../caffe2/share/contrib/nnpack/conv_op.cc:155:13: warning: unused variable 'N' [-Wunused-variable]
const int N = X.dim32(0), C = X.dim32(1), H = X.dim32(2), W = X.dim32(3);
^
../caffe2/share/contrib/nnpack/conv_op.cc:182:16: warning: unused variable 'input_channels' [-Wunused-variable]
const size_t input_channels = X.dim32(1);
^
../caffe2/share/contrib/nnpack/conv_op.cc:175:27: warning: comparison of integers of different signs: 'size_type' (aka 'unsigned long') and 'const int' [-Wsign-compare]
if (dummyBias_.size() != M) {
~~~~~~~~~~~~~~~~~ ^ ~
In file included from ../caffe2/share/contrib/nnpack/conv_op.cc:7:
In file included from ../caffe2/core/context.h:9:
In file included from ../caffe2/core/allocator.h:3:
In file included from ../c10/core/CPUAllocator.h:7:
../c10/util/Logging.h:189:28: warning: comparison of integers of different signs: 'const unsigned long' and 'const int' [-Wsign-compare]
BINARY_COMP_HELPER(Equals, ==)
~~~~~~~~~~~~~~~~~~~~~~~~~~~^~~
../c10/util/Logging.h:184:11: note: expanded from macro 'BINARY_COMP_HELPER'
if (x op y) { \
~ ^ ~
../caffe2/share/contrib/nnpack/conv_op.cc:274:11: note: in instantiation of function template specialization 'c10::enforce_detail::Equals<unsigned long, int>' requested here
CAFFE_ENFORCE_EQ(transformedFilters_.size(), group_);
^
../c10/util/Logging.h:232:27: note: expanded from macro 'CAFFE_ENFORCE_EQ'
CAFFE_ENFORCE_THAT_IMPL(Equals((x), (y)), #x " == " #y, __VA_ARGS__)
^
6 warnings generated.
```

```
[1915/3136] Building CXX object caffe2/CMakeFiles/torch.dir/transforms/common_subexpression_elimination.cc.o
../caffe2/transforms/common_subexpression_elimination.cc:104:23: warning: comparison of integers of different signs: 'int' and 'size_type' (aka 'unsigned long') [-Wsign-compare]
for (int i = 0; i < subgraph.size(); i++) {
~ ^ ~~~~~~~~~~~~~~~
1 warning generated.
```
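Most of the `-Wsign-compare` warnings in this log share one pattern: a signed `int` loop counter compared with a container's unsigned `size()`. A minimal sketch of the two usual rewrites, using hypothetical `sum_indexed` and `sum_range` helpers over a `std::vector<int>` (not code from the PyTorch tree):

```cpp
#include <cstddef>
#include <vector>

// Pattern flagged by -Wsign-compare:
//   for (int i = 0; i < v.size(); i++) { ... }

// Rewrite 1: index with an unsigned type so both sides of `<` are unsigned.
long long sum_indexed(const std::vector<int>& v) {
  long long total = 0;
  for (std::size_t i = 0; i < v.size(); ++i) {
    total += v[i];
  }
  return total;
}

// Rewrite 2: when the index itself is not needed, a range-based for loop
// avoids the comparison entirely.
long long sum_range(const std::vector<int>& v) {
  long long total = 0;
  for (int x : v) {
    total += x;
  }
  return total;
}
```

Both helpers return `6` for `{1, 2, 3}` and `0` for an empty vector.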

```
[1918/3136] Building CXX object caffe2/CMakeFiles/torch.dir/transforms/pattern_net_transform.cc.o
../caffe2/transforms/pattern_net_transform.cc:117:30: warning: comparison of integers of different signs: 'value_type' (aka 'int') and 'size_type' (aka 'unsigned long') [-Wsign-compare]
if (inverse_ops_[parent] < subgraph.size() &&
~~~~~~~~~~~~~~~~~~~~ ^ ~~~~~~~~~~~~~~~
../caffe2/transforms/pattern_net_transform.cc:125:29: warning: comparison of integers of different signs: 'value_type' (aka 'int') and 'size_type' (aka 'unsigned long') [-Wsign-compare]
if (inverse_ops_[child] < subgraph.size() &&
~~~~~~~~~~~~~~~~~~~ ^ ~~~~~~~~~~~~~~~
../caffe2/transforms/pattern_net_transform.cc:153:21: warning: comparison of integers of different signs: 'int' and 'size_type' (aka 'unsigned long') [-Wsign-compare]
for (int i = 0; i < match.size(); i++) {
~ ^ ~~~~~~~~~~~~
../caffe2/transforms/pattern_net_transform.cc:182:21: warning: comparison of integers of different signs: 'int' and 'size_t' (aka 'unsigned long') [-Wsign-compare]
for (int i = 0; i < r_.size(); i++) {
~ ^ ~~~~~~~~~
4 warnings generated.
```

```
[1920/3136] Building CXX object caffe2/CMakeFiles/torch.dir/__/torch/csrc/autograd/generated/Functions.cpp.o
../torch/csrc/autograd/generated/Functions.cpp:401:8: warning: unused function 'cumprod_backward' [-Wunused-function]
Tensor cumprod_backward(const Tensor &grad, const Tensor &input, int64_t dim, optional<ScalarType> dtype) {
^
1 warning generated.
```

```
[1941/3136] Building CXX object caffe2/CMakeFiles/torch.dir/__/torch/csrc/autograd/profiler.cpp.o
../torch/csrc/autograd/profiler.cpp:72:26: warning: comparison of integers of different signs: 'int' and 'size_type' (aka 'unsigned long') [-Wsign-compare]
for(int i = 0; i < shapes.size(); i++) {
~ ^ ~~~~~~~~~~~~~
../torch/csrc/autograd/profiler.cpp:75:34: warning: comparison of integers of different signs: 'int' and 'size_type' (aka 'unsigned long') [-Wsign-compare]
for(int dim = 0; dim < shapes[i].size(); dim++) {
~~~ ^ ~~~~~~~~~~~~~~~~
../torch/csrc/autograd/profiler.cpp:77:22: warning: comparison of integers of different signs: 'int' and 'unsigned long' [-Wsign-compare]
if(dim < shapes[i].size() - 1)
~~~ ^ ~~~~~~~~~~~~~~~~~~~~
../torch/csrc/autograd/profiler.cpp:84:16: warning: comparison of integers of different signs: 'int' and 'unsigned long' [-Wsign-compare]
if(i < shapes.size() - 1)
~ ^ ~~~~~~~~~~~~~~~~~
4 warnings generated.
```
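The profiler.cpp warnings come from printing a separated list by comparing an `int` index with `size() - 1`. Because `size()` is unsigned, `size() - 1` wraps around to a huge value when the container is empty, so a common alternative is to write the separator before every element except the first. A minimal sketch of that pattern with a hypothetical `join_shape` helper (not the profiler's code):

```cpp
#include <cstddef>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: format a shape such as {2, 3, 4} as "[2, 3, 4]".
// Emitting the separator before every element except the first avoids
// comparing an index with size() - 1, which wraps around when size() == 0.
std::string join_shape(const std::vector<std::int64_t>& shape) {
  std::ostringstream out;
  out << '[';
  for (std::size_t i = 0; i < shape.size(); ++i) {
    if (i != 0) {
      out << ", ";
    }
    out << shape[i];
  }
  out << ']';
  return out.str();
}
```

`join_shape({2, 3, 4})` returns `"[2, 3, 4]"`, and an empty shape gives `"[]"`.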

```
[1958/3136] Building CXX object caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/interpreter.cpp.o
../torch/csrc/jit/interpreter.cpp:292:23: warning: moving a temporary object prevents copy elision [-Wpessimizing-move]
can_emit_inline = std::move(CanEmitInline(graph).can_emit_inline_);
^
../torch/csrc/jit/interpreter.cpp:292:23: note: remove std::move call here
can_emit_inline = std::move(CanEmitInline(graph).can_emit_inline_);
^~~~~~~~~~ ~
1 warning generated.
```
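The `-Wpessimizing-move` note says the `std::move` around `CanEmitInline(graph).can_emit_inline_` can be removed: a member accessed through a temporary is already an rvalue, so plain assignment already selects the move assignment operator. A minimal sketch with hypothetical `Payload` and `Analysis` types that count which assignment operator runs (the counting is only for illustration):

```cpp
#include <utility>

// Hypothetical payload type that records which assignment operator was used.
struct Payload {
  int copies = 0;
  int moves = 0;
  Payload() = default;
  Payload(const Payload&) = default;
  Payload(Payload&&) = default;
  Payload& operator=(const Payload&) { ++copies; return *this; }
  Payload& operator=(Payload&&) { ++moves; return *this; }
};

// Hypothetical analysis exposing its result as a public member, mirroring
// the shape of `CanEmitInline(graph).can_emit_inline_`.
struct Analysis {
  Payload result_;
};

// A member of a temporary is an rvalue, so this already moves.
Payload assign_without_move() {
  Payload target;
  target = Analysis().result_;
  return target;
}

// The extra std::move selects the same move assignment; it adds nothing.
Payload assign_with_move() {
  Payload target;
  target = std::move(Analysis().result_);
  return target;
}
```

Both functions return a `Payload` with `moves == 1` and `copies == 0`.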

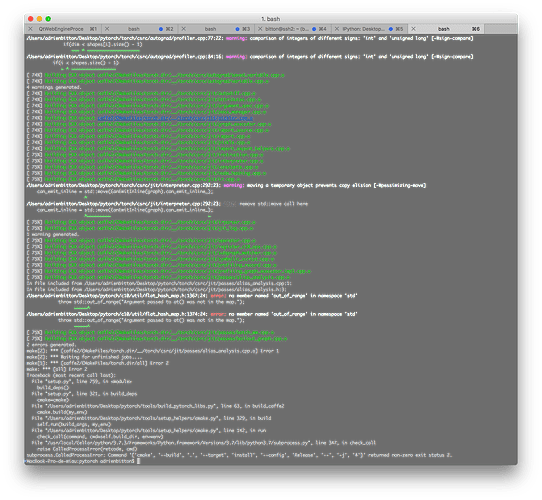

```
[1967/3136] Building CXX object caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/passes/alias_analysis.cpp.o
FAILED: caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/passes/alias_analysis.cpp.o
/Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/bin/clang++ -DAT_PARALLEL_OPENMP=1 -DCAFFE2_BUILD_MAIN_LIB -DCPUINFO_SUPPORTED_PLATFORM=1 -DHAVE_MMAP=1 -DHAVE_SHM_OPEN=1 -DHAVE_SHM_UNLINK=1 -DIDEEP_USE_MKL -DNNP_CONVOLUTION_ONLY=0 -DNNP_INFERENCE_ONLY=0 -DONNX_ML=1 -DONNX_NAMESPACE=onnx_torch -DTH_BLAS_MKL -DUSE_CUDA -D_FILE_OFFSET_BITS=64 -D_THP_CORE -Dtorch_EXPORTS -Iaten/src -I../aten/src -I. -I../ -I../cmake/../third_party/benchmark/include -Icaffe2/contrib/aten -I../third_party/onnx -Ithird_party/onnx -I../third_party/foxi -Ithird_party/foxi -I../caffe2/../torch/csrc/api -I../caffe2/../torch/csrc/api/include -I../caffe2/aten/src/TH -Icaffe2/aten/src/TH -I../caffe2/../torch/../aten/src -Icaffe2/aten/src -Icaffe2/../aten/src -Icaffe2/../aten/src/ATen -I../caffe2/../torch/csrc -I../caffe2/../torch/../third_party/miniz-2.0.8 -I../aten/src/TH -I../aten/../third_party/catch/single_include -I../aten/src/ATen/.. -Icaffe2/aten/src/ATen -I../third_party/miniz-2.0.8 -I../caffe2/core/nomnigraph/include -Icaffe2/aten/src/THC -I../aten/src/THC -I../aten/src/THCUNN -I../aten/src/ATen/cuda -I../c10/.. -Ithird_party/ideep/mkl-dnn/include -I../third_party/ideep/mkl-dnn/src/../include -I../third_party/QNNPACK/include -I../third_party/pthreadpool/include -I../aten/src/ATen/native/quantized/cpu/qnnpack/include -I../aten/src/ATen/native/quantized/cpu/qnnpack/src -I../third_party/QNNPACK/deps/clog/include -I../third_party/NNPACK/include -I../third_party/cpuinfo/include -I../third_party/FP16/include -I../c10/cuda/../..
-isystem ../cmake/../third_party/googletest/googlemock/include -isystem ../cmake/../third_party/googletest/googletest/include -isystem ../third_party/protobuf/src -isystem /usr/local/include -isystem ../third_party/gemmlowp -isystem ../third_party/neon2sse -isystem ../cmake/../third_party/eigen -isystem /usr/local/Cellar/python/3.7.3/Frameworks/Python.framework/Versions/3.7/include/python3.7m -isystem /usr/local/lib/python3.7/site-packages/numpy/core/include -isystem ../cmake/../third_party/pybind11/include -isystem /opt/rocm/hip/include -isystem /include -isystem ../cmake/../third_party/cub -isystem /usr/local/cuda/include -isystem ../third_party/ideep/mkl-dnn/include -isystem ../third_party/ideep/include -isystem include -Wno-deprecated -fvisibility-inlines-hidden -Wno-deprecated-declarations -Xpreprocessor -fopenmp -I/usr/local/include -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -O2 -fPIC -Wno-narrowing -Wall -Wextra -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-variable -Wno-unused-function -Wno-unused-result -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -Wno-invalid-partial-specialization -Wno-typedef-redefinition -Wno-unknown-warning-option -Wno-unused-private-field -Wno-inconsistent-missing-override -Wno-aligned-allocation-unavailable -Wno-c++14-extensions -Wno-constexpr-not-const -Wno-missing-braces -Qunused-arguments -fcolor-diagnostics -fno-math-errno -fno-trapping-math -Wno-unused-private-field -Wno-missing-braces -Wno-c++14-extensions -Wno-constexpr-not-const -DHAVE_AVX_CPU_DEFINITION -DHAVE_AVX2_CPU_DEFINITION -O3 -isysroot /Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX10.12.sdk -mmacosx-version-min=10.9 -fPIC -DCUDA_HAS_FP16=1 -DHAVE_GCC_GET_CPUID -DUSE_AVX -DUSE_AVX2 -DTH_HAVE_THREAD -Wall -Wextra -Wno-unused-parameter
-Wno-missing-field-initializers -Wno-write-strings -Wno-unknown-pragmas -Wno-missing-braces -fvisibility=hidden -DCAFFE2_BUILD_MAIN_LIB -O2 -std=gnu++11 -MD -MT caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/passes/alias_analysis.cpp.o -MF caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/passes/alias_analysis.cpp.o.d -o caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/passes/alias_analysis.cpp.o -c ../torch/csrc/jit/passes/alias_analysis.cpp
In file included from ../torch/csrc/jit/passes/alias_analysis.cpp:1:
In file included from ../torch/csrc/jit/passes/alias_analysis.h:3:
../c10/util/flat_hash_map.h:1367:24: error: no member named 'out_of_range' in namespace 'std'
throw std::out_of_range("Argument passed to at() was not in the map.");
~~~~~^
../c10/util/flat_hash_map.h:1374:24: error: no member named 'out_of_range' in namespace 'std'
throw std::out_of_range("Argument passed to at() was not in the map.");
~~~~~^
2 errors generated.
[1970/3136] Building CXX object caffe2/CMakeFiles/torch.dir/__/torch/csrc/jit/passes/batch_mm.cpp.o
ninja: build stopped: subcommand failed.
```
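The build actually stops on the two `error:` lines: `c10/util/flat_hash_map.h` throws `std::out_of_range` without that name being declared in the translation unit. The standard declares `std::out_of_range` in `<stdexcept>`, so a header that relies on some other header to include it transitively can break with a different standard library or SDK (this build uses libc++ from the MacOSX10.12 SDK, as the `std::__1::` names show). Adding `#include <stdexcept>` to that header looks like a plausible local workaround, though it is untested here. The sketch below shows the self-contained pattern with a hypothetical `checked_at` helper, not the real `flat_hash_map::at`:

```cpp
#include <stdexcept>  // declares std::out_of_range; the header above relies on getting it indirectly
#include <string>
#include <unordered_map>

// Hypothetical helper mirroring what an at() member does: look up a key
// and throw std::out_of_range when it is missing.
int checked_at(const std::unordered_map<std::string, int>& map,
               const std::string& key) {
  auto it = map.find(key);
  if (it == map.end()) {
    throw std::out_of_range("Argument passed to at() was not in the map.");
  }
  return it->second;
}
```

With `<stdexcept>` included explicitly, the `throw` compiles regardless of what other headers happen to pull in.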

```
Traceback (most recent call last):
  File "setup.py", line 756, in <module>
    build_deps()
  File "setup.py", line 321, in build_deps
    cmake=cmake)
  File "/Users/adrienbitton/Desktop/pytorch/tools/build_pytorch_libs.py", line 63, in build_caffe2
    cmake.build(my_env)
  File "/Users/adrienbitton/Desktop/pytorch/tools/setup_helpers/cmake.py", line 331, in build
    self.run(build_args, my_env)
  File "/Users/adrienbitton/Desktop/pytorch/tools/setup_helpers/cmake.py", line 142, in run
    check_call(command, cwd=self.build_dir, env=env)
  File "/usr/local/Cellar/python/3.7.3/Frameworks/Python.framework/Versions/3.7/lib/python3.7/subprocess.py", line 347, in check_call
    raise CalledProcessError(retcode, cmd)
subprocess.CalledProcessError: Command '['cmake', '--build', '.', '--target', 'install', '--config', 'Release', '--', '-j', '4']' returned non-zero exit status 1.
```