Hi Forum!

I decided to build PyTorch for a GTX 660 graphics card (don’t ask why, I don’t know).

I got the idea from the article.

What I used:

NVIDIA Driver

474.30-desktop-win10-win11-64bit-international-dch-whql

cuda 10.2

cuda_10.2.89_441.22_win10.exe

2 updates (cuda_10.2.1_win10 and cuda_10.2.2_win10)

cudnn 8.7.0

cudnn-windows-x86_64-8.7.0.84_cuda10-archive

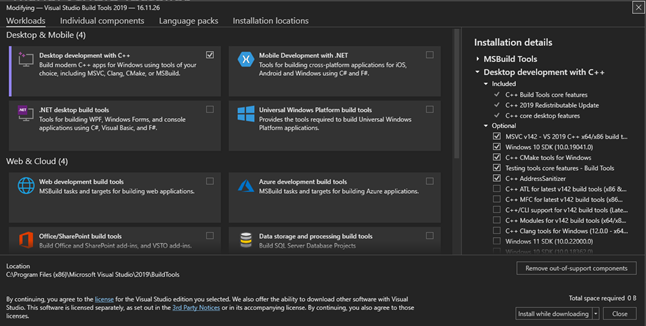

BuildTools 14.29.30133

magma

magma_2.5.4_cuda102_release

mkl

mkl_2020.2.254

Replaced onnx library

onnx-1.8.1

I ran into a lot of problems and managed to solve most of them, but when the build got to C10, I hit errors that I have no idea how to solve.

Here is the PyTorch build log:

```
(D:\condaEnv) D:\Code\pytorch>python setup.py install --cmake
Building wheel torch-1.12.0a0+git664058f
-- Building version 1.12.0a0+git664058f
cmake -GNinja -DBUILD_PYTHON=True -DBUILD_TEST=True -DCMAKE_BUILD_TYPE=Release -DCMAKE_INCLUDE_PATH=D:\Pytorch_requirements\mkl\include -DCMAKE_INSTALL_PREFIX=D:\Code\pytorch\torch -DCMAKE_PREFIX_PATH=D:\condaEnv\Lib\site-packages -DMSVC_Z7_OVERRIDE=OFF -DNUMPY_INCLUDE_DIR=D:\condaEnv\Lib\site-packages\numpy\core\include -DPYTHON_EXECUTABLE=D:\condaEnv\python.exe -DPYTHON_INCLUDE_DIR=D:\condaEnv\Include -DPYTHON_LIBRARY=D:\condaEnv/libs/python311.lib -DTORCH_BUILD_VERSION=1.12.0a0+git664058f -DUSE_KINETO=0 -DUSE_NUMPY=True D:\Code\pytorch
-- The CXX compiler identification is MSVC 19.29.30148.0
-- The C compiler identification is MSVC 19.29.30148.0
-- Detecting CXX compiler ABI info
-- Detecting CXX compiler ABI info - done
-- Check for working CXX compiler: C:/Program Files (x86)/Microsoft Visual Studio/2019/BuildTools/VC/Tools/MSVC/14.29.30133/bin/Hostx64/x64/cl.exe - skipped
-- Detecting CXX compile features
-- Detecting CXX compile features - done
-- Detecting C compiler ABI info
-- Detecting C compiler ABI info - done
-- Check for working C compiler: C:/Program Files (x86)/Microsoft Visual Studio/2019/BuildTools/VC/Tools/MSVC/14.29.30133/bin/Hostx64/x64/cl.exe - skipped
-- Detecting C compile features
-- Detecting C compile features - done
-- Not forcing any particular BLAS to be found
CMake Warning (dev) at D:/condaEnv/Library/share/cmake-3.24/Modules/CMakeDependentOption.cmake:89 (message):
Policy CMP0127 is not set: cmake_dependent_option() supports full Condition
Syntax. Run "cmake --help-policy CMP0127" for policy details. Use the
cmake_policy command to set the policy and suppress this warning.
Call Stack (most recent call first):
CMakeLists.txt:259 (cmake_dependent_option)
This warning is for project developers. Use -Wno-dev to suppress it.
CMake Warning (dev) at D:/condaEnv/Library/share/cmake-3.24/Modules/CMakeDependentOption.cmake:89 (message):
Policy CMP0127 is not set: cmake_dependent_option() supports full Condition
Syntax. Run "cmake --help-policy CMP0127" for policy details. Use the
cmake_policy command to set the policy and suppress this warning.
Call Stack (most recent call first):
CMakeLists.txt:290 (cmake_dependent_option)
This warning is for project developers. Use -Wno-dev to suppress it.
CMake Warning at CMakeLists.txt:367 (message):
TensorPipe cannot be used on Windows. Set it to OFF
...
...
cmake --build . --target install --config Release -- -j 1
[2/759] Building CUDA object caffe2\CMakeFiles\torch_cuda.dir\__\aten\src\ATen\native\cuda\DepthwiseConv2d.cu.obj
FAILED: caffe2/CMakeFiles/torch_cuda.dir/__/aten/src/ATen/native/cuda/DepthwiseConv2d.cu.obj
C:\PROGRA~1\NVIDIA~2\CUDA\v10.2\bin\nvcc.exe -forward-unknown-to-host-compiler -DAT_PER_OPERATOR_HEADERS -DIDEEP_USE_MKL -DMINIZ_DISABLE_ZIP_READER_CRC32_CHECKS -DONNXIFI_ENABLE_EXT=1 -DONNX_ML=1 -DONNX_NAMESPACE=onnx_torch -DTORCH_CUDA_BUILD_MAIN_LIB -DUSE_C10D_GLOO -DUSE_CUDA -DUSE_DISTRIBUTED -DUSE_EXPERIMENTAL_CUDNN_V8_API -DUSE_EXTERNAL_MZCRC -DWIN32_LEAN_AND_MEAN -D_CRT_SECURE_NO_DEPRECATE=1 -D_OPENMP_NOFORCE_MANIFEST -Dtorch_cuda_EXPORTS -ID:\Code\pytorch\build\aten\src -ID:\Code\pytorch\aten\src -ID:\Code\pytorch\build -ID:\Code\pytorch -ID:\Code\pytorch\cmake\..\third_party\benchmark\include -ID:\Code\pytorch\cmake\..\third_party\cudnn_frontend\include -ID:\Code\pytorch\third_party\onnx -ID:\Code\pytorch\build\third_party\onnx -ID:\Code\pytorch\third_party\foxi -ID:\Code\pytorch\build\third_party\foxi -ID:\Code\pytorch\build\include -ID:\Code\pytorch\torch\csrc\distributed -ID:\Code\pytorch\aten\src\THC -ID:\Code\pytorch\aten\src\ATen\cuda -ID:\Code\pytorch\build\caffe2\aten\src -ID:\Code\pytorch\aten\..\third_party\catch\single_include -ID:\Code\pytorch\aten\src\ATen\.. -ID:\Code\pytorch\c10\cuda\..\.. -ID:\Code\pytorch\c10\.. -ID:\Code\pytorch\torch\csrc\api -ID:\Code\pytorch\torch\csrc\api\include -isystem=D:\Code\pytorch\build\third_party\gloo -isystem=D:\Code\pytorch\cmake\..\third_party\gloo -isystem=D:\Code\pytorch\cmake\..\third_party\googletest\googlemock\include -isystem=D:\Code\pytorch\cmake\..\third_party\googletest\googletest\include -isystem=D:\Code\pytorch\third_party\protobuf\src -isystem=D:\Pytorch_requirements\mkl\include -isystem=D:\Code\pytorch\third_party\XNNPACK\include -isystem=D:\Code\pytorch\cmake\..\third_party\eigen -isystem=D:\Code\pytorch\cmake\..\third_party\cub -isystem="C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\include" -isystem=D:\Code\pytorch\third_party\ideep\mkl-dnn\third_party\oneDNN\include -isystem=D:\Code\pytorch\third_party\ideep\include -isystem="C:\Program Files\NVIDIA Corporation\NvToolsExt\include" -isystem=D:\Pytorch_requirements\magma\include -Xcompiler /w -w -Xfatbin -compress-all -DONNX_NAMESPACE=onnx_torch --use-local-env -gencode arch=compute_30,code=sm_30 -Xcudafe --diag_suppress=cc_clobber_ignored,--diag_suppress=integer_sign_change,--diag_suppress=useless_using_declaration,--diag_suppress=set_but_not_used,--diag_suppress=field_without_dll_interface,--diag_suppress=base_class_has_different_dll_interface,--diag_suppress=dll_interface_conflict_none_assumed,--diag_suppress=dll_interface_conflict_dllexport_assumed,--diag_suppress=implicit_return_from_non_void_function,--diag_suppress=unsigned_compare_with_zero,--diag_suppress=declared_but_not_referenced,--diag_suppress=bad_friend_decl --Werror cross-execution-space-call --no-host-device-move-forward --expt-relaxed-constexpr --expt-extended-lambda -Xcompiler=/wd4819,/wd4503,/wd4190,/wd4244,/wd4251,/wd4275,/wd4522 -Wno-deprecated-gpu-targets --expt-extended-lambda -DCUDA_HAS_FP16=1 -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -Xcompiler="-MD -O2 -Ob2" -DNDEBUG -Xcompiler /MD -DCAFFE2_USE_GLOO -DTH_HAVE_THREAD -Xcompiler= -DTORCH_CUDA_BUILD_MAIN_LIB -std=c++14 -MD -MT caffe2\CMakeFiles\torch_cuda.dir\__\aten\src\ATen\native\cuda\DepthwiseConv2d.cu.obj -MF caffe2\CMakeFiles\torch_cuda.dir\__\aten\src\ATen\native\cuda\DepthwiseConv2d.cu.obj.d -x cu -c D:\Code\pytorch\aten\src\ATen\native\cuda\DepthwiseConv2d.cu -o caffe2\CMakeFiles\torch_cuda.dir\__\aten\src\ATen\native\cuda\DepthwiseConv2d.cu.obj -Xcompiler=-Fdcaffe2\CMakeFiles\torch_cuda.dir\,-FS
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::TensorType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::TensorType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::NoneType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::NoneType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::BoolType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::BoolType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::IntType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::IntType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::FloatType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::FloatType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::SymIntType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::SymIntType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::ComplexType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::ComplexType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::NumberType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::NumberType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::StringType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::StringType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::ListType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::ListType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::TupleType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::TupleType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::DictType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::DictType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::ClassType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::ClassType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::OptionalType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::OptionalType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::AnyListType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::AnyListType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::AnyTupleType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::AnyTupleType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::DeviceObjType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::DeviceObjType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::StreamObjType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::StreamObjType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::CapsuleType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::CapsuleType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::GeneratorType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::GeneratorType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::StorageType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::StorageType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::VarType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::VarType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::AnyClassType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::AnyClassType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::QSchemeType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::QSchemeType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::QuantizerType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::QuantizerType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::AnyEnumType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::AnyEnumType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::RRefType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::RRefType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::FutureType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::FutureType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: member "c10::DynamicTypeTrait<c10::AnyType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(235): note: the value of member "c10::DynamicTypeTrait<c10::AnyType>::isBaseType"
(235): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): error: member "c10::DynamicTypeTrait<c10::ScalarTypeType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): note: the value of member "c10::DynamicTypeTrait<c10::ScalarTypeType>::isBaseType"
(236): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): error: member "c10::DynamicTypeTrait<c10::LayoutType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): note: the value of member "c10::DynamicTypeTrait<c10::LayoutType>::isBaseType"
(236): here cannot be used as a constant
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): error: member "c10::DynamicTypeTrait<c10::MemoryFormatType>::isBaseType" may not be initialized
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): error: expression must have a constant value
D:/Code/pytorch/aten/src\ATen/core/dynamic_type.h(236): note: the value of member "c10::DynamicTypeTrait<c10::MemoryFormatType>::isBaseType"
(236): here cannot be used as a constant
64 errors detected in the compilation of "C:/Users/PromiX/AppData/Local/Temp/tmpxft_00001d88_00000000-7_DepthwiseConv2d.cpp1.ii".
nvcc warning : The -std=c++14 flag is not supported with the configured host compiler. Flag will be ignored.
DepthwiseConv2d.cu
ninja: build stopped: subcommand failed
tmpxft_000010e8_00000000-0
(D:\condaEnv) D:\Code\pytorch\build\CMakeFiles\ShowIncludes>call "C:/Program Files (x86)/Microsoft Visual Studio/2019/BuildTools/VC/Tools/MSVC/14.29.30133/bin/HostX64/x64/../../../../../../../VC/Auxiliary/Build/vcvars64.bat"
**********************************************************************
** Visual Studio 2019 Developer Command Prompt v16.11.26
** Copyright (c) 2021 Microsoft Corporation
**********************************************************************
[vcvarsall.bat] Environment initialized for: 'x64'
```

FULL LOG

I would be grateful if someone could tell me what might be causing these errors.

Thank you in advance.