When I build PyTorch from source, the build fails with the following error.

My platform is: Ubuntu 16.04 + CUDA 7.5

[ 1%] Linking CXX shared library libTHCUNN.so

[100%] Built target THCUNN

Install the project...

-- Install configuration: "Release"

-- Installing: /home/yuhang/pytorch/pytorch-master/torch/lib/tmp_install/lib/libTHCUNN.so.1

-- Installing: /home/yuhang/pytorch/pytorch-master/torch/lib/tmp_install/lib/libTHCUNN.so

-- Set runtime path of "/home/yuhang/pytorch/pytorch-master/torch/lib/tmp_install/lib/libTHCUNN.so.1" to ""

-- Up-to-date: /home/yuhang/pytorch/pytorch-master/torch/lib/tmp_install/include/THCUNN/THCUNN.h

-- Up-to-date: /home/yuhang/pytorch/pytorch-master/torch/lib/tmp_install/include/THCUNN/generic/THCUNN.h

-- Configuring done

-- Generating done

-- Build files have been written to: /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl

[100%] Generating lib/libnccl.so

Compiling src/libwrap.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/libwrap.o

Compiling src/core.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/core.o

Compiling src/all_gather.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/all_gather.o

Compiling src/all_reduce.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/all_reduce.o

Compiling src/broadcast.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/broadcast.o

Compiling src/reduce_scatter.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/reduce_scatter.o

Compiling src/reduce.cu > /home/yuhang/pytorch/pytorch-master/torch/lib/build/nccl/obj/reduce.o

ptxas warning : Too big maxrregcount value specified 96, will be ignored

ptxas warning : Too big maxrregcount value specified 96, will be ignored

ptxas warning : Too big maxrregcount value specified 96, will be ignored

ptxas warning : Too big maxrregcount value specified 96, will be ignored

/usr/include/string.h: In function ‘void* __mempcpy_inline(void*, const void*, size_t)’:

/usr/include/string.h:652:42: error: ‘memcpy’ was not declared in this scope

return (char *) memcpy (__dest, __src, __n) + __n;

^

/usr/include/string.h: In function ‘void* __mempcpy_inline(void*, const void*, size_t)’:

/usr/include/string.h:652:42: error: ‘memcpy’ was not declared in this scope

return (char *) memcpy (__dest, __src, __n) + __n;

Note that a similar problem in the Caffe installation can be solved as described here:

https://groups.google.com/forum/#!msg/caffe-users/Tm3OsZBwN9Q/XKGRKNdmBAAJ

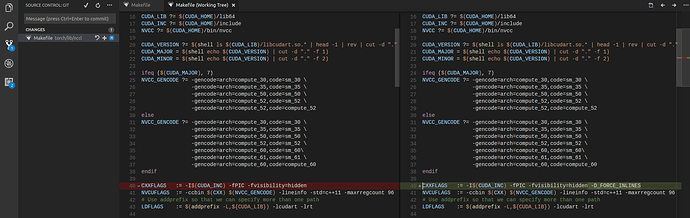

That fix works by changing CMakeLists.txt. Is there a similar solution for PyTorch?
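
For reference, the Caffe workaround, as far as I understand it, is to define `_FORCE_INLINES` for the compiler in CMakeLists.txt. A minimal sketch of that kind of change (the exact line and its placement are my assumption, not copied from the thread):

```cmake
# Assumed workaround: defining _FORCE_INLINES is meant to make glibc's
# string.h skip the inline __mempcpy_inline definition that fails above.
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -D_FORCE_INLINES")
```

I have not checked whether a flag set this way would reach the nccl build, which is where the error appears in the log above.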

Thanks,

Yuhang