I’ve been post-processing the profiler output so that all calls to a given function are summed together. My output now looks like this:

```
…
mul : CPU time 170430.96 calls 844
addmm : CPU time 171562.23 calls 70
mm : CPU time 377847.61 calls 839
matmul : CPU time 379620.04 calls 839
```

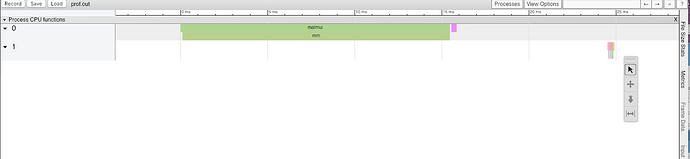
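For reference, here is a minimal sketch of the per-function aggregation described above. It assumes each profiler event has already been reduced to a hypothetical `(name, cpu_time)` pair; the event list is made-up data, not real profiler output, and `aggregate_by_name` is a hypothetical helper.

```python
from collections import defaultdict


def aggregate_by_name(events):
    """Sum CPU time and count calls per function name.

    `events` is an iterable of hypothetical (name, cpu_time) pairs.
    Returns {name: (total_cpu_time, call_count)}.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for name, cpu_time in events:
        totals[name][0] += cpu_time  # accumulate CPU time for this name
        totals[name][1] += 1         # one more call to this name
    return {name: (t, n) for name, (t, n) in totals.items()}


# Made-up events for illustration only.
events = [("mm", 10.0), ("matmul", 11.0), ("mm", 20.0), ("matmul", 21.5), ("mul", 3.0)]

for name, (cpu, calls) in sorted(aggregate_by_name(events).items()):
    print(f"{name} : CPU time {cpu:.2f} calls {calls}")
```

Note that this only groups by name. It has no notion of which calls happen inside which others, which is what matters for the question below.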

Now, what I’m wondering is this: mm and matmul have exactly the same number of calls (839), and their CPU times are very close as well. Is matmul actually calling mm here? Also, at the end I sum all of the CPU times together to get a total CPU time for all the calls in the profile. Is that correct? (If matmul is actually calling mm, it would not be, because the time spent in mm would be counted twice: once under mm and again inside matmul.)
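To illustrate the double-counting concern, here is a small sketch under stated assumptions. It assumes each event carries a hypothetical `(event_id, name, inclusive_cpu_time, parent_id)` record, where an event's inclusive time covers the time of everything nested inside it. The data is made up. Summing inclusive times counts a nested op once for itself and again for its parent. Summing *self* times (inclusive time minus the inclusive time of direct children) avoids that and matches the total of the top-level events.

```python
from collections import defaultdict

# Hypothetical event records: (event_id, name, inclusive_cpu_time, parent_id).
# parent_id is None for top-level ops. Made-up data for illustration only.
events = [
    (1, "matmul", 100.0, None),
    (2, "mm", 95.0, 1),  # assumed: mm runs entirely inside matmul
    (3, "mul", 20.0, None),
]


def self_times(events):
    """Return {event_id: self_time}: inclusive time minus direct children's inclusive time."""
    child_time = defaultdict(float)
    for _, _, inclusive, parent in events:
        if parent is not None:
            child_time[parent] += inclusive
    return {eid: inclusive - child_time[eid] for eid, _, inclusive, _ in events}


inclusive_total = sum(inclusive for _, _, inclusive, _ in events)
self_total = sum(self_times(events).values())
top_level_total = sum(inclusive for _, _, inclusive, parent in events if parent is None)

print(f"sum of inclusive times: {inclusive_total}")  # mm's time is counted twice
print(f"sum of self times:      {self_total}")
print(f"sum of top-level times: {top_level_total}")
```

Here the inclusive sum is 215.0, while both the self-time sum and the top-level sum are 120.0.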

Are there any profiler options that report this kind of aggregated data directly, i.e. total calls and total CPU time per function?