Hello!

I am trying to use a PyTorch model with Metal on iOS, but I keep getting these errors:

2021-12-04 23:40:04.794982+0100 ObjectDetection[1438:328965] 'memory_format' argument is incompatible with Metal tensor

Debug info for handle(s): -1, was not found.

Exception raised from empty at /Users/distiller/project/aten/src/ATen/native/metal/MetalAten.mm:84 (most recent call first):

frame #0: _ZN3c106detail14torchCheckFailEPKcS2_jS2_ + 92 (0x104f03b04 in ObjectDetection)

frame #1: _ZN2at6native5metal5emptyEN3c108ArrayRefIxEENS2_8optionalINS2_10ScalarTypeEEENS5_INS2_6LayoutEEENS5_INS2_6DeviceEEENS5_IbEENS5_INS2_12MemoryFormatEEE + 284 (0x104d1ca5c in ObjectDetection)

frame #2: _ZN2at12_GLOBAL__N_119empty_memory_formatEN3c108ArrayRefIxEENS1_8optionalINS1_10ScalarTypeEEENS4_INS1_6LayoutEEENS4_INS1_6DeviceEEENS4_IbEENS4_INS1_12MemoryFormatEEE + 220 (0x10428e2fc in ObjectDetection)

frame #3: _ZNK3c1010Dispatcher4callIN2at6TensorEJNS_8ArrayRefIxEENS_8optionalINS_10ScalarTypeEEENS6_INS_6LayoutEEENS6_INS_6DeviceEEENS6_IbEENS6_INS_12MemoryFormatEEEEEET_RKNS_19TypedOperatorHandleIFSG_DpT0_EEESJ_ + 220 (0x10410b9dc in ObjectDetection)

frame #4: _ZN2at4_ops19empty_memory_format4callEN3c108ArrayRefIxEENS2_8optionalINS2_10ScalarTypeEEENS5_INS2_6LayoutEEENS5_INS2_6DeviceEEENS5_IbEENS5_INS2_12MemoryFormatEEE + 144 (0x1040d00d0 in ObjectDetection)

frame #5: _ZN2at12_GLOBAL__N_141structured_elu_default_backend_functional10set_outputExN3c108ArrayRefIxEES4_NS2_13TensorOptionsENS3_INS_7DimnameEEE + 152 (0x1043d2968 in ObjectDetection)

frame #6: _ZN2at18TensorIteratorBase11fast_set_upERKNS_20TensorIteratorConfigE + 268 (0x104d1a424 in ObjectDetection)

frame #7: _ZN2at18TensorIteratorBase5buildERNS_20TensorIteratorConfigE + 152 (0x104d17d38 in ObjectDetection)

frame #8: _ZN2at18TensorIteratorBase14build_unary_opERKNS_10TensorBaseES3_ + 152 (0x104d187cc in ObjectDetection)

frame #9: _ZN2at12_GLOBAL__N_111wrapper_eluERKNS_6TensorERKN3c106ScalarES7_S7_ + 136 (0x10437d744 in ObjectDetection)

frame #10: _ZN3c104impl34call_functor_with_args_from_stack_INS0_6detail31WrapFunctionIntoRuntimeFunctor_IPFN2at6TensorERKS5_RKNS_6ScalarESA_SA_ES5_NS_4guts8typelist8typelistIJS7_SA_SA_SA_EEEEELb0EJLm0ELm1ELm2ELm3EEJS7_SA_SA_SA_EEENSt3__15decayINSD_21infer_function_traitsIT_E4type11return_typeEE4typeEPNS_14OperatorKernelENS_14DispatchKeySetEPNSI_6vectorINS_6IValueENSI_9allocatorISU_EEEENSI_16integer_sequenceImJXspT1_EEEEPNSF_IJDpT2_EEE + 140 (0x10432c65c in ObjectDetection)

frame #11: _ZN3c104impl31make_boxed_from_unboxed_functorINS0_6detail31WrapFunctionIntoRuntimeFunctor_IPFN2at6TensorERKS5_RKNS_6ScalarESA_SA_ES5_NS_4guts8typelist8typelistIJS7_SA_SA_SA_EEEEELb0EE4callEPNS_14OperatorKernelERKNS_14OperatorHandleENS_14DispatchKeySetEPNSt3__16vectorINS_6IValueENSP_9allocatorISR_EEEE + 40 (0x10432c56c in ObjectDetection)

frame #12: _ZNK3c1010Dispatcher9callBoxedERKNS_14OperatorHandleEPNSt3__16vectorINS_6IValueENS4_9allocatorIS6_EEEE + 128 (0x104deb470 in ObjectDetection)

frame #13: _ZNSt3__110__function6__funcIZN5torch3jit6mobile8Function15append_operatorERKNS_12basic_stringIcNS_11char_traitsIcEENS_9allocatorIcEEEESD_RKN3c108optionalIiEExE3$_3NS9_ISJ_EEFvRNS_6vectorINSE_6IValueENS9_ISM_EEEEEEclESP_ + 668 (0x104debfa8 in ObjectDetection)

frame #14: _ZN5torch3jit6mobile16InterpreterState3runERNSt3__16vectorIN3c106IValueENS3_9allocatorIS6_EEEE + 4200 (0x104df6134 in ObjectDetection)

frame #15: _ZNK5torch3jit6mobile8Function3runERNSt3__16vectorIN3c106IValueENS3_9allocatorIS6_EEEE + 192 (0x104dea584 in ObjectDetection)

frame #16: _ZNK5torch3jit6mobile6Method3runERNSt3__16vectorIN3c106IValueENS3_9allocatorIS6_EEEE + 544 (0x104dfcf50 in ObjectDetection)

frame #17: _ZNK5torch3jit6mobile6MethodclENSt3__16vectorIN3c106IValueENS3_9allocatorIS6_EEEE + 24 (0x104dfdad0 in ObjectDetection)

frame #18: _ZN5torch3jit6mobile6Module7forwardENSt3__16vectorIN3c106IValueENS3_9allocatorIS6_EEEE + 148 (0x104eb23a8 in ObjectDetection)

frame #19: -[InferenceModule + + (0x104eb1b20 in ObjectDetection)

frame #20: $s15ObjectDetection14ViewControllerC9runTappedyyypFyycfU_ + 1072 (0x104ecc234 in ObjectDetection)

frame #21: $sIeg_IeyB_TR + 48 (0x104ebb15c in ObjectDetection)

frame #22: _dispatch_call_block_and_release + 24 (0x1068e7ce4 in libdispatch.dylib)

frame #23: _dispatch_client_callout + 16 (0x1068e9528 in libdispatch.dylib)

frame #24: _dispatch_queue_override_invoke + 888 (0x1068ebcc4 in libdispatch.dylib)

frame #25: _dispatch_root_queue_drain + 376 (0x1068fb048 in libdispatch.dylib)

frame #26: _dispatch_worker_thread2 + 152 (0x1068fb970 in libdispatch.dylib)

frame #27: _pthread_wqthread + 212 (0x1d24be568 in libsystem_pthread.dylib)

frame #28: start_wqthread + 8 (0x1d24c1874 in libsystem_pthread.dylib)
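A trace like this can be scanned mechanically for the operator that was running when the exception was raised. The following is a minimal sketch, assuming the `wrapper_<op>` naming visible in frame #9 also holds for other operators (a heuristic taken from this trace, not a documented guarantee). The names `WRAPPER_RE` and `ops_in_trace` are hypothetical helpers introduced only for illustration.

```python
import re
from typing import List

# Heuristic (assumption): generated ATen kernel wrappers appear in mangled
# symbols as "wrapper_<op>", as in frame #9 of the trace ("wrapper_elu").
# The character class stops at the first uppercase letter, so overload
# suffixes containing uppercase letters would be cut off.
WRAPPER_RE = re.compile(r"wrapper_([a-z0-9_]+)")


def ops_in_trace(trace: str) -> List[str]:
    """Return operator names found in wrapper frames, in order, without duplicates."""
    seen: List[str] = []
    for match in WRAPPER_RE.finditer(trace):
        op = match.group(1)
        if op not in seen:
            seen.append(op)
    return seen


# Two frames copied from the trace (offsets and addresses trimmed).
sample = """
frame #8: _ZN2at18TensorIteratorBase14build_unary_opERKNS_10TensorBaseES3_ + 152
frame #9: _ZN2at12_GLOBAL__N_111wrapper_eluERKNS_6TensorERKN3c106ScalarES7_S7_ + 136
"""

print(ops_in_trace(sample))  # ['elu']
```

For this trace the only match is `elu`, which suggests the exception is raised while an ELU activation is being evaluated on a Metal tensor, not in code that is specific to quantization.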

I searched for answers and found this issue. The explanation given there was that the PyTorch Metal backend doesn't support quantized models. However, I don't think that is the problem in my case: I use this model, and I could not find any sign of quantization. Furthermore, in this paper, the creator of the model ends the conclusion with these words:

> Some techniques may further improve performance and accuracy, such as quantization, pruning, knowledge distillation. We left them for the future research.

I also print out the is_quantized property every time the output is returned, and it’s false every single time.

The forward function:

    def forward(self, x):
        backbone_features = self.model(x)
        backbone_features = self.cpm(backbone_features)

        stages_output = self.initial_stage(backbone_features)
        for refinement_stage in self.refinement_stages:
            stages_output.extend(
                refinement_stage(torch.cat([backbone_features, stages_output[-2], stages_output[-1]], dim=1)))

        for i, tensor in enumerate(stages_output):
            print(f'output {i} shape = {tensor.shape}')
            print(f'output {i} is_quantized: {tensor.is_quantized}')
        print("--------------------next frame -----------------------")

        return stages_output

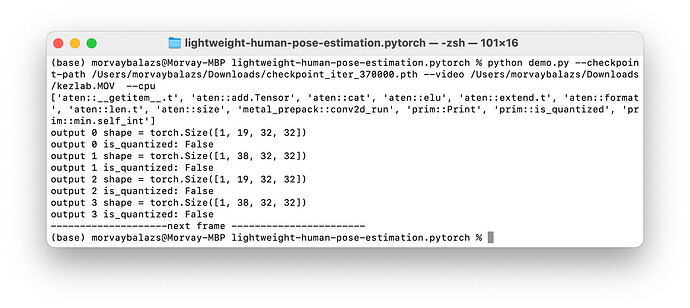

The output of the prints:

Inference code on the iOS side:

    - (NSArray<NSNumber*>*)detectImage:(void*)imageBuffer {
        try {
            at::Tensor tensor = torch::from_blob(imageBuffer, { 1, 3, input_height, input_width }, at::kFloat).metal();
            c10::InferenceMode guard;
            CFTimeInterval startTime = CACurrentMediaTime();
            auto outputTensorList = _impl.forward({ tensor }).toTensorList();
            CFTimeInterval elapsedTime = CACurrentMediaTime() - startTime;
            NSLog(@"inference time:%f", elapsedTime);

            auto heatmap1 = outputTensorList.get(0).dequantize().cpu();
            // outputs 19 pieces of 80*80 matrices
            auto heatmap_pixels = heatmap1[0][18]; // the 19th heatmap, 80*80
            auto dim1 = heatmap_pixels.size(0);
            auto dim2 = heatmap_pixels.size(1);

            float* floatBuffer = heatmap_pixels.data_ptr<float>();
            if (!floatBuffer) {
                return nil;
            }

            NSMutableArray* results = [[NSMutableArray alloc] init];
            for (int i = 0; i < (dim1*dim2); i++) {
                [results addObject:@(floatBuffer[i])];
            }
            return [results copy];
        } catch (const std::exception& exception) {
            NSLog(@"%s", exception.what());
        }
        return nil;
    }

The model saving code:

    net = PoseEstimationWithMobileNet()
    checkpoint = torch.load(args.checkpoint_path, map_location='cpu')
    load_state(net, checkpoint)
    torch.save(net.state_dict(), '/Users/morvaybalazs/PycharmProjects/model.pt')

    model = PoseEstimationWithMobileNet()
    model.load_state_dict(torch.load('/Users/morvaybalazs/PycharmProjects/model.pt'))
    model.eval()

    traced_script_module = torch.jit.script(model)

    from torch.utils.mobile_optimizer import optimize_for_mobile
    torchscript_model_optimized = optimize_for_mobile(traced_script_module, backend='metal')
    print(torch.jit.export_opnames(torchscript_model_optimized))
    torchscript_model_optimized._save_for_lite_interpreter("mobile_model_metal.pt")
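The operator list printed by `torch.jit.export_opnames` above can be compared against the set of operators a backend is known to implement. The following is a minimal sketch of that comparison. The helper `ops_missing_from` is hypothetical, and both example collections are placeholders, not the real output of this model or an authoritative list of Metal operators. The real supported set would have to be collected by hand, for example from the sources under `aten/src/ATen/native/metal` that the trace points to.

```python
from typing import Iterable, List, Set


def ops_missing_from(exported_ops: Iterable[str], supported_ops: Set[str]) -> List[str]:
    """Return the exported operator names that are absent from supported_ops, sorted."""
    return sorted(op for op in set(exported_ops) if op not in supported_ops)


# Placeholder values for illustration only (assumptions, not real data):
exported = ["aten::conv2d", "aten::elu", "aten::cat"]  # stand-in for export_opnames output
supported = {"aten::conv2d", "aten::cat"}              # stand-in for a hand-collected list

print(ops_missing_from(exported, supported))  # ['aten::elu']
```

Any name reported here is a candidate for an operator that would fall back to a non-Metal kernel at run time.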

The exception is thrown when the forward function gets called.

Is it possible that the model still uses quantization?

I am a beginner in Python and PyTorch, so any help or comment is much appreciated!