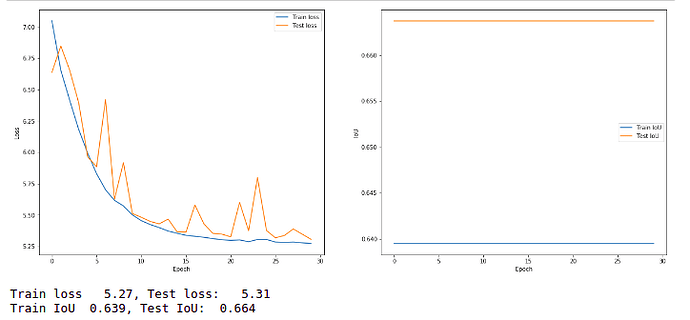

Hi all, I've run into a problem that may look funny but is serious. My model is a U-Net and my metric is IoU, computed as below. During training the loss decreases, but the IoU stays at a fixed value and doesn't move. By the way, I have a really small dataset (30 images for training and 10 for validation), just for debugging my code; I will increase the dataset size later. Could the dataset size be the reason, or what am I doing wrong?

Thanks in advance for your help.

    def calc_iou(prediction, ground_truth):
        n_images = len(prediction)
        intersection, union = 0, 0
        for i in range(n_images):
            intersection += np.logical_and(prediction[i] > 0, ground_truth[i] > 0).astype(np.float32).sum()
            union += np.logical_or(prediction[i] > 0, ground_truth[i] > 0).astype(np.float32).sum()
        return float(intersection) / union

I also tested the IoU function on a random array and it works fine:

    ground_truth = np.random.rand(1, 480, 3760)
    prediction = ground_truth
    calc_iou(prediction, ground_truth)  # returns 1
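The random-array check above passes almost by construction: `np.random.rand` draws from [0, 1), so practically every value is `> 0` and both masks come out as all foreground. A more discriminating check might look like the sketch below. The 4x4 masks and the constant `0.01` "probability" map are made up for illustration, and the second case assumes the model's outputs are strictly positive (for example after a sigmoid), which may not match the actual network.

```python
import numpy as np

def calc_iou(prediction, ground_truth):
    # Same logic as the function in the post: a pixel is foreground when > 0.
    n_images = len(prediction)
    intersection, union = 0, 0
    for i in range(n_images):
        intersection += np.logical_and(prediction[i] > 0, ground_truth[i] > 0).astype(np.float32).sum()
        union += np.logical_or(prediction[i] > 0, ground_truth[i] > 0).astype(np.float32).sum()
    return float(intersection) / union

# Hypothetical ground truth: top two rows are foreground (8 pixels).
ground_truth = np.zeros((1, 4, 4), dtype=np.float32)
ground_truth[0, :2, :] = 1

# Hypothetical prediction: middle two rows are foreground (8 pixels).
prediction = np.zeros((1, 4, 4), dtype=np.float32)
prediction[0, 1:3, :] = 1

# Overlap is one row (4 pixels), union is three rows (12 pixels).
iou_partial = calc_iou(prediction, ground_truth)
print(iou_partial)  # about 0.333

# Assumed case: outputs that are small but strictly positive everywhere.
probs = np.full((1, 4, 4), 0.01, dtype=np.float32)

# Every pixel passes the "> 0" test, so the predicted mask is all foreground:
# intersection is 8 pixels, union is 16 pixels.
iou_probs = calc_iou(probs, ground_truth)
print(iou_probs)  # 0.5
```

If the second case resembles the real predictions, the metric would depend only on the ground-truth masks and would stay constant no matter how the loss changes.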

Here is my train function as well:

    def train(model, optimizer, batch_size=32, num_epochs=100, silent=False, model_name='UNet'):
        train_loss_plot = []
        test_loss_plot = []
        train_accur_plot = []
        test_accur_plot = []

        for epoch in range(num_epochs):
            if (not silent):
                print("Epoch {:2}: ".format(epoch), end='')
            else:
                print('.', end='')

            model.train()
            total_train_loss = 0
            correct_examples_train = 0
            for batch_idx, data in enumerate(tqdm(train_dataloader)):
                x, y = data
                x, y = x.to(device), y.to(device)
                optimizer.zero_grad()
                pred = model(x)
                loss = criterion(pred.view(pred.shape[0], -1), y.view(y.shape[0], -1).float()) * batch_size
                loss.backward()
                optimizer.step()
                # schedular.step()
                total_train_loss += loss.item()
                correct_examples_train += calc_iou(pred.cpu().detach().numpy(),
                                                   y.cpu().detach().numpy()) * batch_size

            model.eval()
            correct_examples_test = 0
            total_test_loss = 0
            for batch_idx, data in enumerate(test_dataloader):
                x_, y_ = data
                x_, y_ = x_.to(device), y_.to(device)
                pred_ = model(x_)
                loss_ = criterion(pred_.view(pred_.shape[0], -1), y_.view(y_.shape[0], -1).float()) * batch_size
                total_test_loss += loss_.item()
                correct_examples_test += calc_iou(pred_.cpu().detach().numpy(),
                                                  y_.cpu().detach().numpy()) * batch_size

            train_loss_plot.append(total_train_loss / len(train_loader))
            test_loss_plot.append(total_test_loss / len(val_loader))
            train_accur_plot.append(correct_examples_train / len(train_loader))
            test_accur_plot.append(correct_examples_test / len(val_loader))

            if (not silent):
                display.clear_output(wait=True)
                plt.figure(figsize=(20, 8))
                plt.subplot(1,2,1)
                plt.plot(range(len(train_loss_plot)), train_loss_plot)
                plt.plot(range(len(test_loss_plot)), test_loss_plot)
                plt.legend(['Train loss', 'Test loss'])
                plt.xlabel('Epoch')
                plt.ylabel('Loss')
                plt.subplot(1,2,2)
                plt.plot(range(len(train_accur_plot)), train_accur_plot)
                plt.plot(range(len(test_accur_plot)), test_accur_plot)
                plt.legend(['Train IoU', 'Test IoU'])
                plt.xlabel('Epoch')
                plt.ylabel('IoU')
                plt.show()
                print('Train loss {:6.3}, Test loss: {:6.3}'\
                      .format(total_train_loss / len(train_loader),
                              total_test_loss / len(val_loader)))
                print('Train IoU {:6.3}, Test IoU: {:6.3}'\
                      .format(correct_examples_train / len(train_loader),
                              correct_examples_test / len(val_loader)))

        torch.save(model.state_dict(), model_name + '_best.pth')

    train(model, optimizer, batch_size=8, num_epochs=30)