Why is bias not quantized upon pytorch static quantization? Or is it not required for deployment?

if you break down the quantized operations into the integer components used to speed up the computation, all the integer stuff happens before the bias is added in so it wouldn’t speed anything up.

bias_int32 = torch.quantize_per_tensor(bias_float_vector, scale=S1*S2, zero_point=0, torch.qint32)

Is this what happens during inference?

At a high level it depends on the kernel/backend being used, you’d be better off asking the fbgemm or qnnpack folks. At a lower level though I believe the broad strokes are correct.

Here is a document that may be more helpful: gemmlowp/quantization.md at master · google/gemmlowp · GitHub

yeah this is true, we would quantize the bias with the scale of input and weight.

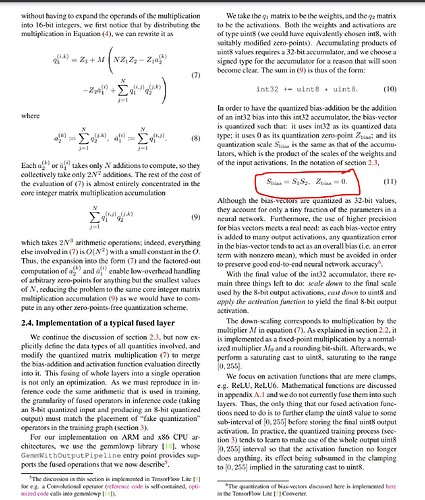

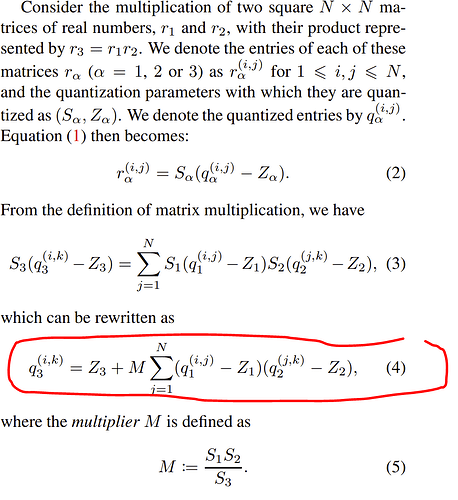

This is what we do, from our internal notes:

z = qconv(wq, xq)

# z is at scale (weight_scale*input_scale) and at int32

# Convert to int32 and perform 32 bit add

bias_q = round(bias/(input_scale*weight_scale))

z_int = z + bias_q

# rounding to 8 bits

z_out = round[(z_int)*(input_scale*weight_scale)/output_scale) - z_zero_point]

z_out = saturate(z_out)

Not exactly sure if we add bias with floating point add or int32 add, but it’s one of them.

We’ll add documentations for this somewhere in the future.

btw, if you want to do quantization differently, e.g. like passing in int32 bias, and evaluate the impact on accuracy, here is the design that support this: rfcs/RFC-0019-Extending-PyTorch-Quantization-to-Custom-Backends.md at master · pytorch/rfcs · GitHub, this will be more mature in beta release

Thanks for your reply.

Can you tell me the equivalent equation for the residual_block skip addition operation?

- bias_q = round(bias/(input_scale*weight_scale))

bias_q = torch.quantize_per_tensor(bias_float_vector, scale=input_scale*weight_scale, zero_point=0, dtype=torch.qint32)

Is this code-snippet true for the above statement? I wanted to verify with you.

yeah, and this happens in the quantized operator itself, we still just pass in float bias to the quantized operator

there’s also a clamp operation, but otherwise yes the integer values of the quantize_per_tensor output will match the output of the other equation.

you mean the formula for quantized add? I think you can derive through the definition of quantization function and add:

out_int8 = out_fp32 / out_scale + out_zero_point

= (a_fp32 + b_fp32) / out_scale + out_zero_point

= ((a_int8 - a_zero_point) * a_scale + (b_int8 - b_zero_point) * b_scale)/out_scale + out_zero_point

= …

sir, do you know how to quantize bias in per-channel quantization weight ? , i mean the scale parameter for bias

not sure I understand, only bias I know is the bias parameter for convolution or linear modules, is that what you are referring to?

yes, the bias parameter

OK, we support it in FX Graph Mode Quantization, both prepare_fx and convert_fx takes an argument called BackendConfig: prepare_fx — PyTorch master documentation, and here is the doc for BackendConfig: BackendConfig — PyTorch master documentation, you can change the DTypeConfig setting for linear op. it will be something like:

weighted_int8_dtype_config = DTypeConfig(

input_dtype=torch.quint8,

output_dtype=torch.quint8,

weight_dtype=torch.qint8,

**bias_type=torch.quint8**)

if you want to quantize bias to quint8.

Please help with the issues observed here How to generate a fully-quantized model? with

weighted_int8_dtype_config = DTypeConfig(

input_dtype=torch.quint8,

output_dtype=torch.quint8,

weight_dtype=torch.qint8,

bias_dtype=torch.qint32)

conv_config = BackendPatternConfig(torch.nn.Conv2d).add_dtype_config(weighted_int8_dtype_config)

backend_config = BackendConfig("full_int_backend").set_backend_pattern_config(conv_config)

maybe you can try our new workflow: (prototype) PyTorch 2.0 Export Post Training Static Quantization — PyTorch Tutorials 2.0.1+cu117 documentation