I want to test the effect of dropout at test time (I am using PyTorch 0.4). The setup is simple:

Dropout was set to p=0.5 before training (fine-tuning). I am using ResNet-152 (pre-trained on ImageNet). I trained the model on CIFAR-100 for 15 epochs; then, at test time, I tried different p values.

    P = [0, 0.2, 0.5, 0.7, 1]

    for p in P:
        model.dropout.p = p
        test(test_loader)  # prints accuracy

Printed output:

40.5% 40.5% 40.5% 40.5% 40.5%

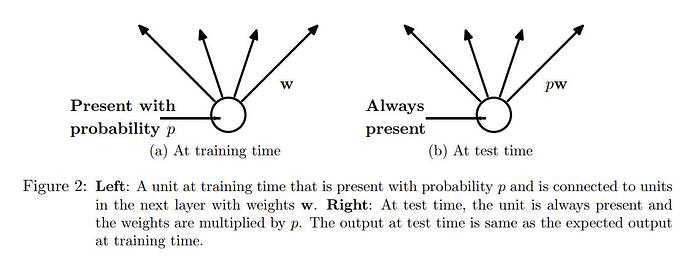

As shown in "Dropout: A Simple Way to Prevent Neural Networks from Overfitting", the value of p should affect the output at test time.

It seems that dropout has no effect at test time. Or am I missing something here?
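One thing that may explain identical numbers is the evaluation-mode behaviour of the layer. `torch.nn.Dropout` uses "inverted" dropout: the rescaling by 1/(1-p) happens during training, and in evaluation mode the layer is the identity. The sketch below is a toy, pure-Python stand-in that mimics this scheme; `ToyDropout` and `eval_outputs` are hypothetical names made up for illustration, not PyTorch code, and it assumes that `test` puts the model in evaluation mode.

```python
import random


class ToyDropout:
    """Toy inverted-dropout layer (hypothetical stand-in, not torch.nn.Dropout)."""

    def __init__(self, p=0.5):
        self.p = p            # probability of zeroing an element
        self.training = True  # mirrors the train/eval flag on a module

    def __call__(self, xs, rng=random):
        if not self.training:
            # Evaluation mode: identity, so p is never consulted.
            return list(xs)
        if self.p >= 1.0:
            # Everything is dropped; avoid dividing by zero below.
            return [0.0 for _ in xs]
        scale = 1.0 / (1.0 - self.p)
        # Training mode: zero each element with probability p, rescale survivors.
        return [0.0 if rng.random() < self.p else x * scale for x in xs]


def eval_outputs(ps, xs):
    """Run the layer in evaluation mode for each p and collect the outputs."""
    layer = ToyDropout()
    layer.training = False
    outputs = []
    for p in ps:
        layer.p = p
        outputs.append(layer(xs))
    return outputs


xs = [1.0, 2.0, 3.0, 4.0]
results = eval_outputs([0, 0.2, 0.5, 0.7, 1], xs)
print(all(out == xs for out in results))  # True: p is ignored in eval mode
```

If `test` does switch to evaluation mode, this would produce the same accuracy for every p. To see an effect, the dropout module would probably have to be left in training mode during the test pass. The paper's formulation differs in that it rescales the weights at test time instead, which is why p matters there.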