What is the difference between embedding layers and linear layers, and how do they work in NLP?

Embedding layers act as trainable "lookup" tables: the input is a tensor of integer indices, and each index selects one row of the layer's weight matrix. Linear layers instead apply a matrix multiplication between the input and their trainable weight parameter, and then add the bias to the result.
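To make the lookup-versus-multiplication distinction concrete, here is a small sketch (sizes chosen arbitrarily for illustration). It checks that an embedding lookup gives the same result as multiplying one-hot encoded indices by the embedding weight, which is what a bias-free linear layer would do. The lookup is simply cheaper because it skips all the multiplications by zero.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
num_embeddings, embedding_dim = 10, 4
emb = nn.Embedding(num_embeddings, embedding_dim)

idx = torch.tensor([3, 7, 3])  # token indices

# Lookup: each index selects one row of emb.weight ([num_embeddings, embedding_dim])
looked_up = emb(idx)

# Equivalent matrix multiplication with one-hot vectors
one_hot = F.one_hot(idx, num_classes=num_embeddings).float()  # [3, 10]
via_matmul = one_hot @ emb.weight                             # [3, 4]

# Same thing as a bias-free nn.Linear; its weight is stored as [out_features, in_features]
lin = nn.Linear(num_embeddings, embedding_dim, bias=False)
with torch.no_grad():
    lin.weight.copy_(emb.weight.T)
via_linear = lin(one_hot)

print(torch.allclose(looked_up, via_matmul))  # True
print(torch.allclose(looked_up, via_linear))  # True
```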

So I can place the embedding layer in the model and pass its output through the linear layer?

Yes, you can use the output of embedding layers in linear layers as seen here:

```python
import torch
import torch.nn as nn

num_embeddings = 10   # vocabulary size: valid indices are 0..9
embedding_dim = 100   # size of each embedding vector

emb = nn.Embedding(num_embeddings, embedding_dim)

output_dim = 5
lin = nn.Linear(embedding_dim, output_dim)

batch_size = 2
x = torch.randint(0, num_embeddings, (batch_size,))  # integer indices

out = emb(x)
print(out.shape)
# torch.Size([2, 100])

out = lin(out)
print(out.shape)
# torch.Size([2, 5])
```
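In NLP the input is usually a batch of token-id sequences rather than one index per sample. The sketch below reuses the sizes from the example above with an arbitrary sequence length of 7: `nn.Linear` acts on the last dimension, so each token is projected independently. Mean pooling before the linear layer is shown as one possible (assumed, not the only) way to get a single prediction per sentence.

```python
import torch
import torch.nn as nn

num_embeddings, embedding_dim, output_dim = 10, 100, 5
emb = nn.Embedding(num_embeddings, embedding_dim)
lin = nn.Linear(embedding_dim, output_dim)

batch_size, seq_len = 2, 7
# A batch of token-id sequences: [batch_size, seq_len]
tokens = torch.randint(0, num_embeddings, (batch_size, seq_len))

embedded = emb(tokens)
print(embedded.shape)  # torch.Size([2, 7, 100])

# nn.Linear operates on the last dimension, so each token is projected independently
per_token = lin(embedded)
print(per_token.shape)  # torch.Size([2, 7, 5])

# For a sentence-level prediction, pool over the sequence first (mean pooling is one choice)
per_sentence = lin(embedded.mean(dim=1))
print(per_sentence.shape)  # torch.Size([2, 5])
```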

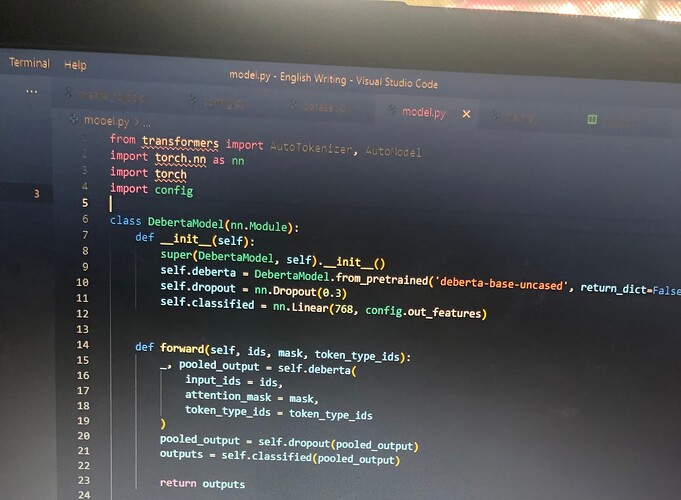

Thanks. After placing it in the model, I am working on multi-output classification: I need to output 6 target variables using nn.Sequential in a BERT model, but I received an error saying nn.Sequential doesn't work with multiple inputs. Why?

That's correct. nn.Sequential expects exactly one input, passes it through each of its modules in order, and returns one output.
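A quick way to see this, with arbitrary layer sizes: the sketch below passes one tensor through an nn.Sequential, then tries to pass a second positional argument (a hypothetical mask), which raises a TypeError. Note that "one output" can still be a tensor with 6 columns, one logit per target, so the 6 targets themselves are not the problem; the extra inputs a BERT-style model typically needs (such as an attention mask) are.

```python
import torch
import torch.nn as nn

# One input in, one output out: here the output holds 6 logits per sample
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 6))

x = torch.randn(2, 8)
out = model(x)
print(out.shape)  # torch.Size([2, 6])

mask = torch.ones(2, 8)  # hypothetical second input
raised = False
try:
    model(x, mask)  # nn.Sequential's forward accepts only one input
except TypeError as err:
    raised = True
    print("TypeError:", err)
```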

You can check other topics discussing this limitation (e.g. here), which describe some workarounds.

Then I can just do it without nn.Sequential.
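Dropping nn.Sequential usually means subclassing nn.Module and writing `forward` yourself, which can accept any number of inputs. The sketch below is hypothetical: a tiny embedding-plus-mean-pooling encoder stands in for BERT so the block runs without pretrained weights, and it assumes the 6 targets are binary (multi-label), hence one nn.Linear with 6 outputs trained with BCEWithLogitsLoss. In a real model the stand-in would be replaced by the pretrained BERT encoder, and if each target had several classes you would use one output head per target instead.

```python
import torch
import torch.nn as nn

class MultiOutputClassifier(nn.Module):
    """Hypothetical sketch: a tiny encoder standing in for BERT, plus a 6-output head."""

    def __init__(self, vocab_size=100, hidden_dim=32, num_targets=6):
        super().__init__()
        # Stand-in for the BERT encoder (assumption, not BERT itself)
        self.embedding = nn.Embedding(vocab_size, hidden_dim)
        self.dropout = nn.Dropout(0.1)
        # One logit per target variable
        self.head = nn.Linear(hidden_dim, num_targets)

    def forward(self, input_ids, attention_mask):
        # A custom forward can take several inputs, unlike nn.Sequential
        hidden = self.embedding(input_ids)           # [batch, seq_len, hidden_dim]
        mask = attention_mask.unsqueeze(-1).float()  # [batch, seq_len, 1]
        # Mean-pool over non-padding tokens only
        pooled = (hidden * mask).sum(dim=1) / mask.sum(dim=1).clamp(min=1.0)
        return self.head(self.dropout(pooled))       # [batch, num_targets]

torch.manual_seed(0)
model = MultiOutputClassifier()
input_ids = torch.randint(0, 100, (2, 12))
attention_mask = torch.ones(2, 12, dtype=torch.long)
attention_mask[1, 8:] = 0  # pretend the second sequence is padded

logits = model(input_ids, attention_mask)
print(logits.shape)  # torch.Size([2, 6])

# Assumption: each of the 6 targets is binary (multi-label), hence BCEWithLogitsLoss
targets = torch.randint(0, 2, (2, 6)).float()
loss = nn.BCEWithLogitsLoss()(logits, targets)
loss.backward()
```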