Is it possible to apply a softmax layer in PyTorch? I know TensorFlow can do it.

Could you explain your use case and your concerns a bit?

Which task would you like to use with a softmax layer?

PyTorch has a softmax implementation, but I’m not sure I understand your issue completely.

I mean, how do I apply a softmax layer in PyTorch? @ptrblck

You can apply it as any other layer:

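    import torch
    import torch.nn as nn

    softmax = nn.Softmax(dim=1)  # normalize over the class dimension
    x = torch.randn(2, 10)       # batch of 2 samples, 10 scores each
    output = softmax(x)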
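As a quick check of what `dim=1` means here, the following sketch (variable names are illustrative) verifies that each row of the output sums to 1 and that the functional form `F.softmax` gives the same result:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # only to make the sketch reproducible

x = torch.randn(2, 10)          # batch of 2 samples, 10 class scores each
probs = nn.Softmax(dim=1)(x)    # normalize across the class dimension

# Each row is now a probability distribution over the 10 classes.
row_sums = probs.sum(dim=1)
print(row_sums)

# The functional API computes the same thing without creating a module.
same = torch.allclose(probs, F.softmax(x, dim=1))
print(same)
```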

As the final layer? Right? @ptrblck

It depends on your use case and e.g. the loss function you are using.

nn.CrossEntropyLoss expects raw logits for example, while nn.NLLLoss expects log probabilities (F.log_softmax applied to the last layer).

Without some more information it is hard to answer your general questions.
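To make the relationship between these two losses concrete, here is a small sketch with random data (the tensor shapes and names are assumptions for illustration only). It checks that `nn.CrossEntropyLoss` on raw logits matches `nn.NLLLoss` on the output of `F.log_softmax`:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

logits = torch.randn(4, 3)            # raw model outputs: 4 samples, 3 classes
target = torch.tensor([0, 2, 1, 2])   # ground-truth class indices

# Option 1: feed raw logits directly into CrossEntropyLoss.
loss_ce = nn.CrossEntropyLoss()(logits, target)

# Option 2: apply log_softmax yourself, then use NLLLoss.
log_probs = F.log_softmax(logits, dim=1)
loss_nll = nn.NLLLoss()(log_probs, target)

print(loss_ce.item(), loss_nll.item())
```

The two values should agree up to floating-point tolerance, which is why adding an extra softmax before `nn.CrossEntropyLoss` is usually a mistake.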

The model has no softmax layer, so on mobile I have to apply softmax myself. I would like the model to do that instead. I searched the docs but could not find how to do it. I also have a question about converting the input type: how do I convert the multiArray to an image? The project is an image classifier. Thanks for your replies! @ptrblck

How did you define this model?

Did you export a PyTorch model to ONNX or did you take another path?

Yes, I converted the PyTorch model to ONNX and then converted that to a Core ML model. In the demo Apple offers, the model handles the softmax layer itself and only shows the result. My model, however, returns a multiArray, and the softmax still has to be applied to it.

Are there any links that cover this case?

- 1. Change the input and output types: change the input to an image and convert the output to a dictionary ({string: float})

Could you post a link to the demo, please?

I don’t have a demo. I received a model from PyTorch, and it doesn’t apply softmax. Now I want to do that in the PyTorch model.

I was referring to this demo you mentioned.

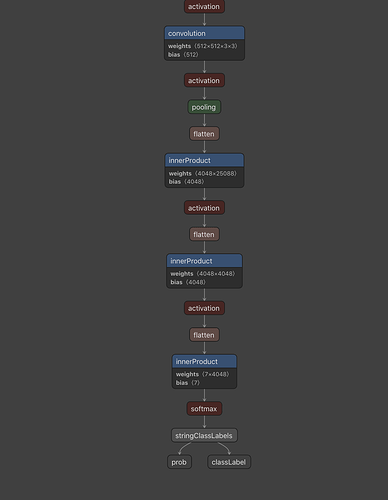

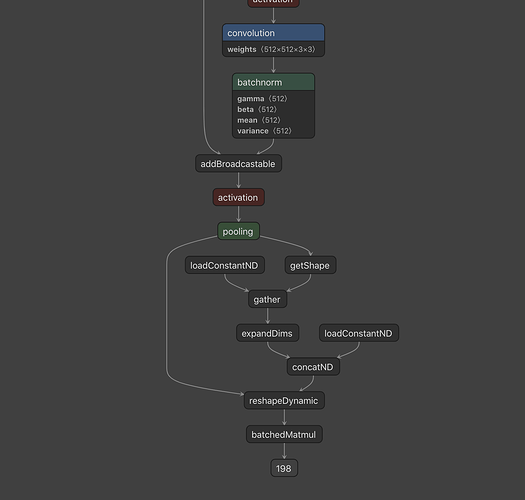

Based on the graph you’ve posted I assume it’s a CoreML graph, as these operations do not seem to come from PyTorch directly.

Could you explain your use case completely, i.e. what kind of model or code are you using now and what are you trying to achieve?

I use an mlmodel that comes from PyTorch: the PyTorch model is converted to ONNX and then to an mlmodel.

I don’t want to apply softmax on the mobile side, so I hope the machine learning engineer can change the code so that the model applies the softmax layer.

For instance, how do I change the input and output types? The project is an image classifier.

Could you post a (minimal) code snippet to reproduce your workflow or link to a repository?

I’m currently unsure how to help without seeing what you actually did.

If you cannot publish the model, just create a dummy model with a similar architecture.

Sorry, perhaps my description was unclear.

I only want to solve this one issue.

The prediction result is a multiArray, and when I receive it I have to process it on the mobile side. I don’t want to do that there, so I’d like to know whether it is best practice to do it in the model.

@ptrblck I mean, instead of applying softmax on mobile, is it best practice to do it in PyTorch?

It depends on your actual use case and the applied loss function.

For a multi-class classification problem, you wouldn’t use a softmax layer as your activation output, but pass the raw logits into nn.CrossEntropyLoss (or use F.log_softmax and nn.NLLLoss alternatively).

That being said, I’m still unsure how the graphs were created, whether a conversion library added these softmax layers, or where these layers are coming from in general.
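If the goal is to have probabilities come out of the model itself at inference time, one common pattern is to train on raw logits and attach the softmax only afterwards. A minimal sketch, assuming a stand-in classifier (`DummyClassifier`, the 7 output classes, and the 48x48 input are hypothetical, not the model from this thread):

```python
import torch
import torch.nn as nn

class DummyClassifier(nn.Module):
    """Hypothetical stand-in for a trained image classifier."""

    def __init__(self, num_classes=7):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
        )
        self.fc = nn.Linear(8, num_classes)

    def forward(self, x):
        x = self.features(x).flatten(1)
        return self.fc(x)  # raw logits, suitable for nn.CrossEntropyLoss

trained = DummyClassifier()  # imagine this was trained on raw logits

# Wrap the trained model so that inference returns probabilities.
inference_model = nn.Sequential(trained, nn.Softmax(dim=1)).eval()

with torch.no_grad():
    probs = inference_model(torch.randn(1, 3, 48, 48))
print(probs.shape, probs.sum().item())
```

The wrapped module is what you would then hand to an exporter. This sketch does not cover the ONNX or Core ML conversion itself.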

The project is an image classifier for emotions.

I also want to convert the result to a dict, but unfortunately the forward() function cannot return a dict. How can I do that?

You can return dicts in your forward method:

    import torch
    import torch.nn as nn

    class MyModel(nn.Module):
        def __init__(self):
            super(MyModel, self).__init__()
            self.fc1 = nn.Linear(1, 1)
            self.fc2 = nn.Linear(1, 1)

        def forward(self, x):
            x1 = self.fc1(x)
            x2 = self.fc2(x)
            # Any Python container of tensors can be returned here.
            return {'x1': x1, 'x2': x2}

    model = MyModel()
    out_dict = model(torch.randn(1, 1))
    print(out_dict)

> {'x1': tensor([[0.5168]], grad_fn=<AddmmBackward>), 'x2': tensor([[0.2288]], grad_fn=<AddmmBackward>)}
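For the classifier use case, the same idea can map class names to probabilities. This is a sketch under assumptions: the label list `EMOTIONS` and the tiny `EmotionNet` model are made up for illustration, and whether a dict output survives an ONNX or Core ML conversion is not covered here.

```python
import torch
import torch.nn as nn

EMOTIONS = ["angry", "happy", "sad"]  # hypothetical labels

class EmotionNet(nn.Module):
    """Hypothetical classifier that returns {label: probability}."""

    def __init__(self, in_features=16):
        super().__init__()
        self.fc = nn.Linear(in_features, len(EMOTIONS))

    def forward(self, x):
        probs = torch.softmax(self.fc(x), dim=1)
        # One entry per label; each value has shape [batch_size].
        return {name: probs[:, i] for i, name in enumerate(EMOTIONS)}

model = EmotionNet().eval()
with torch.no_grad():
    out = model(torch.randn(1, 16))

# Convert the single-sample result to plain Python floats.
as_floats = {name: p.item() for name, p in out.items()}
print(as_floats)
```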

Yes, it absolutely works.