Problem

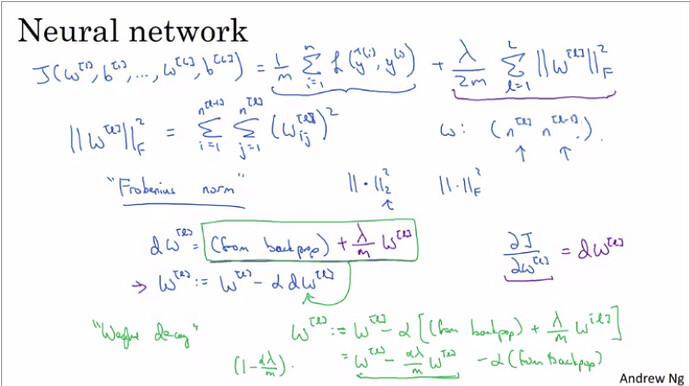

I am following Andrew Ng’s deep learning course on Coursera. He warns that forgetting adding L2 regularization term into loss function might lead to wrong conclusions about convergence.

I know the L2 regularization could be implemented through

weight_decay argument in Adam(model.parameters(), lr=1e-4, weight_decay=1.0). However, I am not sure

- Whether regularization term is somehow automatically added to loss value when

weight_decayis not 0? - If the answer to the first point is “no” and I add regularization through something like following, would it be like I add double regularization to my network?

for block in model.children():

loss += torch.norm(block.weight)

- The second point assumes that blocks are all

nn.Linear, but what if I would like to get the true loss term for CNN and LSTM (whereblock.weightmight be replaced to architecture-specific weight tensor).