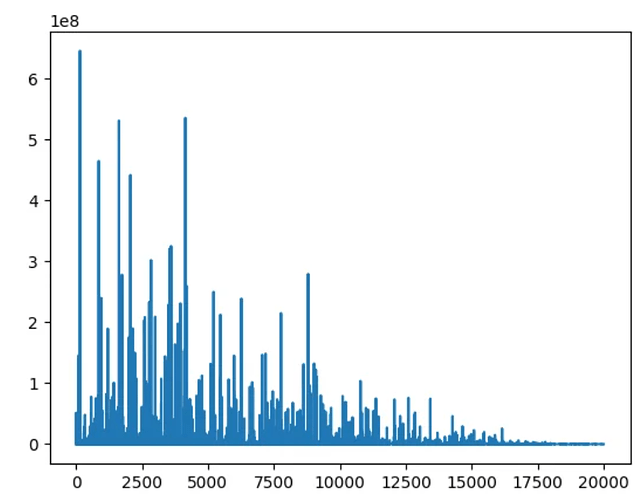

My loss changes over iterations as shown in the figure above.

Is my loss normal?

I use `optimizer = optim.SGD(parameters, lr=args.learning_rate, weight_decay=args.weight_decay_optimizer)`, and I train three standalone models simultaneously. The loss depends on all three models, but they don't share any parameters.
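For reference, here is a minimal sketch of the setup as I understand it: one SGD optimizer over the combined parameters of three independent models, with a single loss that depends on all three outputs. The layer shapes, the joint loss (MSE on the summed outputs), and the values standing in for `args.learning_rate` and `args.weight_decay_optimizer` are all hypothetical placeholders, not my actual models.

```python
import itertools

import torch
import torch.nn as nn
import torch.optim as optim

# Hypothetical values standing in for args.learning_rate / args.weight_decay_optimizer.
learning_rate = 0.01
weight_decay = 1e-4

torch.manual_seed(0)

# Three standalone models (placeholder architectures) that share no parameters.
model_a = nn.Linear(8, 4)
model_b = nn.Linear(8, 4)
model_c = nn.Linear(8, 4)

# A single optimizer over the union of all three parameter sets.
parameters = itertools.chain(
    model_a.parameters(), model_b.parameters(), model_c.parameters()
)
optimizer = optim.SGD(parameters, lr=learning_rate, weight_decay=weight_decay)


def train_step(x, target):
    optimizer.zero_grad()
    # Hypothetical joint loss: it depends on the outputs of all three models.
    out = model_a(x) + model_b(x) + model_c(x)
    loss = nn.functional.mse_loss(out, target)
    loss.backward()  # gradients flow into every model's parameters
    optimizer.step()
    return loss.item()


x = torch.randn(32, 8)
target = torch.randn(32, 4)
losses = [train_step(x, target) for _ in range(100)]
```

Because the models share no parameters, chaining their `.parameters()` into one optimizer is equivalent to updating each with the same SGD settings; the only coupling between them is through the shared loss.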

Why does my loss trend differ from the curves in many papers, which decrease steadily?
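One thing I could check is whether the comparison is apples to apples. Paper figures often show a smoothed or per-epoch-averaged loss, not the raw per-iteration value (an assumption on my part; each paper may differ). A minimal sketch of two such transforms I could apply to my own per-iteration losses before comparing; `smooth_ema`, `epoch_means`, and the `beta=0.98` default are hypothetical choices, not something from a specific paper:

```python
def smooth_ema(values, beta=0.98):
    """Bias-corrected exponential moving average of a per-iteration loss list."""
    smoothed = []
    avg = 0.0
    for step, v in enumerate(values, start=1):
        avg = beta * avg + (1 - beta) * v
        # Divide by (1 - beta**step) so early values are not biased toward 0.
        smoothed.append(avg / (1 - beta ** step))
    return smoothed


def epoch_means(values, iters_per_epoch):
    """Average the per-iteration losses within each epoch (last chunk may be shorter)."""
    means = []
    for i in range(0, len(values), iters_per_epoch):
        chunk = values[i:i + iters_per_epoch]
        means.append(sum(chunk) / len(chunk))
    return means
```

Plotting `smooth_ema(losses)` or `epoch_means(losses, iters_per_epoch)` next to the raw curve would show whether the underlying trend is actually decreasing and the spikes are mostly mini-batch noise.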