I’m having an issue where, after loading from my checkpoint, my model does not give me the same results. I’ve tested the exact same code during training and it works as expected, but after loading from a checkpoint I get a completely different result.

I think I have narrowed it down to the `nn.Embedding` layer. I’ve checked that the embedding layer has the same weights, but when I look up a specific vocab index in the embedding layer, I get different results during training vs. inference with the same code.
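For reference, `nn.Embedding` performs a plain row lookup into its `weight` matrix, so for the same index its output should be bit-for-bit identical to the corresponding weight row. A minimal sketch of that check, assuming hypothetical vocabulary and embedding sizes (not taken from the model in question) and the default `max_norm=None`. With `max_norm` set, the forward pass renormalizes the looked-up rows of `weight` in place, so the weights themselves can change between lookups.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes for illustration only.
vocab_size, embed_dim = 10, 4
emb = nn.Embedding(vocab_size, embed_dim)  # max_norm defaults to None

idx = torch.tensor([7])   # look up vocab index 7
looked_up = emb(idx)[0]   # shape: (embed_dim,)
row = emb.weight[7]       # the same row read directly from the weight matrix

# The lookup is a plain indexing operation, so the two should match exactly.
print(torch.equal(looked_up, row))  # True
```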

For example, here I am saving the weights:

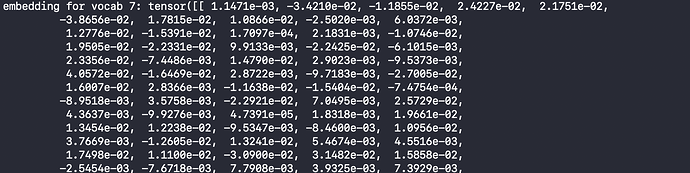

and I get the embedding for vocab 7:

But after loading, even though my weights are the same (the output titled `Parameter containing:`), my embedding for vocab 7 is different!
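For comparison, here is a minimal save/load round trip in which the restored embedding for index 7 matches the original. `TinyModel`, its sizes, and the in-memory buffer are hypothetical stand-ins for the real model and checkpoint file; this is a sketch under those assumptions, not a reproduction of the code in the screenshots.

```python
import io
import torch
import torch.nn as nn

class TinyModel(nn.Module):
    """Hypothetical stand-in for the real model; only the embedding matters here."""
    def __init__(self, vocab_size=10, embed_dim=4):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)

    def forward(self, token_ids):
        return self.embedding(token_ids)

torch.manual_seed(0)
model = TinyModel()
model.eval()

# Save a checkpoint to an in-memory buffer (a file path works the same way).
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)

# Build a fresh model with a different seed so its initial weights differ,
# then overwrite them by loading the checkpoint.
torch.manual_seed(123)
restored = TinyModel()
restored.load_state_dict(torch.load(buffer))
restored.eval()

idx = torch.tensor([7])  # the same vocab index in both runs
with torch.no_grad():
    before = model(idx)
    after = restored(idx)

weights_match = torch.equal(model.embedding.weight, restored.embedding.weight)
lookup_match = torch.equal(before, after)
print(weights_match, lookup_match)  # True True
```

Because the lookup is exact indexing, identical weights can only produce different outputs if the index tensor itself differs between the two runs, so comparing the actual `idx` values passed in during training and after loading is one thing worth checking.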

Is there something I’m not aware of, or is this expected behavior (some precision issue?) and this is a red herring?
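To tell a precision-level discrepancy apart from a genuinely different vector, it can help to measure the size of the difference instead of eyeballing printed values. A minimal sketch using a hypothetical `compare` helper and a random stand-in weight matrix; the float16 round trip only illustrates what rounding noise looks like and is not a claim about what the checkpoint does.

```python
import torch

def compare(a: torch.Tensor, b: torch.Tensor) -> tuple[bool, float]:
    """Hypothetical helper: return (bitwise_equal, max_abs_diff) for same-shape tensors."""
    a32, b32 = a.detach().float(), b.detach().float()
    return torch.equal(a32, b32), (a32 - b32).abs().max().item()

torch.manual_seed(0)
weight = torch.randn(10, 4)  # hypothetical float32 embedding weight

# Identical data: exact match and zero difference.
same = compare(weight[7], weight.clone()[7])

# Float16 round trip: the kind of small discrepancy that rounding produces.
half_round_trip = compare(weight[7], weight.half().float()[7])

print(same)  # (True, 0.0)
print(half_round_trip)
```

Float16 keeps roughly three significant decimal digits, so rounding noise stays small relative to the values themselves; an embedding that is "completely different" is far larger than that kind of error.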