The implementation for basic Weight Drop in the PyTorch NLP source code is as follows:

def _weight_drop(module, weights, dropout):

"""

Helper for `WeightDrop`.

"""

for name_w in weights:

w = getattr(module, name_w)

del module._parameters[name_w]

module.register_parameter(name_w + '_raw', Parameter(w))

original_module_forward = module.forward

def forward(*args, **kwargs):

for name_w in weights:

raw_w = getattr(module, name_w + '_raw')

w = torch.nn.functional.dropout(raw_w, p=dropout, training=module.training)

setattr(module, name_w, w)

return original_module_forward(*args, **kwargs)

setattr(module, 'forward', forward)

This method, clearly, uses the dropout function available in torch.nn.functional to perform the dropping of the weights.

I wasn’t able to find the actual implementation of that dropout function, but I assume it is correct as it is widely used. In the Dropout Paper the authors mention differences in the two phases of training and testing. During training, they drop the activations randomly, which I’m sure the dropout implementation has worked out correctly, and during testing, they multiply the weights in the connecting layer, by p, so that the expected value of the activations is same in the next layer as it was in training, which makes sense. I assume that Dropout does exactly that when it takes ‘module.training’ as an argument, to decide what to do.

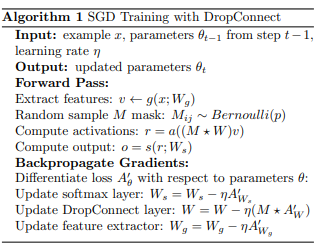

For DropConnect, however, the authors mention the following algorithms for training and testing:

Basically, the training part is the same as Dropout, in that here the weights are dropped, just like Dropout. But, during inference, the process changes. The DropConnect paper describes that ‘averaging’ the values by multiplying the weights with p in case of Dropout, is not justified mathematically as the averaging is done before applying the activation functions.

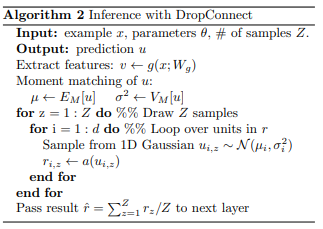

So, for DropConnect paper does this: take the values of the activations, just before the DropConnect weights. Finds the mean and variance of the next layer values, based on W,p and the inputs. Draws Z (could be a hyperparameter as far as I understand) samples from the distribution, assuming it is a Gaussian, to create lots (Z) of possible values. Applies activations on all of those values, and then finally average over Z to get the input for the next weights/layer (softmax in their case).

Since, the original Dropout doesn’t consider this, and if that is the way the implementation for PyTorch Dropout is, then essentially, the DropConnect implementation linked above must be ‘wrong’ (not what they explained in the paper).

I am not sure why DropConnect hasn’t gained much traction here or in DL research, but can someone please explain whether the above implementation is right or wrong?

And, in case it is indeed wrong, what can I do to implement DropConnect correctly?