Per-image class weights for 36 images in my set, with the classes in this order:

['background','Bottom','Sample','Top','Image_Padding']

[1.20993261 5.05289129 0.89530055 2.64417995 0.40314961]

[1.95951562 5.56569002 0.95325091 1.28125122 0.40314961]

[1.41363244 3.76319265 0.80029308 0.76191362 0.68266667]

[1.00538467 4.79064327 0.96426102 3.5831602 0.40314961]

[1.3636288 4.0947204 0.7730125 0.79116316 0.68266667]

[1.44384226 3.83812592 0.80774019 0.74405086 0.68266667]

[1.02882261 5.09017476 0.93776919 3.51211147 0.40314961]

[1.98985881 5.06656359 0.91549906 1.37492919 0.40314961]

[0.61504387 2.0393963 2.84567955 3.49339019 0.44521739]

[6.75628866 2.06055652 3.61677704 0.54226966 0.44521739]

[7.59397451 2.08216044 3.51776704 0.53830547 0.44521739]

[0.98240144 4.45520054 0.99986269 3.60980446 0.40314961]

[5.47502089 1.88918997 3.24194905 0.57687602 0.44521739]

[6.97933972 2.16005274 3.43120419 0.53872585 0.44521739]

[0.6123143 2.04448604 2.81210041 3.62277501 0.44521739]

[1.88837343 5.18071146 0.9027619 1.45087447 0.40314961]

[2.07786937 5.73368329 1.01018882 1.14423396 0.40314961]

[1.31783632 4.10755249 0.75532761 0.82716143 0.68266667]

[1.94209513 5.39835255 0.93864222 1.32623697 0.40314961]

[3.23395016 2.20178061 2.68150573 0.61820583 0.44521739]

[1.35643175 4.07942733 0.77986553 0.7870774 0.68266667]

[5.92014453 1.87245714 2.9721542 0.58326807 0.44521739]

[1.44003516 4.23222473 0.75786065 0.77824486 0.68266667]

[0.61919879 1.9070566 3.04323195 3.49711846 0.44521739]

[0.59790165 2.2189267 2.80547945 3.64595271 0.44521739]

[1.37579511 3.78055956 0.74894006 0.82742251 0.68266667]

[2.22043029 5.73368329 1.00054962 1.11693225 0.40314961]

[7.11574376 2.00599939 3.35051125 0.55053763 0.44521739]

[6.67712685 2.041938 3.1682862 0.55593163 0.44521739]

[0.99402396 4.71821454 0.97328284 3.64798219 0.40314961]

[4.90538922 1.88132625 2.72896107 0.60530156 0.44521739]

[0.66483388 1.87809142 2.62827351 2.96878822 0.44521739]

[0.61756502 2.00415902 2.91465421 3.41778357 0.44521739]

[0.62349919 1.93979577 2.94676259 3.38774877 0.44521739]

[1.38305371 3.80139211 0.76789501 0.80205605 0.68266667]

[1.27093959 3.83027469 0.74753051 0.86992766 0.68266667]

Average weight = [2.46308995 3.45946254 1.83708698 1.69510257 0.49172566]
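For readers wondering where numbers like these come from, here is a minimal sketch. It assumes the weights are "balanced" inverse-frequency weights, i.e. `total_pixels / (n_classes * pixels_in_class)`, computed per label mask and then averaged. The post does not state the formula, so treat that as a guess. The function names, the 512x512 mask size, and the toy mask are all hypothetical.

```python
from collections import Counter

CLASSES = ["background", "Bottom", "Sample", "Top", "Image_Padding"]

def balanced_class_weights(mask, n_classes=len(CLASSES)):
    """Return total_pixels / (n_classes * pixels_in_class) for each class.

    `mask` is a 2-D list of integer class ids (assumed layout).
    A class absent from the mask gets weight 0.0 to avoid dividing by zero.
    """
    counts = Counter(label for row in mask for label in row)
    total = sum(counts.values())
    return [total / (n_classes * counts[c]) if counts[c] else 0.0
            for c in range(n_classes)]

def average_weights(per_image_weights):
    """Element-wise mean of the per-image weight vectors."""
    n = len(per_image_weights)
    return [sum(column) / n for column in zip(*per_image_weights)]

# Toy mask (hypothetical): 362 rows of background (class 0)
# followed by 150 rows of padding (class 4), each 512 pixels wide.
toy_mask = [[0] * 512 for _ in range(362)] + [[4] * 512 for _ in range(150)]
weights = balanced_class_weights(toy_mask)
print(dict(zip(CLASSES, weights)))
```

Under that assumed formula, a class covering a smaller share of the image gets a larger weight, which would be consistent with the large per-image swings in the list above.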

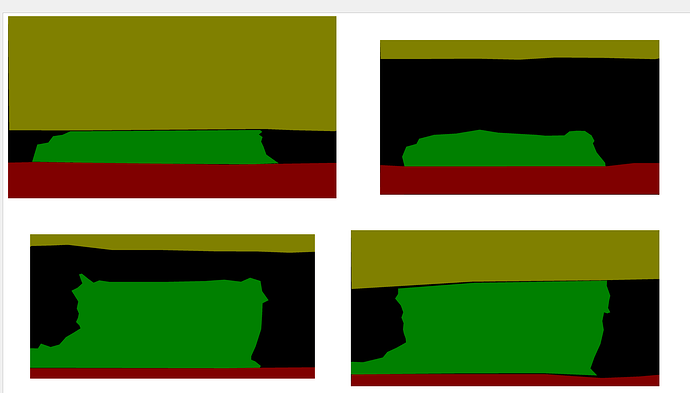

As you can see, there is quite a bit of variation between the images. My loss is around 0.1 after 10 epochs, and I am using a batch size of 1 at the moment. The scene is simple: the images are essentially only black or white, so I would not even call them grayscale. The network has no trouble separating background from foreground, but dividing up the foreground seems to be too much for it. I usually get a prediction back in which one class accounts for all of the pixel predictions. I can certainly bulk up my data set, which is small at ~30 images, but I am not sure that will get me over the top. I am using a UNet++ architecture with cross-entropy loss and the Adam optimizer.

Any suggestions would be much appreciated.

The bottom (red) will always have that orientation, but the green and yellow regions can vary a lot across the data set, which I think is the problem. In the actual image they form a single one-color blob.
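To make the loss setup concrete, here is a rough sketch of a class-weighted cross-entropy over pixels, written in plain Python rather than any framework. It assumes the averaged weights above are used as per-class weights and that the loss is normalized by the sum of the weights of the target pixels. Both are assumptions, since the post does not show the training code. `weighted_cross_entropy` and `softmax` are illustrative helpers, not part of any library.

```python
import math

# Averaged class weights copied from the post, in the order
# background, Bottom, Sample, Top, Image_Padding.
AVERAGE_WEIGHTS = [2.46308995, 3.45946254, 1.83708698, 1.69510257, 0.49172566]

def softmax(logits):
    """Numerically stable softmax over one pixel's class scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_cross_entropy(pixel_logits, pixel_labels, class_weights):
    """Weighted mean of -log p(true class) over all pixels.

    Each pixel contributes with the weight of its true class, and the
    sum is divided by the total weight used (assumed normalization).
    """
    numerator = 0.0
    denominator = 0.0
    for logits, label in zip(pixel_logits, pixel_labels):
        prob_true = softmax(logits)[label]
        weight = class_weights[label]
        numerator += -weight * math.log(prob_true)
        denominator += weight
    return numerator / denominator

# Three hypothetical pixels with uninformative (all-zero) scores.
logits = [[0.0] * 5 for _ in range(3)]
labels = [1, 2, 3]  # Bottom, Sample, Top
loss = weighted_cross_entropy(logits, labels, AVERAGE_WEIGHTS)
print(loss)
```

With uniform predictions over five classes, this loss equals log(5), about 1.609, whatever the weights are, which gives a reference point for reading the loss values during training.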