I’m currently using PyTorch to build a crowd density analysis application, and I’d like to analyse, in real time, the video captured by a camera-equipped drone. How does one go about doing this? Sorry if this isn’t the most appropriate forum for this question!

Which microcontroller are you using in your drone?

Depending on this, it could be better to export your PyTorch model via ONNX to another framework, e.g. Caffe2.

Thanks for the helpful reply! I have an Arduino UNO microcontroller to control my drone.

I’m not familiar with Arduino, but you could try to build PyTorch from source.

What kind of use case do you have in mind?

I noticed that in one of your answers you talk about running PyTorch on a Raspberry Pi 3; I might use that! The specific use case is that I want to run my model in real time on board a drone (with a camera) and observe the results on my computer.

Sure, you could try that.

Let me know if you get stuck compiling it.

May I know what your use case is?

Real-time inference on image data on an embedded platform could be tricky.

I suggest using OpenCV if you need to work with real-time video on a Raspberry Pi. Adrian Rosebrock has a complete blog about it: https://www.pyimagesearch.com
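One way to make "tricky" concrete is to time a single inference on the target board and compare it with the per-frame budget. The following is a minimal sketch, assuming `infer` is any callable that processes one frame; `fake_infer` is a hypothetical stand-in, not a real model.

```python
import time
from statistics import median


def measure_latency(infer, frame, warmup=3, runs=20):
    """Return the median wall-clock seconds per call of infer(frame)."""
    for _ in range(warmup):  # let caches and lazy initialisation settle
        infer(frame)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        timings.append(time.perf_counter() - start)
    return median(timings)


def meets_realtime(latency_s, target_fps):
    """True if one inference fits inside the per-frame budget (1 / target_fps)."""
    return latency_s <= 1.0 / target_fps


def fake_infer(frame):
    # Hypothetical stand-in for a model forward pass: sums the pixel values.
    return sum(frame)


if __name__ == "__main__":
    frame = [0] * (64 * 64)  # placeholder "image" as a flat list
    latency = measure_latency(fake_infer, frame)
    print(f"median latency: {latency * 1e3:.3f} ms")
    print("fits 30 FPS budget:", meets_realtime(latency, 30))
```

Swapping `fake_infer` for the real forward pass (plus frame capture and preprocessing) gives a quick estimate of the frame rate a given board can sustain.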

We are working on making PyTorch models run on microcontrollers, including the Arduino family. Let us know if you want to collaborate.

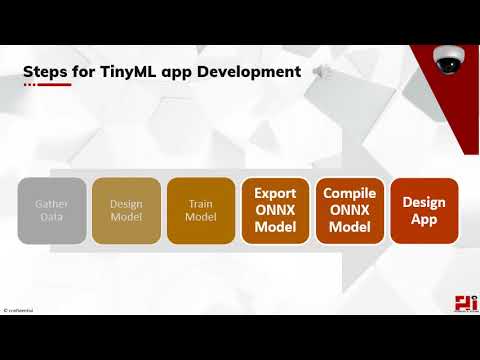

Check out the deepC compiler and Arduino library for an easy way to put PyTorch and ONNX models into Arduino microcontroller applications, including drones.

@srohit0 Is there a way to use your compiler for a quantized PyTorch model?

@ptrblck: I have quantized my PyTorch model using post-training quantization. Now I want to export it with ONNX, but I see that ONNX does not support quantized models. Do you know of an alternative? I am aiming to deploy my model to an Arduino.

Of course @mohaimen.

Use https://pytorch.org/tutorials/advanced/static_quantization_tutorial.html#post-training-static-quantization to quantize the model before exporting and you’re all set.

Now, you can use http://tinyml.studio/ without downloading and installing deepC.

@mohaimen @srohit0 I tried using the quant wrapper on a pretrained Faster R-CNN model; the model size dropped significantly, but the speed didn’t improve.

Could you tell me if there are better ways to quantize a pre-trained model?
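For context on why size and speed can diverge: 8-bit quantization stores each weight as an integer plus a shared scale and zero point, so storage drops to about a quarter of float32, while any speedup generally depends on the runtime actually executing integer kernels for those layers. Below is a minimal pure-Python sketch of affine int8 quantization. It is illustrative only, not PyTorch's implementation, and the function names are hypothetical.

```python
def quant_params(values, qmin=-128, qmax=127):
    """Compute a scale and zero point mapping the value range onto int8."""
    lo = min(min(values), 0.0)  # include 0 so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers, clamping to the int8 range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point))
            for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map integers back to approximate float values."""
    return [(q - zero_point) * scale for q in qvalues]


if __name__ == "__main__":
    weights = [-1.0, -0.2, 0.0, 0.3, 1.0]
    scale, zp = quant_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print("max round-trip error:", max_err, "(one step is", scale, ")")
    print("bytes as float32:", 4 * len(weights), "as int8:", len(weights))
```

The round-trip error per weight stays within about one quantization step, but such errors accumulate across layers, which is one reason accuracy can drop after quantization.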

@kamathis4 I tried using deepC last year and could not make it work. At that time, it did not support quantised models. In the end, I rebuilt the same model in TensorFlow (TF), quantized it using TF Lite, and deployed it on a Sony Spresense using TF Lite Micro. The TF Lite quantization reduced the model accuracy significantly (about 14%).

Therefore, the answer to your question is yes. There are different frameworks on the market, and I found TF was the only mature one that lets you deploy to microcontrollers. Having said that, there is always the option of building your nets in C/C++, which can be ported directly to microcontrollers. You just need some programming skills.
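As a rough illustration of the hand-written C/C++ route, trained weights are often baked into the firmware as constant arrays. Here is a minimal sketch that renders a flat list of weights as a C header. The array name and header layout are hypothetical, and the weights are assumed to have already been pulled out of the model as plain Python floats.

```python
def to_c_header(name, weights, per_line=8):
    """Render a flat list of floats as a C header defining a const float array."""
    guard = f"{name.upper()}_H"
    length_macro = f"{name.upper()}_LEN"
    lines = [
        f"#ifndef {guard}",
        f"#define {guard}",
        "",
        f"#define {length_macro} {len(weights)}",
        f"static const float {name}[{length_macro}] = {{",
    ]
    # Emit the values a few per row so the header stays readable.
    for i in range(0, len(weights), per_line):
        row = ", ".join(f"{w:.8e}f" for w in weights[i:i + per_line])
        lines.append(f"    {row},")
    lines += ["};", "", f"#endif /* {guard} */"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical layer name and values, for illustration only.
    print(to_c_header("fc1_weight", [0.5, -1.0, 2.0, 0.125], per_line=2))
```

The generated header can then be included from the C/C++ inference code, which has to implement the layer arithmetic itself.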

Hi folks,

Has anyone had success putting a PyTorch model on an Arduino yet? I would like to put my trained DETR model onto an Arduino (WAN 1310). If anyone has had any success and would not mind sharing the details with me, that would be great.

Thanks.

Also, here is a link to a deep learning discussion group on Discord: Deep Learning and Microcontrollers.