I am wondering about the relationship between TorchScript and the newly introduced CUDA Graphs integration in PyTorch. I tried to use CUDA Graphs to accelerate my code, which is already traced, but I observe no speedup in my experiments. The profiler traces for the two settings are almost identical. Is TorchScript compatible with CUDA Graphs?

Yes, you can use CUDA graphs on a scripted model.

Are you seeing any performance benefits on the standard model (i.e. before scripting)?

As explained in the docs, small workloads (e.g. small batch sizes) benefit the most from CUDA graphs, because the CPU-side kernel launch overhead that graphs remove is then a larger fraction of the total runtime.
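A minimal sketch of whole-network capture for a traced model, following the pattern described in the PyTorch CUDA Graphs docs: warm up on a side stream, capture one forward pass with `torch.cuda.graph`, then copy new data into the static input tensor and call `replay()`. The toy `nn.Sequential` model, the tensor sizes, and the helper name `make_graphed_forward` are hypothetical stand-ins for the real code.

```python
import torch
import torch.nn as nn


def make_graphed_forward(model, example):
    """Capture one forward pass of `model` into a CUDA graph (hypothetical helper).

    Returns (static_input, static_output, graph). To run new data, copy it into
    static_input and call graph.replay(); the result is written to static_output.
    """
    static_input = example.clone()

    # Warm up on a side stream before capture so lazy initialization and the
    # TorchScript profiling runs happen outside the captured region.
    side = torch.cuda.Stream()
    side.wait_stream(torch.cuda.current_stream())
    with torch.cuda.stream(side), torch.no_grad():
        for _ in range(3):
            model(static_input)
    torch.cuda.current_stream().wait_stream(side)

    # Record the kernel sequence of a single forward pass.
    graph = torch.cuda.CUDAGraph()
    with torch.cuda.graph(graph), torch.no_grad():
        static_output = model(static_input)
    return static_input, static_output, graph


if torch.cuda.is_available():
    # Hypothetical toy model standing in for the real one.
    model = nn.Sequential(nn.Linear(256, 256), nn.ReLU(), nn.Linear(256, 10)).cuda().eval()
    x = torch.randn(8, 256, device="cuda")
    with torch.no_grad():
        traced = torch.jit.trace(model, x)

    static_in, static_out, g = make_graphed_forward(traced, x)

    new_batch = torch.randn(8, 256, device="cuda")
    static_in.copy_(new_batch)  # fill the captured input buffer in place
    g.replay()                  # submit the whole captured kernel sequence at once
    torch.cuda.synchronize()
    with torch.no_grad():
        print(torch.allclose(static_out, traced(new_batch), atol=1e-4))
```

A captured graph replays fixed shapes and fixed memory addresses, so each batch size needs its own capture, and inputs must be copied into the static tensor rather than passed as new tensors.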

Thank you for your help. I haven't had a chance to compare the performance with the standard model yet, but I did run some experiments with small batch sizes, ranging from 8 to 64. With a batch size of 8, I observed a small improvement of about 2% in end-to-end latency, and this improvement shrinks further once the batch size grows beyond 32. The improvement is so small that I cannot tell whether it is just run-to-run noise or a real speedup.
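One way to tell whether a ~2% difference is noise is to collect many latency samples per setting (synchronizing the GPU before reading each timer) and compare the difference in means against the spread of the samples. A minimal sketch using Welch's t-statistic from the standard library only; the function names and the latency numbers in the usage example are hypothetical, not measurements from this thread.

```python
import statistics


def welch_t(a, b):
    """Welch's t-statistic for the difference in means of two independent samples."""
    ma, mb = statistics.mean(a), statistics.mean(b)
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances (n - 1)
    return (ma - mb) / ((va / len(a) + vb / len(b)) ** 0.5)


def relative_speedup(baseline, candidate):
    """Fractional reduction in mean latency of `candidate` relative to `baseline`."""
    return 1.0 - statistics.mean(candidate) / statistics.mean(baseline)


# Hypothetical per-iteration latencies in milliseconds.
baseline_ms = [10.2, 10.4, 10.1, 10.3, 10.5, 10.2]
graphed_ms = [10.0, 10.1, 9.9, 10.2, 10.0, 10.1]

print(f"speedup: {relative_speedup(baseline_ms, graphed_ms):.2%}")
print(f"Welch t: {welch_t(baseline_ms, graphed_ms):.2f}")
```

As a rough rule of thumb, a |t| well above 2 with a reasonable number of samples suggests the difference is unlikely to be pure noise; a value near 0 means the two settings are indistinguishable at this sample size.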

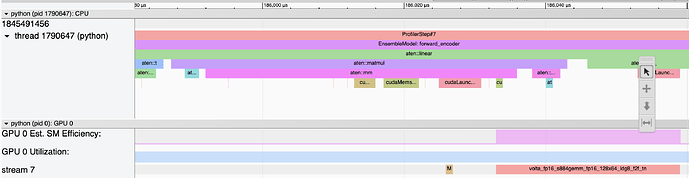

What confuses me more is the trace I collected from my experiments. Here is a screenshot of one of the traces. I expected the CUDA graph to gather all the cudaLaunchKernel calls together at the very beginning of the model, but the trace suggests this is not the case: each operator still has its own cudaLaunchKernel call, and it takes a while for the process to launch these kernels. I'm happy to share the raw trace files if you need them.
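When a graph is replayed, the whole captured kernel sequence is typically submitted through a single `cudaGraphLaunch` runtime call rather than one `cudaLaunchKernel` per operator, so per-operator launch calls in a trace may indicate that the profiled region ran eagerly (e.g. warm-up or capture) instead of through `replay()`. A minimal sketch for checking this with `torch.profiler`, assuming a small hypothetical model; the helper name `launch_call_counts` is illustrative, and exact runtime-event names can differ across CUDA and PyTorch versions, which is why it matches any key containing "Launch".

```python
import torch
from torch.profiler import ProfilerActivity, profile


def launch_call_counts(fn, iters=10):
    """Profile `fn` and count CPU-side runtime calls whose name contains "Launch"."""
    with profile(activities=[ProfilerActivity.CPU, ProfilerActivity.CUDA]) as prof:
        for _ in range(iters):
            fn()
        torch.cuda.synchronize()
    return {evt.key: evt.count for evt in prof.key_averages() if "Launch" in evt.key}


if torch.cuda.is_available():
    # Hypothetical toy model; substitute the real traced model and input.
    model = torch.nn.Sequential(torch.nn.Linear(64, 64), torch.nn.ReLU()).cuda().eval()
    static_x = torch.randn(8, 64, device="cuda")
    with torch.no_grad():
        side = torch.cuda.Stream()
        side.wait_stream(torch.cuda.current_stream())
        with torch.cuda.stream(side):
            for _ in range(3):
                model(static_x)  # warm-up outside capture
        torch.cuda.current_stream().wait_stream(side)

        g = torch.cuda.CUDAGraph()
        with torch.cuda.graph(g):
            static_y = model(static_x)

        print("eager:", launch_call_counts(lambda: model(static_x)))
        print("graph:", launch_call_counts(g.replay))
```

If the "graph" run still shows one kernel-launch call per operator, the code being timed is most likely not going through `replay()`.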