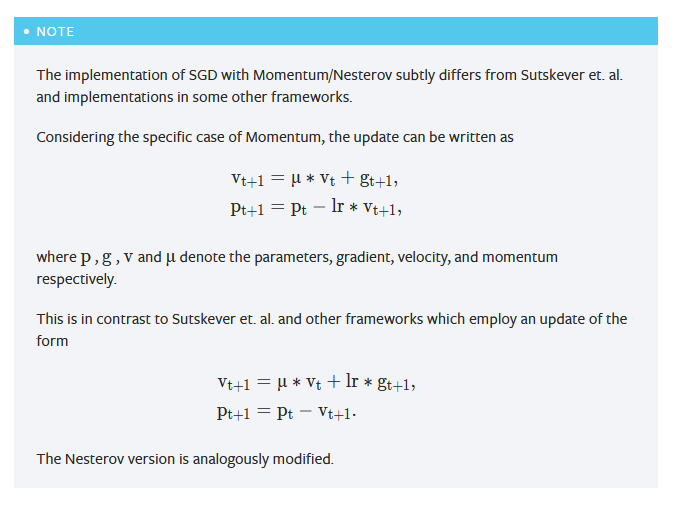

I set the gradients to zero (I tried both a hook and setting `param.grad` directly), and when I print the gradient it does show 0. However, the parameter values still change slightly during training, in a strange way. I initialized the weights to 1, and during training they all decrease steadily by 0.0001 per iteration: 1, 0.9999, 0.9998, and so on (the values below are from after a few iterations). What does this behavior mean, and how can I make sure the parameters don't change?

Gradient:

    tensor([[[0., 0., 0., 0., 0.],
             [0., 0., 0., 0., 0.],
             [0., 0., 0., 0., 0.],
             [0., 0., 0., 0., 0.],
             [0., 0., 0., 0., 0.]]], device='cuda:0')

Weights:

    tensor([[[0.9967, 0.9967, 0.9967, 0.9967, 0.9967],
             [0.9967, 0.9967, 0.9967, 0.9967, 0.9967],
             [0.9967, 0.9967, 0.9967, 0.9967, 0.9967],
             [0.9967, 0.9967, 0.9967, 0.9967, 0.9967],
             [0.9967, 0.9967, 0.9967, 0.9967, 0.9967]]], device='cuda:0')
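One explanation consistent with these numbers is an optimizer with weight decay, for example `torch.optim.Adam(..., weight_decay=...)`. This is an assumption, since the post does not say which optimizer is used. A zero `param.grad` does not stop such an optimizer from updating the parameter: inside `step()` the L2 term `weight_decay * param` is added to the (zero) gradient, and Adam's normalization turns that into a step of roughly `lr` per iteration, however small the decay is. The sketch below re-implements Adam's update with L2 weight decay for a single scalar in plain Python. `adam_steps` is a hypothetical helper, and `lr=1e-4` and `weight_decay=1e-2` are hypothetical values picked to reproduce the 0.0001 steps above.

```python
import math


def adam_steps(p, grad, lr=1e-4, weight_decay=1e-2, betas=(0.9, 0.999), eps=1e-8, steps=3):
    """Hypothetical helper: apply `steps` Adam updates (L2 weight decay form)
    to a scalar parameter `p` whose raw gradient is always `grad`."""
    beta1, beta2 = betas
    m = v = 0.0
    history = [p]
    for t in range(1, steps + 1):
        # Weight decay is folded into the gradient, so g != 0 even when grad == 0.
        g = grad + weight_decay * p
        m = beta1 * m + (1 - beta1) * g          # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g      # second-moment estimate
        m_hat = m / (1 - beta1 ** t)             # bias corrections
        v_hat = v / (1 - beta2 ** t)
        # m_hat / sqrt(v_hat) is close to 1 here, so each step is close to lr.
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
        history.append(p)
    return history


print([round(x, 4) for x in adam_steps(1.0, 0.0, steps=3)])
# [1.0, 0.9999, 0.9998, 0.9997]
```

With `weight_decay=0.0` the same function leaves the parameter at exactly 1.0. In PyTorch, the built-in optimizers skip parameters whose `.grad` is `None`, so freezing a parameter with `param.requires_grad_(False)` (instead of assigning a zero tensor to `param.grad`), or leaving it out of the parameter list passed to the optimizer, keeps it unchanged. A `.grad` tensor full of zeros still goes through the weight-decay and momentum terms.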