Hi, I’m using `torch.distributed.isend` and `torch.distributed.irecv` for asynchronous communication with the NCCL backend.

I assumed that `isend` and `irecv` would run on the same CUDA stream, since I don't pass a `group` argument and both calls therefore use the default NCCL process group:

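```python
import torch.distributed

# input, prev_rank, next_rank and sub_model are defined elsewhere in my script.
for _ in range(16):
    # Post a non-blocking receive from the previous pipeline stage.
    work = torch.distributed.irecv(input, prev_rank)
    ...
    # Wait until the received tensor is ready before using it.
    work.wait()
    output = sub_model(input)
    # Post a non-blocking send to the next pipeline stage.
    torch.distributed.isend(output, next_rank)
    ...
```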

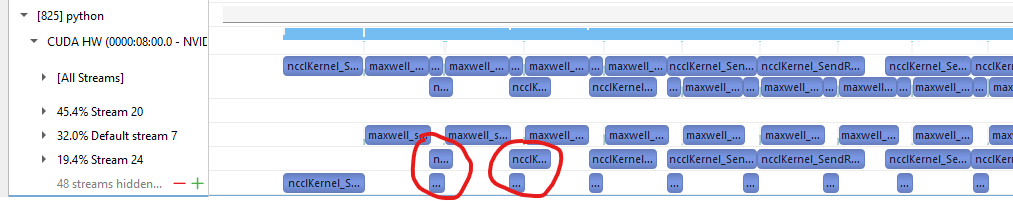

But I found they actually happen on two streams and got nsys profiled results like this:

Note that this trace is from one of the devices: the default stream (stream 7) runs the computation, while the streams circled in red run the send and recv kernels. Is this expected?

Docker image: nvcr.io/nvidia/pytorch:21.10-py3

PyTorch version: 1.10.0a0+0aef44c
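One possible explanation, which I have not verified against the source: ProcessGroupNCCL may create a separate NCCL communicator, each with its own stream, for every pair of ranks that exchange point-to-point messages. If so, a middle pipeline stage that receives from `prev_rank` and sends to `next_rank` talks to two different peers and would use two streams. The sketch below only models that assumed bookkeeping in plain Python. `p2p_key` and `streams_used` are hypothetical helpers and do not call into PyTorch.

```python
# Hypothetical model (an assumption, not the real ProcessGroupNCCL code):
# each point-to-point op is routed to a communicator/stream keyed by the
# unordered pair of ranks involved.

def p2p_key(my_rank: int, peer: int) -> str:
    """Assumed communicator key for a send/recv between two ranks."""
    low, high = sorted((my_rank, peer))
    return f"{low}:{high}"


def streams_used(my_rank: int, world_size: int) -> set:
    """Assumed stream keys one pipeline stage touches per iteration."""
    keys = set()
    if my_rank > 0:
        keys.add(p2p_key(my_rank, my_rank - 1))  # irecv from prev_rank
    if my_rank < world_size - 1:
        keys.add(p2p_key(my_rank, my_rank + 1))  # isend to next_rank
    return keys


if __name__ == "__main__":
    for rank in range(4):
        print(rank, sorted(streams_used(rank, 4)))
```

Under this assumption, the first and last stages use one key each, while every middle stage uses two, which would match seeing send and recv kernels on separate streams.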