I have the following model code:

    import torch.nn as nn
    import torch.nn.functional as F


    class RNN(nn.Module):
        """RNN module (cell type lstm or gru)."""

        def __init__(
            self,
            input_size,
            hid_size,
            num_rnn_layers=1,
            dropout_p=0.2,
            bidirectional=False,
            rnn_type='lstm',
        ):
            super().__init__()
            if rnn_type == 'lstm':
                self.rnn_layer = nn.LSTM(
                    input_size=input_size,
                    hidden_size=hid_size,
                    num_layers=num_rnn_layers,
                    dropout=dropout_p if num_rnn_layers > 1 else 0,
                    bidirectional=bidirectional,
                    batch_first=True,
                )
            else:
                self.rnn_layer = nn.GRU(
                    input_size=input_size,
                    hidden_size=hid_size,
                    num_layers=num_rnn_layers,
                    dropout=dropout_p if num_rnn_layers > 1 else 0,
                    bidirectional=bidirectional,
                    batch_first=True,
                )

        def forward(self, input):
            outputs, hidden_states = self.rnn_layer(input)
            return outputs, hidden_states

    class RNNModel(nn.Module):
        def __init__(
            self,
            input_size,
            hid_size,
            rnn_type,
            bidirectional,
            n_classes=5,
            kernel_size=5,
        ):
            super().__init__()
            self.rnn_layer = RNN(
                input_size=46,  # hid_size * 2 if bidirectional else hid_size,
                hid_size=hid_size,
                rnn_type=rnn_type,
                bidirectional=bidirectional,
            )
            self.conv1 = ConvNormPool(
                input_size=input_size,
                hidden_size=hid_size,
                kernel_size=kernel_size,
            )
            self.conv2 = ConvNormPool(
                input_size=hid_size,
                hidden_size=hid_size,
                kernel_size=kernel_size,
            )
            self.avgpool = nn.AdaptiveAvgPool1d((1))
            self.fc = nn.Linear(in_features=hid_size, out_features=n_classes)

        def forward(self, input):
            print("shape")
            print(input.shape)  # prints torch.Size([32, 1, 187])
            x = self.conv1(input)
            x = self.conv2(x)
            x, _ = self.rnn_layer(x)
            x = self.avgpool(x)
            x = x.view(-1, x.size(1) * x.size(2))
            x = F.softmax(self.fc(x), dim=1)  # .squeeze(1)
            return x

When I create the model with

    m1 = RNNModel(1, 64, 'lstm', True).to(device)

and run

    #from torchsummary import summary
    summary(m1, (1, 187), 32)

the following error is raised:

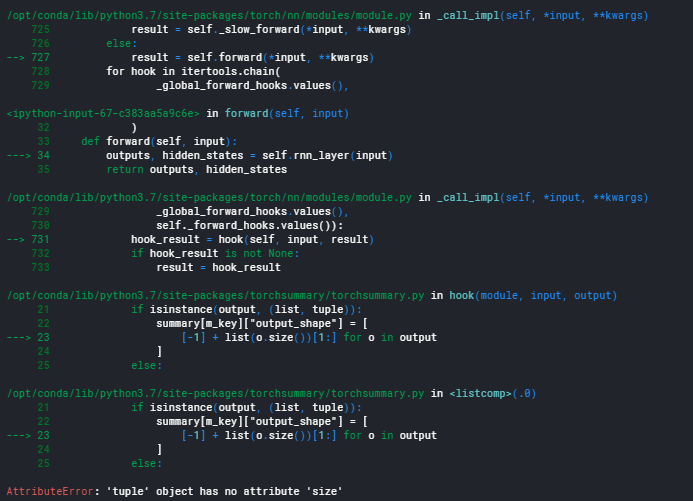

    AttributeError                            Traceback (most recent call last)
    in
          1 #from torchsummary import summary
    ----> 2 summary(m1,(1,187),32)

    /opt/conda/lib/python3.7/site-packages/torchsummary/torchsummary.py in summary(model, input_size, batch_size, device)
         70     # make a forward pass
         71     # print(x.shape)
    ---> 72     model(*x)
         73
         74     # remove these hooks

    /opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
        725             result = self._slow_forward(*input, **kwargs)
        726         else:
    --> 727             result = self.forward(*input, **kwargs)
        728         for hook in itertools.chain(
        729                 _global_forward_hooks.values(),

    in forward(self, input)
         35         x = self.conv1(input)
         36         x = self.conv2(x)
    ---> 37         x, _ = self.rnn_layer(x)
         38         x = self.avgpool(x)
         39         x = x.view(-1, x.size(1) * x.size(2))

    /opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
        725             result = self._slow_forward(*input, **kwargs)
        726         else:
    --> 727             result = self.forward(*input, **kwargs)
        728         for hook in itertools.chain(
        729                 _global_forward_hooks.values(),

    in forward(self, input)
         32             )
         33     def forward(self, input):
    ---> 34         outputs, hidden_states = self.rnn_layer(input)
         35         return outputs, hidden_states

    /opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
        729                 _global_forward_hooks.values(),
        730                 self._forward_hooks.values()):
    --> 731             hook_result = hook(self, input, result)
        732             if hook_result is not None:
        733                 result = hook_result

    /opt/conda/lib/python3.7/site-packages/torchsummary/torchsummary.py in hook(module, input, output)
         21             if isinstance(output, (list, tuple)):
         22                 summary[m_key]["output_shape"] = [
    ---> 23                     [-1] + list(o.size())[1:] for o in output
         24                 ]
         25             else:

    /opt/conda/lib/python3.7/site-packages/torchsummary/torchsummary.py in (.0)
         21             if isinstance(output, (list, tuple)):
         22                 summary[m_key]["output_shape"] = [
    ---> 23                     [-1] + list(o.size())[1:] for o in output
         24                 ]
         25             else:

    AttributeError: 'tuple' object has no attribute 'size'
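To narrow this down, here is a minimal sketch in plain Python (no PyTorch needed) of what the list comprehension at line 23 of the traceback appears to be doing. It rests on one assumption: that the failure comes from `nn.LSTM` returning a nested tuple `(output, (h_n, c_n))`, so the second item the hook iterates over is a tuple rather than a tensor. `FakeTensor`, `flat_hook_shapes` and `nested_shapes` are hypothetical names made up for illustration, and the shapes are only illustrative.

```python
class FakeTensor:
    """Hypothetical stand-in for a tensor; only .size() matters here."""

    def __init__(self, *shape):
        self._shape = tuple(shape)

    def size(self):
        return self._shape


def flat_hook_shapes(output):
    """Mirrors the list comprehension shown at line 23 of the traceback."""
    return [[-1] + list(o.size())[1:] for o in output]


def nested_shapes(output):
    """Hypothetical variant that recurses into nested tuples/lists."""
    if isinstance(output, (list, tuple)):
        return [nested_shapes(o) for o in output]
    return [-1] + list(output.size())[1:]


# nn.LSTM returns (output, (h_n, c_n)); the second element is itself a tuple.
# Illustrative shapes only: batch 32, bidirectional, hidden size 64.
lstm_like_output = (
    FakeTensor(32, 64, 128),
    (FakeTensor(2, 32, 64), FakeTensor(2, 32, 64)),
)

try:
    flat_hook_shapes(lstm_like_output)
    error_message = None
except AttributeError as exc:
    error_message = str(exc)

print(error_message)
print(nested_shapes(lstm_like_output))
```

If this assumption is right, the flat version fails with the same message as above, while the recursive version handles the nested tuple. `nn.GRU` returns `(output, h_n)` with no inner tuple, so the `'gru'` branch would presumably not hit this hook error.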

In the forward method I have also noted the input shape in a comment: it is torch.Size([32, 1, 187]).

The batch size is 32.

Why does this happen? Please help.