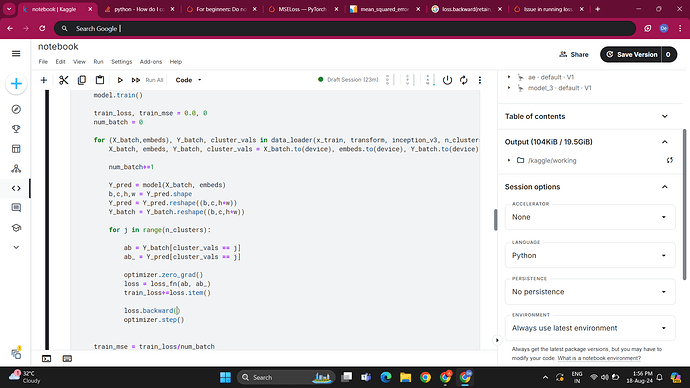

I am clustering the target image into k clusters, and I want the pixels of my predicted image to learn those cluster values.

To do that, I loop over each cluster in range(k) and compute the MSE loss over all pixels that belong to that cluster. Since I use a batch size of 8 images, the loss for each cluster is computed across the whole batch.
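For readers who cannot view the screenshot, here is a minimal sketch of the per-cluster loss described above. It is not the exact code from the image: the function name `per_cluster_mse`, the tensor shapes, and the integer `labels` map (one cluster index per target pixel) are all assumptions made for illustration.

```python
import torch
import torch.nn.functional as F


def per_cluster_mse(pred, target, labels, k):
    """Return a list of k scalar MSE losses, one per cluster.

    Assumed shapes (hypothetical, not taken from the original code):
      pred, target: (B, C, H, W) float tensors
      labels:       (B, H, W) integer tensor with values in [0, k)
    """
    losses = []
    for c in range(k):
        # Boolean mask of every pixel in the batch that belongs to cluster c,
        # broadcast over the channel dimension.
        mask = (labels == c).unsqueeze(1).expand_as(pred)
        if mask.any():
            losses.append(F.mse_loss(pred[mask], target[mask]))
        else:
            # Empty cluster: contribute a zero that is still part of the graph.
            losses.append(pred.sum() * 0.0)
    return losses
```

Each entry in the returned list is a scalar that is still attached to the graph of `pred`, so nothing is freed until `.backward()` is called.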

But then I run into the following error:

RuntimeError: Trying to backward through the graph a second time (or directly access saved tensors after they have already been freed). Saved intermediate values of the graph are freed when you call .backward() or autograd.grad(). Specify retain_graph=True if you need to backward through the graph a second time or if you need to access saved tensors after calling backward.

What I actually want is to accumulate the losses from all clusters and then run backprop once.
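A sketch of that intended pattern, under assumptions: this error commonly appears when `.backward()` is called inside the loop, or when a tensor that still carries a graph from an earlier backward pass is reused. Summing the per-cluster losses into one scalar and calling `.backward()` once after the loop avoids the first case. The `Conv2d` model, the random `labels` map, and the tensor shapes below are hypothetical stand-ins, not the code from the screenshot.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
k = 4

# Hypothetical stand-ins for the real network and data.
model = torch.nn.Conv2d(3, 3, kernel_size=3, padding=1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
images = torch.rand(8, 3, 16, 16)          # batch of 8 input images
target = torch.rand(8, 3, 16, 16)          # target pixel values
labels = torch.randint(0, k, (8, 16, 16))  # assumed cluster index per pixel

optimizer.zero_grad()
pred = model(images)

total_loss = torch.zeros(())
for c in range(k):
    mask = (labels == c).unsqueeze(1).expand_as(pred)
    if mask.any():
        # Detach the target side so no graph from the clustering step is kept.
        cluster_loss = F.mse_loss(pred[mask], target[mask].detach())
        total_loss = total_loss + cluster_loss  # accumulate, no backward here

total_loss.backward()  # single backward pass over the accumulated loss
optimizer.step()
```

If the cluster values are themselves computed from a tensor that requires gradients, detaching them (as with `target[mask].detach()` here) keeps the loop from holding on to a second graph.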

Could you please help me with this issue?